Causal Inference:

The Mixtape.

\[ % Define terms \newcommand{\Card}{\text{Card }} \DeclareMathOperator*{\cov}{cov} \DeclareMathOperator*{\var}{var} \DeclareMathOperator{\Var}{Var\,} \DeclareMathOperator{\Cov}{Cov\,} \DeclareMathOperator{\Prob}{Prob} \newcommand{\independent}{\perp \!\!\! \perp} \DeclareMathOperator{\Post}{Post} \DeclareMathOperator{\Pre}{Pre} \DeclareMathOperator{\Mid}{\,\vert\,} \DeclareMathOperator{\post}{post} \DeclareMathOperator{\pre}{pre} \]

The difference-in-differences design is an early quasi-experimental identification strategy for estimating causal effects that predates the randomized experiment by roughly eighty-five years. It has become the single most popular research design in the quantitative social sciences, and as such, it merits careful study by researchers everywhere.1 In this chapter, I will explain this popular and important research design both in its simplest form, where a group of units is treated at the same time, and the more common form, where groups of units are treated at different points in time. My focus will be on the identifying assumptions needed for estimating treatment effects, including several practical tests and robustness exercises commonly performed, and I will point you to some of the work on difference-in-differences design (DD) being done at the frontier of research. I have included several replication exercises as well.

When thinking about situations in which a difference-in-differences design can be used, one usually tries to find an instance where a consequential treatment was given to some people or units but denied to others “haphazardly.” This is sometimes called a “natural experiment” because it is based on naturally occurring variation in some treatment variable that affects only some units over time. All good difference-in-differences designs are based on some kind of natural experiment. And one of the most interesting natural experiments was also one of the first difference-in-differences designs. This is the story of how John Snow convinced the world that cholera was transmitted by water, not air, using an ingenious natural experiment (Snow 1855).

Cholera is a vicious disease that attacks victims suddenly, with acute symptoms such as vomiting and diarrhea. In the nineteenth century, it was usually fatal. There were three main epidemics that hit London, and like a tornado, they cut a path of devastation through the city. Snow, a physician, watched as tens of thousands suffered and died from a mysterious plague. Doctors could not help the victims because they were mistaken about the mechanism that caused cholera to spread between people.

The majority medical opinion about cholera transmission at that time was miasma, which said diseases were spread by microscopic poisonous particles that infected people by floating through the air. These particles were thought to be inanimate, and because microscopes at that time had incredibly poor resolution, it would be years before microorganisms would be seen. Treatments, therefore, tended to be designed to stop poisonous dirt from spreading through the air. But tried and true methods like quarantining the sick were strangely ineffective at slowing down this plague.

John Snow worked in London during these epidemics. Originally, Snow—like everyone—accepted the miasma theory and tried many ingenious approaches based on the theory to block these airborne poisons from reaching other people. He went so far as to cover the sick with burlap bags, for instance, but the disease still spread. People kept getting sick and dying. Faced with the theory’s failure to explain cholera, he did what good scientists do—he changed his mind and began to look for a new explanation.

Snow developed a novel theory about cholera in which the active agent was not an inanimate particle but was rather a living organism. This microorganism entered the body through food and drink, flowed through the alimentary canal where it multiplied and generated a poison that caused the body to expel water. With each evacuation, the organism passed out of the body and, importantly, flowed into England’s water supply. People unknowingly drank contaminated water from the Thames River, which caused them to contract cholera. As they did, they would evacuate with vomit and diarrhea, which would flow into the water supply again and again, leading to new infections across the city. This process repeated through a multiplier effect which was why cholera would hit the city in epidemic waves.

Snow’s years of observing the clinical course of the disease led him to question the usefulness of miasma to explain cholera. While these were what we would call “anecdote,” the numerous observations and imperfect studies nonetheless shaped his thinking. Here are just a few of the observations that puzzled him. He noticed that cholera transmission tended to follow human commerce. A sailor on a ship from a cholera-free country who arrived at a cholera-stricken port would only get sick after landing or taking on supplies; he would not get sick if he remained docked. Cholera hit the poorest communities worst, and those people were the very same people who lived in the most crowded housing with the worst hygiene. He might find two apartment buildings next to one another, one would be heavily hit with cholera, but strangely the other one wouldn’t. He then noticed that the first building would be contaminated by runoff from privies but the water supply in the second building was cleaner. While these observations weren’t impossible to reconcile with miasma, they were definitely unusual and didn’t seem obviously consistent with miasma.

Snow tucked away more and more anecdotal evidence like this. But, while this evidence raised some doubts in his mind, he was not convinced. He needed a smoking gun if he were to eliminate all doubt that cholera was spread by water, not air. But where would he find that evidence? More importantly, what would evidence like that even look like?

Let’s imagine the following thought experiment. If Snow was a dictator with unlimited wealth and power, how could he test his theory that cholera is waterborne? One thing he could do is flip a coin over each household member—heads you drink from the contaminated Thames, tails you drink from some uncontaminated source. Once the assignments had been made, Snow could simply compare cholera mortality between the two groups. If those who drank the clean water were less likely to contract cholera, then this would suggest that cholera was waterborne.

Knowledge that physical randomization could be used to identify causal effects was still eighty-five years away. But there were other issues besides ignorance that kept Snow from physical randomization. Experiments like the one I just described are also impractical, infeasible, and maybe even unethical—which is why social scientists so often rely on natural experiments that mimic important elements of randomized experiments. But what natural experiment was there? Snow needed to find a situation where uncontaminated water had been distributed to a large number of people as if by random chance, and then calculate the difference between those who did and did not drink contaminated water. Furthermore, the contaminated water would need to be allocated to people in ways that were unrelated to the ordinary determinants of cholera mortality, such as hygiene and poverty, implying a degree of balance on covariates between the groups. And then he remembered—a potential natural experiment in London a year earlier had reallocated clean water to citizens of London. Could this work?

In the 1800s, several water companies served different areas of the city. Some neighborhoods were even served by more than one company. They took their water from the Thames, which had been polluted by victims’ evacuations via runoff. But in 1849, the Lambeth water company had moved its intake pipes upstream higher up the Thames, above the main sewage discharge point, thus giving its customers uncontaminated water. They did this to obtain cleaner water, but it had the added benefit of being too high up the Thames to be infected with cholera from the runoff. Snow seized on this opportunity. He realized that it had given him a natural experiment that would allow him to test his hypothesis that cholera was waterborne by comparing the households. If his theory was right, then the Lambeth houses should have lower cholera death rates than some other set of households whose water was infected with runoff—what we might call today the explicit counterfactual. He found his explicit counterfactual in the Southwark and Vauxhall Waterworks Company.

Unlike Lambeth, the Southwark and Vauxhall Waterworks Company had not moved their intake point upstream, and Snow spent an entire book documenting similarities between the two companies’ households. For instance, sometimes their service cut an irregular path through neighborhoods and houses such that the households on either side were very similar; the only difference being they drank different water with different levels of contamination from runoff. Insofar as the kinds of people that each company serviced were observationally equivalent, then perhaps they were similar on the relevant unobservables as well.

Snow meticulously collected data on household enrollment in water supply companies, going door to door asking household heads the name of their utility company. Sometimes these individuals didn’t know, though, so he used a saline test to determine the source himself (Coleman 2019). He matched those data with the city’s data on the cholera death rates at the household level. It was in many ways as advanced as any study we might see today for how he carefully collected, prepared, and linked a variety of data sources to show the relationship between water purity and mortality. But he also displayed scientific ingenuity for how he carefully framed the research question and how long he remained skeptical until the research design’s results convinced him otherwise. After combining everything, he was able to generate extremely persuasive evidence that influenced policymakers in the city.2

Snow wrote up all of his analysis in a manuscript entitled On the Mode of Communication of Cholera (Snow 1855). Snow’s main evidence was striking, and I will discuss results based on Table XII and Table IX (not shown) in Table 9.1. The main difference between my version and his version of Table XII is that I will use his data to estimate a treatment effect using difference-in-differences.

| Company name | 1849 | 1854 |
|---|---|---|
| Southwark and Vauxhall | 135 | 147 |
| Lambeth | 85 | 19 |

In 1849, there were 135 cases of cholera per 10,000 households at Southwark and Vauxhall and 85 for Lambeth. But in 1854, there were 147 per 10,000 in Southwark and Vauxhall, whereas Lambeth’s cholera cases per 10,000 households fell to 19.

While Snow did not explicitly calculate the difference-in-differences, the ability to do so was there (Coleman 2019). If we difference Lambeth’s 1854 value from its 1849 value, followed by the same after and before differencing for Southwark and Vauxhall, we can calculate an estimate of the ATT equaling 78 fewer deaths per 10,000. While Snow would go on to produce evidence showing cholera deaths were concentrated around a pump on Broad Street contaminated with cholera, he allegedly considered the simple difference-in-differences the more convincing test of his hypothesis.
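The arithmetic behind that figure can be sketched in a few lines. This is only an illustration of the two differencing steps using the four numbers from the table; the variable names are my own.

```python
# Cholera deaths per 10,000 households, taken from the table above.
deaths = {
    ("Lambeth", 1849): 85,
    ("Lambeth", 1854): 19,
    ("Southwark and Vauxhall", 1849): 135,
    ("Southwark and Vauxhall", 1854): 147,
}

# First differences: after minus before, within each company.
lambeth_change = deaths[("Lambeth", 1854)] - deaths[("Lambeth", 1849)]
sv_change = (
    deaths[("Southwark and Vauxhall", 1854)]
    - deaths[("Southwark and Vauxhall", 1849)]
)

# Second difference: the treated company's change minus the comparison's change.
dd_estimate = lambeth_change - sv_change

print(lambeth_change, sv_change, dd_estimate)  # -66 12 -78
```

Lambeth's rate fell by 66 while Southwark and Vauxhall's rose by 12, so the difference-in-differences is 78 fewer deaths per 10,000.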

The importance of the work Snow undertook to understand the causes of cholera in London cannot be overstated. It not only lifted our ability to estimate causal effects with observational data, it advanced science and ultimately saved lives. Of Snow’s work on the cause of cholera transmission, Freedman (1991) states:

The force of Snow’s argument results from the clarity of the prior reasoning, the bringing together of many different lines of evidence, and the amount of shoe leather Snow was willing to use to get the data. Snow did some brilliant detective work on nonexperimental data. What is impressive is not the statistical technique but the handling of the scientific issues. He made steady progress from shrewd observation through case studies to analyze ecological data. In the end, he found and analyzed a natural experiment. (p.298)

Let’s look at this example using some tables, which hopefully will help give you an idea of the intuition behind DD, as well as some of its identifying assumptions.3 Assume that the intervention is clean water, which I’ll write as \(D\), and our objective is to estimate \(D\)’s causal effect on cholera deaths. Let cholera deaths be represented by the variable \(Y\). Can we identify the causal effect of D if we just compare the post-treatment 1854 Lambeth cholera death values to that of the 1854 Southwark and Vauxhall values? This is in many ways an obvious choice, and in fact, it is one of the more common naive approaches to causal inference. After all, we have a control group, don’t we? Why can’t we just compare a treatment group to a control group? Let’s look and see.

One of the things we immediately must remember is that the simple difference in outcomes, which is all we are doing here, only collapses to the ATE if the treatment has been randomized. But it is never randomized in the real world, where most if not all choices made by real people are endogenous to potential outcomes. Let’s now represent the differences between Lambeth and Southwark and Vauxhall with fixed level differences, or fixed effects, represented by \(L\) and \(SV\). Both are unobserved, unique to each company, and fixed over time. What these fixed effects mean is that even if Lambeth hadn’t changed its water source, there would still be something determining cholera deaths, which is just the time-invariant unique differences between the two companies as they relate to cholera deaths in 1854.

| Company | Outcome |
|---|---|
| Lambeth | \(Y=L + D\) |
| Southwark and Vauxhall | \(Y=SV\) |

When we make a simple comparison between Lambeth and Southwark and Vauxhall, we get an estimated causal effect equalling \(D+(L-SV)\). Notice the second term, \(L-SV\). We’ve seen this before. It’s the selection bias we found from the decomposition of the simple difference in outcomes from earlier in the book.

Okay, so say we realize that we cannot simply make cross-sectional comparisons between two units because of selection bias. Surely, though, we can compare a unit to itself? This is sometimes called an interrupted time series. Let’s consider that simple before-and-after difference for Lambeth now.

| Company | Time | Outcome |
|---|---|---|
| Lambeth | Before | \(Y=L\) |
| | After | \(Y=L + (T + D)\) |

While this procedure successfully eliminates the Lambeth fixed effect (unlike the cross-sectional difference), it doesn’t give me an unbiased estimate of \(D\) because differences can’t eliminate the natural changes in the cholera deaths over time. Recall, these events were oscillating in waves. I can’t compare Lambeth before and after (\(T+D\)) because of \(T\), which is an omitted variable.

The intuition of the DD strategy is remarkably simple: combine these two simpler approaches so the selection bias and the effect of time are, in turns, eliminated. Let’s look at it in the following table.

| Companies | Time | Outcome | \(D_1\) | \(D_2\) |
|---|---|---|---|---|
| Lambeth | Before | \(Y=L\) | | |
| | After | \(Y=L + T + D\) | \(T+D\) | |
| | | | | \(D\) |
| Southwark and Vauxhall | Before | \(Y=SV\) | | |
| | After | \(Y=SV + T\) | \(T\) | |

The first difference, \(D_1\), does the simple before-and-after difference. This ultimately eliminates the unit-specific fixed effects. Then, once those differences are made, we difference the differences (hence the name) to get the unbiased estimate of \(D\).
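To make the three comparisons concrete, here is a minimal sketch that plugs made-up numbers into the fixed-effects setup. The values of `L`, `SV`, `T`, and `D` are hypothetical and chosen only for illustration; they are not Snow's data.

```python
# Hypothetical values, chosen only for illustration.
L, SV = 90.0, 130.0  # time-invariant company fixed effects
T = 10.0             # change over time common to both companies
D = -70.0            # true treatment effect of clean water

outcomes = {
    ("Lambeth", "before"): L,
    ("Lambeth", "after"): L + T + D,
    ("SV", "before"): SV,
    ("SV", "after"): SV + T,
}

# Cross-sectional comparison in the post period: D + (L - SV).
cross_section = outcomes[("Lambeth", "after")] - outcomes[("SV", "after")]

# Before-and-after comparison for Lambeth alone: T + D.
d1_lambeth = outcomes[("Lambeth", "after")] - outcomes[("Lambeth", "before")]

# Before-and-after comparison for Southwark and Vauxhall: T.
d1_sv = outcomes[("SV", "after")] - outcomes[("SV", "before")]

# Difference-in-differences: (T + D) - T = D.
d2 = d1_lambeth - d1_sv

print(cross_section, d1_lambeth, d1_sv, d2)  # -110.0 -60.0 10.0 -70.0
```

Only the last quantity recovers `D`. The cross-sectional comparison is contaminated by `L - SV`, and the before-and-after comparison is contaminated by `T`.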

But there’s a key assumption with a DD design, and that assumption is discernible even in this table. We are assuming that there are no time-variant company-specific unobservables: nothing unobserved in Lambeth households that is changing between these two periods and that also determines cholera deaths. This is equivalent to assuming that \(T\) is the same for all units. And we call this the parallel trends assumption. We will discuss this assumption repeatedly as the chapter proceeds, as it is the most important assumption in the design’s engine. If you can buy off on the parallel trends assumption, then DD will identify the causal effect.

DD is a powerful, yet amazingly simple design. Using repeated observations on a treatment and control unit (usually several units), we can eliminate the unobserved heterogeneity to provide a credible estimate of the average treatment effect on the treated (ATT) by transforming the data in very specific ways. But when and why does this process yield the correct answer? Turns out, there is more to it than meets the eye. And it is imperative on the front end that you understand what’s under the hood so that you can avoid conceptual errors about this design.

The cholera case is a particular kind of DD design that Goodman-Bacon (2019) calls the \(2\times 2\) DD design. The \(2\times 2\) DD design has a treatment group \(k\) and untreated group \(U\). There is a pre-period for the treatment group, \(\pre(k)\); a post-period for the treatment group, \(\post(k)\); a pre-treatment period for the untreated group, \(\pre(U)\); and a post-period for the untreated group, \(\post(U)\). So: \[ \widehat{\delta}^{2\times 2}_{kU} = \bigg ( \overline{y}_k^{\post(k)} - \overline{y}_k^{\pre(k)} \bigg ) - \bigg ( \overline{y}_U^{\post(k)} - \overline{y}_U^{\pre(k)} \bigg ) \] where \(\widehat{\delta}^{2\times 2}_{kU}\) is the estimated ATT for group \(k\), and \(\overline{y}\) is the sample mean for that particular group in a particular time period. The first term in parentheses differences the treatment group, \(k\), after minus before; the second differences the untreated group, \(U\), after minus before. And once those quantities are obtained, we difference the second term from the first.

But this is simply the mechanics of calculations. What exactly is this estimated parameter mapping onto? To understand that, we must convert these sample averages into conditional expectations of potential outcomes. But that is easy to do when working with sample averages, as we will see here. First let’s rewrite this as a conditional expectation. \[ \widehat{\delta}^{2\times 2}_{kU} = \bigg(E\big[Y_k \Mid \Post\big] - E\big[Y_k \Mid\Pre\big]\bigg)- \bigg(E\big[Y_U \Mid \Post\big] - E\big[Y_U \Mid \Pre\big]\bigg) \]

Now let’s use the switching equation, which transforms historical quantities of \(Y\) into potential outcomes. As we’ve done before, we’ll do a little trick where we add zero to the right-hand side so that we can use those terms to help illustrate something important. \[ \begin{align} &\widehat{\delta}^{2\times 2}_{kU} = \bigg ( \underbrace{E\big[Y^1_k \Mid \Post\big] - E\big[Y^0_k \Mid \Pre\big] \bigg ) - \bigg(E\big[Y^0_U \Mid \Post\big] - E\big[ Y^0_U \Mid\Pre\big]}_{\text{Switching equation}} \bigg) \\ &+ \underbrace{E\big[Y_k^0 \Mid\Post\big] - E\big[Y^0_k \Mid \Post\big]}_{\text{Adding zero}} \end{align} \]

Now we simply rearrange these terms to get the decomposition of the \(2\times 2\) DD in terms of conditional expected potential outcomes. \[ \begin{align} &\widehat{\delta}^{2\times 2}_{kU} = \underbrace{E\big[Y^1_k \Mid\Post\big] - E\big[Y^0_k \Mid \Post\big]}_{\text{ATT}} \\ &+\Big[\underbrace{E\big[Y^0_k \Mid \Post\big] - E\big[Y^0_k \Mid\Pre\big] \Big] - \Big[E\big[Y^0_U \Mid\Post\big] - E\big[Y_U^0 \Mid\Pre\big] }_{\text{Non-parallel trends bias in $2\times 2$ case}} \Big] \end{align} \]

Now, let’s study this last term closely. This simple \(2\times 2\) difference-in-differences will isolate the ATT (the first term) if and only if the second term zeroes out. But why would this second term be zero? It would equal zero if the first difference involving the treatment group, \(k\), equaled the second difference involving the untreated group, \(U\).

But notice the term in the second line. Notice anything strange about it? The object of interest is \(Y^0\), which is some outcome in a world without the treatment. But it’s the post period, and in the post period, \(Y=Y^1\) not \(Y^0\) by the switching equation. Thus, the first term is counterfactual. And as we’ve said over and over, counterfactuals are not observable. This bottom line is often called the parallel trends assumption and it is by definition untestable since we cannot observe this counterfactual conditional expectation. We will return to this again, but for now I simply present it for your consideration.
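A small numerical sketch may help fix the decomposition. All five conditional expectations below are hypothetical numbers that I made up, including the counterfactual one, which could never be computed from real data.

```python
# Hypothetical population means, chosen only for illustration.
EY1_k_post = 30.0  # treated group, post period, with treatment (observed)
EY0_k_post = 25.0  # treated group, post period, without treatment (counterfactual)
EY0_k_pre = 20.0   # treated group, pre period (observed)
EY0_U_post = 18.0  # untreated group, post period (observed)
EY0_U_pre = 15.0   # untreated group, pre period (observed)

# What the 2x2 DD computes, using only observed quantities.
dd = (EY1_k_post - EY0_k_pre) - (EY0_U_post - EY0_U_pre)

# The two pieces of the decomposition.
att = EY1_k_post - EY0_k_post
trend_k = EY0_k_post - EY0_k_pre  # counterfactual trend of the treated group
trend_U = EY0_U_post - EY0_U_pre  # observed trend of the untreated group
bias = trend_k - trend_U

print(dd, att, bias)  # 7.0 5.0 2.0
```

Here the treated group's untreated trend (5) differs from the untreated group's trend (3), so the DD of 7 overstates the ATT of 5 by exactly the non-parallel trends bias of 2. Setting `EY0_k_post = 23.0` makes the trends parallel, and the DD then equals the ATT.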

Now I’d like to talk about more explicit economic content, and the minimum wage is as good a topic as any. The modern use of DD was brought into the social sciences through esteemed labor economist Orley Ashenfelter (1978). His study was no doubt influential to his advisee, David Card, arguably the greatest labor economist of his generation. Card would go on to use the method in several pioneering studies, such as Card (1990). But I will focus on one in particular—his now-classic minimum wage study (Card and Krueger 1994).

Card and Krueger (1994) is an infamous study both because of its use of an explicit counterfactual for estimation, and because the study challenges many people’s common beliefs about the negative effects of the minimum wage. It launched a massive back-and-forth minimum-wage literature that continues to this day.4 So controversial was this study that James Buchanan, the Nobel Prize winner, called those influenced by Card and Krueger (1994) “camp following whores” in a letter to the editor of the Wall Street Journal (Buchanan 1996).5

Suppose you are interested in the effect of minimum wages on employment. Theoretically, you might expect that in competitive labor markets, an increase in the minimum wage would move us up a downward-sloping demand curve, causing employment to fall. But in labor markets characterized by monopsony, minimum wages can increase employment. Therefore, there are strong theoretical reasons to believe that the effect of the minimum wage on employment is ultimately an empirical question depending on many local contextual factors. This is where Card and Krueger (1994) entered. Could they uncover whether minimum wages were ultimately harmful or helpful in some local economy?

It’s always useful to start these questions with a simple thought experiment: if you had a billion dollars, complete discretion and could run a randomized experiment, how would you test whether minimum wages increased or decreased employment? You might go across the hundreds of local labor markets in the United States and flip a coin—heads, you raise the minimum wage; tails, you keep it at the status quo. As we’ve done before, these kinds of thought experiments are useful for clarifying both the research design and the causal question.

Lacking a randomized experiment, Card and Krueger (1994) decided on a next-best solution by comparing two neighboring states before and after a minimum-wage increase. It was essentially the same strategy that Snow used in his cholera study and a strategy that economists continue to use, in one form or another, to this day (Dube, Lester, and Reich 2010).

New Jersey was set to experience an increase in the state minimum wage from $4.25 to $5.05 in April 1992, but neighboring Pennsylvania’s minimum wage was staying at $4.25. Realizing they had an opportunity to evaluate the effect of the minimum-wage increase by comparing the two states before and after, they fielded a survey of about four hundred fast-food restaurants in both states—once in February 1992 (before) and again in November (after). The responses from this survey were then used to measure the outcomes they cared about (i.e., employment). As we saw with Snow, we see again here that shoe leather is as important as any statistical technique in causal inference.

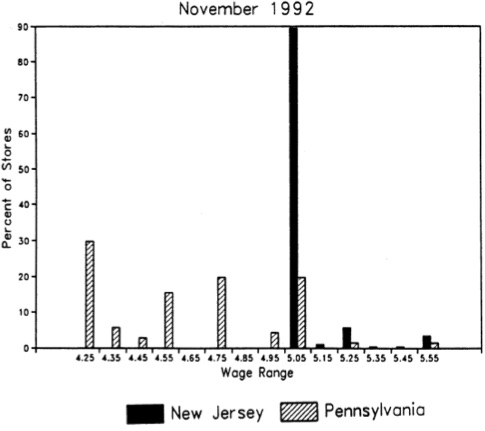

Let’s look at whether the minimum-wage hike in New Jersey in fact raised the minimum wage by examining the distribution of wages in the fast food stores they surveyed. Figure 9.1 shows the distribution of wages in November 1992 after the minimum-wage hike. As can be seen, the minimum-wage hike was binding, evidenced by the mass of wages at the minimum wage in New Jersey.

As a caveat, notice how effective this is at convincing the reader that the minimum wage in New Jersey was binding. This piece of data visualization is not a trivial, or even optional, strategy to be taken in studies such as this. Even John Snow presented carefully designed maps of the distribution of cholera deaths throughout London. Beautiful pictures displaying the “first stage” effect of the intervention on the treatment are crucial in the rhetoric of causal inference, and few have done it as well as Card and Krueger.

Let’s remind ourselves what we’re after—the average causal effect of the minimum-wage hike on employment, or the ATT. Using our decomposition of the \(2\times 2\) DD from earlier, we can write it out as: \[ \begin{align} &\widehat{\delta}^{2\times 2}_{NJ,PA} = \underbrace{E\big[Y^1_{NJ} \Mid\Post\big] - E\big[Y^0_{NJ} \Mid\Post\big]}_{\text{ATT}} \\ &+ \Big[\underbrace{E\big[Y^0_{NJ} \Mid \Post\big] - E\big[Y^0_{NJ} \Mid \Pre\big] \Big]-\Big[E\big[Y^0_{PA} \Mid\Post\big] - E\big[Y_{PA}^0 \Mid\Pre\big] }_{\text{Non-parallel trends bias}} \Big] \end{align} \]

Again, we see the key assumption: the parallel-trends assumption, which is represented by the first difference in the second line. Insofar as parallel trends holds in this situation, then the second term goes to zero, and the \(2\times 2\) DD collapses to the ATT.

The \(2\times 2\) DD requires differencing employment in NJ and PA, then differencing those first differences. This set of steps estimates the true ATT so long as the parallel-trends bias is zero. When that is true, \(\widehat{\delta}^{2\times 2}\) is equal to \(\delta^{ATT}\). If this bottom line is not zero, though, then the simple \(2\times 2\) suffers from unknown bias—could bias it upwards, could bias it downwards, could flip the sign entirely. Table 9.2 shows the results of this exercise from Card and Krueger (1994).

| Dependent variable | PA stores | NJ stores | NJ – PA |
|---|---|---|---|
| FTE before | 23.33 | 20.44 | \(-2.89\) |
| | (1.35) | (0.51) | (1.44) |
| FTE after | 21.17 | 21.03 | \(-0.14\) |
| | (0.94) | (0.52) | (1.07) |
| Change in mean FTE | \(-2.16\) | 0.59 | 2.76 |
| | (1.25) | (0.54) | (1.36) |

Standard errors in parentheses.

Here you see the result that surprised many people. Card and Krueger (1994) estimate an ATT of +2.76 additional mean full-time-equivalent employment, as opposed to some negative value which would be consistent with competitive input markets. Herein we get Buchanan’s frustration with the paper, which is based mainly on a particular model he had in mind, rather than a criticism of the research design the authors used.

While differences in sample averages will identify the ATT under the parallel trends assumption, we may want to use multivariate regression instead. For instance, if you need to avoid omitted variable bias through controlling for endogenous covariates that vary over time, then you may want to use regression. Such strategies are another way of saying that you will need to close some known critical backdoor. Another reason to use regression is that by controlling for more appropriate covariates, you can reduce residual variance and improve the precision of your DD estimate.

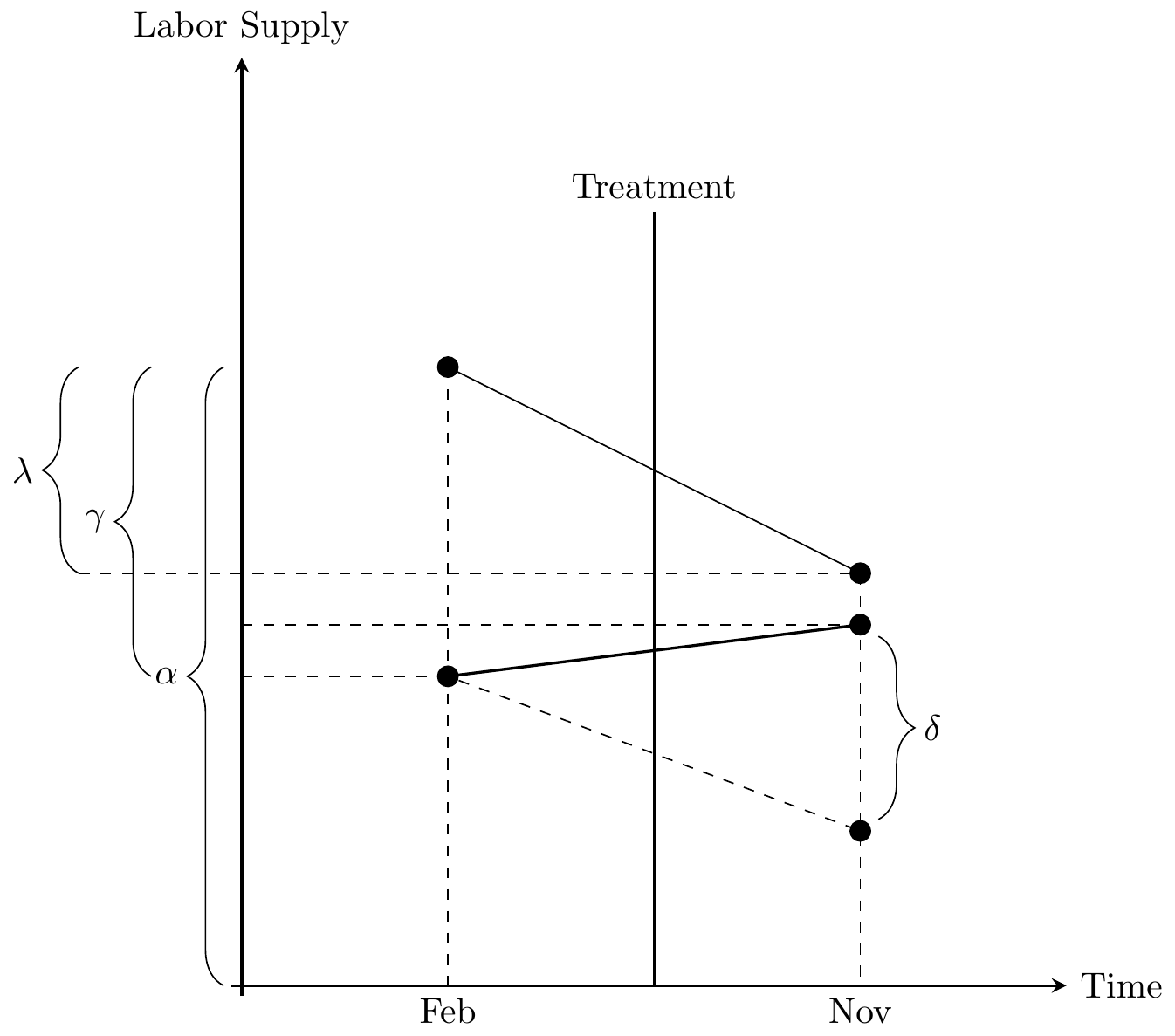

Using the switching equation, and assuming a constant state fixed effect and time fixed effect, we can write out a simple regression model estimating the causal effect of the minimum wage on employment, \(Y\). This simple \(2\times 2\) is estimated with the following equation: \[ Y_{its} = \alpha + \gamma NJ_s + \lambda D_t + \delta (NJ \times D)_{st} + \varepsilon_{its} \] NJ is a dummy equal to 1 if the observation is from NJ, and \(D\) is a dummy equal to 1 if the observation is from November (the post period). This equation takes the following values, which I will list in order according to setting the dummies equal to one and/or zero:

PA Pre: \(\alpha\)

PA Post: \(\alpha + \lambda\)

NJ Pre: \(\alpha + \gamma\)

NJ Post: \(\alpha+\gamma +\lambda+\delta\)
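As a check on this mapping, the following sketch fits the regression by ordinary least squares on a tiny made-up dataset and compares \(\delta\) with the difference-in-differences of the four cell means. The eight observations are hypothetical, and the small `solve` helper is my own stand-in for a linear algebra library so that the example needs nothing beyond the standard library.

```python
# Hypothetical store-level observations: (nj, post, employment).
data = [
    (0, 0, 24.0), (0, 0, 22.0),  # PA, pre
    (0, 1, 21.0), (0, 1, 22.0),  # PA, post
    (1, 0, 20.0), (1, 0, 21.0),  # NJ, pre
    (1, 1, 21.5), (1, 1, 20.5),  # NJ, post
]

def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        # Partial pivoting: move the largest remaining entry onto the diagonal.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

# Design matrix columns: constant, NJ, D (post), NJ x D.
X = [[1.0, nj, post, nj * post] for nj, post, _ in data]
y = [obs[2] for obs in data]

# OLS via the normal equations: (X'X) beta = X'y.
k = 4
xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
alpha, gamma, lam, delta = solve(xtx, xty)

def cell_mean(nj, post):
    """Sample mean of employment for one state-by-period cell."""
    vals = [v for a, b, v in data if a == nj and b == post]
    return sum(vals) / len(vals)

dd_means = (cell_mean(1, 1) - cell_mean(1, 0)) - (cell_mean(0, 1) - cell_mean(0, 0))

print(round(alpha, 6), round(gamma, 6), round(lam, 6), round(delta, 6))
print(round(dd_means, 6))
```

Because the model is saturated (four parameters for four cells), the fitted values reproduce the cell means, so \(\alpha\) is the PA pre-period mean and \(\delta\) coincides with the difference-in-differences of sample means.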

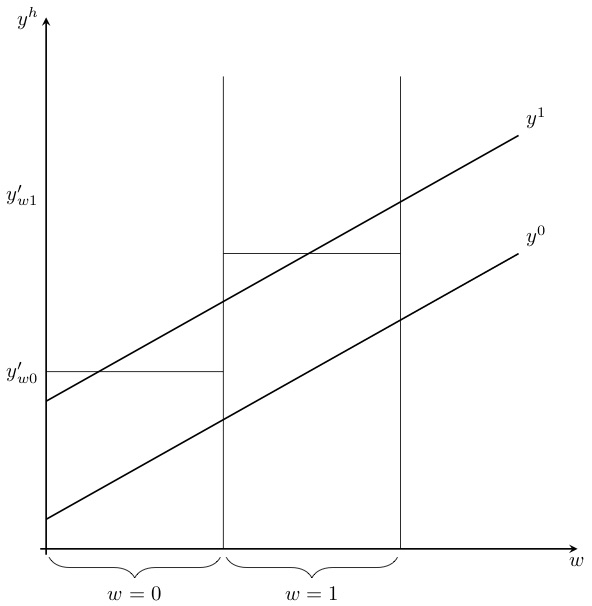

We can visualize the \(2\times 2\) DD parameter in Figure 9.2.

Now before we hammer the parallel trends assumption for the billionth time, I wanted to point something out here which is a bit subtle. But do you see the \(\delta\) parameter floating in the air above the November line in Figure 9.2? This is the difference between a counterfactual level of employment (the bottom black circle in November on the negatively sloped dashed line) and the actual level of employment (the above black circle in November on the positively sloped solid line) for New Jersey. It is therefore the ATT, because the ATT is equal to \[ \delta=E[Y^1_{NJ,\Post}] - E[Y^0_{NJ,\Post}] \] wherein the first is observed (because \(Y=Y^1\) in the post period) and the latter is unobserved for the same reason.

Now here’s the kicker: OLS will always estimate that \(\delta\) line even if the counterfactual slope had been something else. That’s because OLS uses Pennsylvania’s change over time to project a point starting at New Jersey’s pre-treatment value. When OLS has filled in that missing amount, the parameter estimate is equal to the difference between the observed post-treatment value and that projected value based on the slope of Pennsylvania regardless of whether that Pennsylvania slope was the correct benchmark for measuring New Jersey’s counterfactual slope. OLS always estimates an effect size using the slope of the untreated group as the counterfactual, regardless of whether that slope is in fact the correct one.
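The projection logic can be written out with the mean FTE values from Card and Krueger's table. This is a sketch of the arithmetic only, taking the Pennsylvania means to be 23.33 before and 21.17 after, which are the values consistent with the differences reported in Table 9.2.

```python
# Mean full-time-equivalent employment per store.
nj_pre, nj_post = 20.44, 21.03
pa_pre, pa_post = 23.33, 21.17  # values implied by the reported differences

# Pennsylvania's observed change over time.
pa_slope = pa_post - pa_pre

# Project New Jersey's counterfactual post-period level by starting at its
# pre-treatment value and applying Pennsylvania's slope.
nj_projected = nj_pre + pa_slope

# The estimated effect is the gap between observed and projected NJ employment.
delta_hat = nj_post - nj_projected

print(round(pa_slope, 2), round(nj_projected, 2), round(delta_hat, 2))
```

The projected level is about 18.28 and the implied effect about 2.75, which matches the published 2.76 up to what is presumably rounding in the reported means. Nothing in this calculation checks whether Pennsylvania's slope is the right counterfactual for New Jersey; it is simply assumed.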

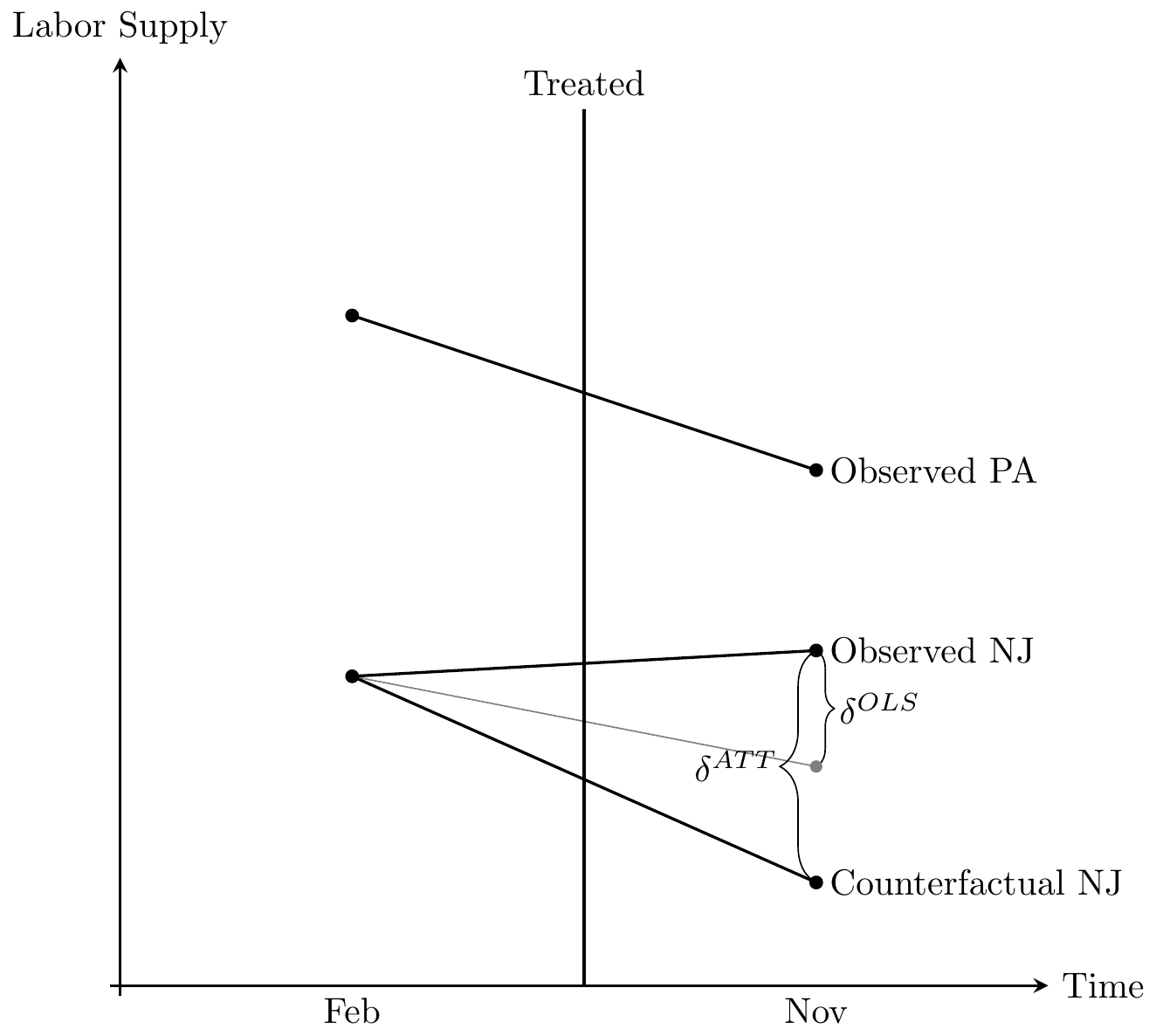

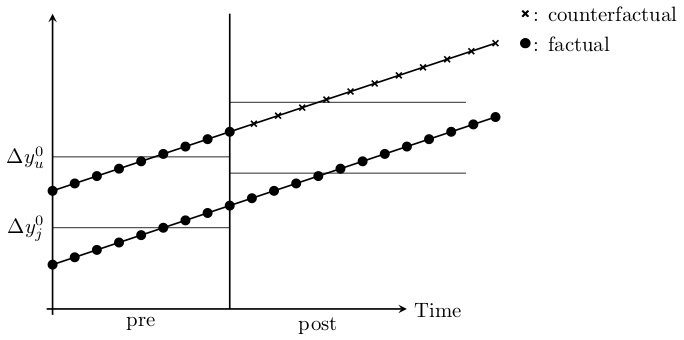

But, see what happens when Pennsylvania’s slope is equal to New Jersey’s counterfactual slope? Then that Pennsylvania slope used in regression will mechanically estimate the ATT. In other words, only when the Pennsylvania slope is the counterfactual slope for New Jersey will OLS coincidentally identify that true effect. Let’s see that here in Figure 9.3.

Notice the two \(\delta\) listed: on the left is the true parameter \(\delta^{ATT}\). On the right is the one estimated by OLS, \(\widehat{\delta}^{OLS}\). The falling solid line is the observed Pennsylvania change, whereas the falling solid line labeled “observed NJ” is the change in observed employment for New Jersey between the two periods.

The true causal effect, \(\delta^{ATT}\), is the line from the “observed NJ” point to the “counterfactual NJ” point. But OLS does not estimate this line. Instead, OLS uses the falling Pennsylvania line to draw a parallel line from the February NJ point, which is shown in thin gray. And OLS simply estimates the vertical line from the observed NJ point to the post NJ point, which as can be seen underestimates the true causal effect.

Here we see the importance of the parallel trends assumption. The only situation under which the OLS estimate equals the ATT is when the counterfactual NJ just coincidentally lined up with the gray OLS line, which is a line parallel to the slope of the Pennsylvania line. Herein lies the source of understandable skepticism of many who have been paying attention: why should we base estimation on this belief in a coincidence? After all, this is a counterfactual trend, and therefore it is unobserved, given it never occurred. Maybe the counterfactual would’ve been the gray line, but maybe it would’ve been some other unknown line. It could’ve been anything—we just don’t know.

This is why I like to tell people that the parallel trends assumption is actually just a restatement of the strict exogeneity assumption we discussed in the panel chapter. What we are saying when we appeal to parallel trends is that we have found a control group who approximates the traveling path of the treatment group and that the treatment is not endogenous. If it is endogenous, then parallel trends is always violated because in counterfactual the treatment group would’ve diverged anyway, regardless of the treatment.

Before we see the number of tests that economists have devised to provide some reasonable confidence in the belief of the parallel trends, I’d like to quickly talk about standard errors in a DD design.

Many studies employing DD strategies use data from many years—not just one pre-treatment and one post-treatment period like Card and Krueger (1994). The variables of interest in many of these setups only vary at a group level, such as the state, and outcome variables are often serially correlated. In Card and Krueger (1994), it is very likely for instance that employment in each state is not only correlated within the state but also serially correlated. Bertrand, Duflo, and Mullainathan (2004) point out that the conventional standard errors often severely understate the standard deviation of the estimators, and so standard errors are biased downward, “too small,” and therefore overreject the null hypothesis. Bertrand, Duflo, and Mullainathan (2004) propose the following solutions:

1. Block bootstrapping standard errors.
2. Aggregating the data into one pre and one post period.
3. Clustering standard errors at the group level.

If the block is a state, then you simply sample states with replacement for bootstrapping. Block bootstrap is straightforward and only requires a little programming involving loops and storing the estimates. As the mechanics are similar to that of randomization inference, I leave it to the reader to think about how they might tackle this.
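For readers who want a starting point, here is one way the block bootstrap could be sketched. Everything in it is an assumption made for illustration: the panel is simulated, the function names `dd_estimate` and `block_bootstrap_se` are my own, and the estimator is a plain \(2 \times 2\) difference in means rather than a full regression. The key step is that whole states, with their entire time series, are sampled with replacement.

```python
import numpy as np

rng = np.random.default_rng(0)

# Simulated state-by-year panel (all numbers hypothetical).
n_states, n_years, first_post_year = 40, 10, 5
treated = np.arange(n_states) < n_states // 2      # first half of states treated
post = np.arange(n_years) >= first_post_year
true_effect = 2.0

state_fe = rng.normal(size=(n_states, 1))
year_fe = rng.normal(size=(1, n_years))
# AR(1) errors within each state, so outcomes are serially correlated.
errors = np.zeros((n_states, n_years))
errors[:, 0] = rng.normal(size=n_states)
for t in range(1, n_years):
    errors[:, t] = 0.8 * errors[:, t - 1] + rng.normal(size=n_states)
y = state_fe + year_fe + true_effect * np.outer(treated, post) + errors


def dd_estimate(y, treated, post):
    """2x2 DD: mean pre-to-post change of treated states minus that of controls."""
    change = y[:, post].mean(axis=1) - y[:, ~post].mean(axis=1)
    return change[treated].mean() - change[~treated].mean()


def block_bootstrap_se(y, treated, post, reps=500, rng=rng):
    """Resample whole states (blocks) with replacement and re-estimate each time."""
    n = y.shape[0]
    draws = []
    while len(draws) < reps:
        idx = rng.integers(0, n, size=n)           # sample states with replacement
        if treated[idx].all() or (~treated[idx]).all():
            continue                               # a draw needs both groups
        draws.append(dd_estimate(y[idx], treated[idx], post))
    return np.std(draws, ddof=1)


point = dd_estimate(y, treated, post)
se = block_bootstrap_se(y, treated, post)
print(f"DD estimate: {point:.3f}, block-bootstrap SE: {se:.3f}")
```

Because each draw keeps a state's years together, the serial correlation within a state is preserved in every bootstrap sample, which is the whole point of using the state as the block.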

Aggregation ignores the time-series dimension altogether, and if there is only one pre and post period and one untreated group, it’s as simple as it sounds. You simply average the groups into one pre and post period, and conduct difference-in-differences on those aggregates. But if you have differential timing, it’s a bit unusual because you will need to partial out state and year fixed effects before turning the analysis into one involving residualization. Essentially, for those common situations where you have multiple treatment time periods (which we discuss later in greater detail), you would regress the outcome onto panel unit and time fixed effects and any covariates. You’d then obtain the residuals for only the treatment group. You then divide the residuals only into a pre and post period; you are essentially at this point ignoring the never-treated groups. And then you regress the residuals on the after dummy. It’s a strange procedure, and does not recover the original point estimate, so I focus instead on the third solution, clustering.

Correct treatment of standard errors sometimes makes the number of groups very small: in Card and Krueger (1994), the number of groups is only two. More often than not, researchers will use the third option (clustering the standard errors by group). I have seen someone do all three of these only once; it’s rare. Most people will present just the clustering solution, most likely because it requires minimal programming.

For clustering, there is no programming required, as most software packages allow for it already. You simply adjust standard errors by clustering at the group level, as we discussed in the earlier chapter, or the level of treatment. For state-level panels, that would mean clustering at the state level, which allows for arbitrary serial correlation in errors within a state over time. This is the most common solution employed.
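Although your software will do this for you, it can help to see what the adjustment is doing under the hood. Below is a minimal sketch of the cluster-robust "sandwich" variance estimator written with NumPy. It is an illustration under stated assumptions, not what any particular package runs: the data are simulated, the function name `cluster_robust_ols` is my own, and I omit the small-sample corrections that most packages apply by default.

```python
import numpy as np


def cluster_robust_ols(X, y, cluster):
    """OLS coefficients with cluster-robust sandwich SEs (no small-sample correction)."""
    XtX_inv = np.linalg.inv(X.T @ X)
    beta = XtX_inv @ X.T @ y
    resid = y - X @ beta
    meat = np.zeros((X.shape[1], X.shape[1]))
    for g in np.unique(cluster):
        rows = cluster == g
        score = X[rows].T @ resid[rows]    # sum of x_i * u_i within the cluster
        meat += np.outer(score, score)
    cov = XtX_inv @ meat @ XtX_inv
    return beta, np.sqrt(np.diag(cov))


rng = np.random.default_rng(3)
n_states, n_years = 40, 10
state = np.repeat(np.arange(n_states), n_years)
year = np.tile(np.arange(n_years), n_states)
treated = (state < n_states // 2).astype(float)
post = (year >= 5).astype(float)

# AR(1) errors within each state (hypothetical data-generating process).
u = np.zeros((n_states, n_years))
u[:, 0] = rng.normal(size=n_states)
for t in range(1, n_years):
    u[:, t] = 0.8 * u[:, t - 1] + rng.normal(size=n_states)
y = 1.0 + 0.5 * treated - 0.3 * post + 2.0 * treated * post + u.ravel()

X = np.column_stack([np.ones_like(y), treated, post, treated * post])
beta, se_cluster = cluster_robust_ols(X, y, state)
# Treating every row as its own cluster gives heteroskedasticity-robust SEs.
_, se_hc0 = cluster_robust_ols(X, y, np.arange(len(y)))

print(f"DD coefficient:        {beta[3]:.3f}")
print(f"SE clustered by state: {se_cluster[3]:.3f}")
print(f"SE ignoring clusters:  {se_hc0[3]:.3f}")
```

Summing the scores within a state before taking the outer product is what lets the errors be arbitrarily correlated over time inside a state.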

Inference in a panel setting is independently an interesting area. When the number of clusters is small, then simple solutions like clustering the standard errors no longer suffice because of a growing false positive problem. In the extreme case with only one treatment unit, the over-rejection rate at a significance of 5% can be as high as 80% in simulations even using the wild bootstrap technique which has been suggested for smaller numbers of clusters (Cameron, Gelbach, and Miller 2008; MacKinnon and Webb 2017). In such extreme cases where there is only one treatment group, I have preferred to use randomization inference following Buchmueller, DiNardo, and Valletta (2011).

Given the critical importance of the parallel trends assumption in identifying causal effects with the DD design, and given that one of the observations needed to evaluate the parallel-trends assumption is not available to the researcher, one might throw up their hands in despair. But economists are stubborn, and they have spent decades devising ways to test whether it’s reasonable to believe in parallel trends. We now discuss the obligatory test for any DD design: the event study. Let’s rewrite the decomposition of the \(2 \times 2\) DD again. \[ \begin{align} &\widehat{\delta}^{2\times 2}_{kU} = \underbrace{E\big[Y^1_{k} \Mid \Post\big] - E\big[Y^0_{k} \Mid \Post\big]}_{\text{ATT}} \\ &+\underbrace{\Big[E\big[Y^0_k \Mid \Post\big] - E\big[Y^0_k \Mid\Pre\big] \Big]-\Big[E\big[Y^0_U \Mid \Post\big] - E\big[Y_{U}^0 \Mid\Pre\big] \Big]}_{\text{Non-parallel trends bias}} \end{align} \]

We are interested in the first term, ATT, but it is contaminated by selection bias when the second term does not equal zero. Since evaluating the second term requires the counterfactual, \(E[Y^0_k \Mid \Post]\), we are unable to do so directly. What economists typically do, instead, is compare placebo pre-treatment leads of the DD coefficient. If DD coefficients in the pre-treatment periods are statistically zero, then the treatment and control groups followed similar trends prior to treatment. And here’s the rhetorical art of the design: if they had been similar before, then why wouldn’t they continue to be post-treatment?

But notice that this rhetoric is a kind of proof by assertion. Just because they were similar before does not logically require they be the same after. Assuming that the future is like the past is a form of the gambler’s fallacy called the “reverse position.” Just because a coin came up heads three times in a row does not mean it will come up heads the fourth time, not without further assumptions. Likewise, we are not obligated to believe that counterfactual trends would be the same post-treatment because they had been similar pre-treatment without further assumptions about the predictive power of pre-treatment trends. But to make such assumptions is again to make untestable assumptions, and so we are back where we started.

One situation where parallel trends would be obviously violated is if the treatment itself was endogenous. In such a scenario, the assignment of the treatment status would be directly dependent on potential outcomes, and absent the treatment, potential outcomes would’ve changed regardless. Such traditional endogeneity requires more than merely lazy visualizations of parallel leads. While the test is important, technically pre-treatment similarities are neither necessary nor sufficient to guarantee parallel counterfactual trends (Kahn-Lang and Lang 2019). The assumption is not so easily proven. You can never stop being diligent in attempting to determine whether groups of units endogenously selected into treatment, the presence of omitted variable biases, various sources of selection bias, and open backdoor paths. When the structural error term in a dynamic regression model is uncorrelated with the treatment variable, you have strict exogeneity, and that is what gives you parallel trends, and that is what makes you able to make meaningful statements about your estimates.

Now with that pessimism out of the way, let’s discuss event study plots because though they are not direct tests of the parallel trends assumption, they have their place because they show that the two groups of units were comparable on dynamics in the pre-treatment period.6 Such conditional independence concepts have been used profitably throughout this book, and we do so again now.

Authors have tried showing the differences between treatment and control groups a few different ways. One way is to simply show the raw data, which you can do if you have a set of groups who received the treatment at the same point in time. Then you would just visually inspect whether the pre-treatment dynamics of the treatment group differed from that of the control group units.

But what if you do not have a single treatment date? What if instead you have differential timing wherein groups of units adopt the treatment at different points? Then the concept of pre-treatment becomes complex. If New Jersey raised its minimum wage in 1992 and New York raised its minimum wage in 1994, but Pennsylvania never raised its minimum wage, the pre-treatment period is defined for New Jersey (1991) and New York (1993), but not Pennsylvania. Thus, how do we go about testing for pre-treatment differences in that case? People have done it in a variety of ways.

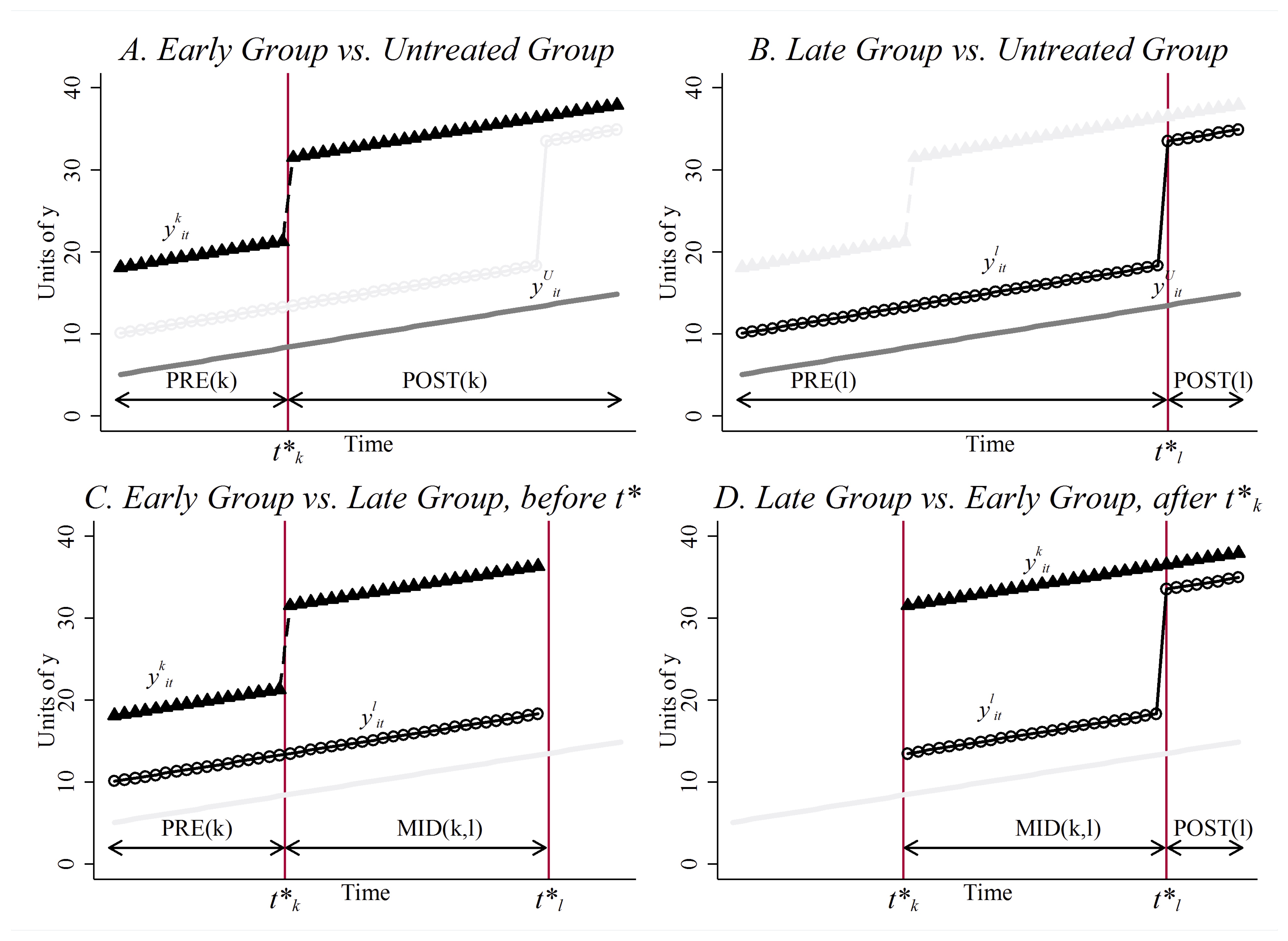

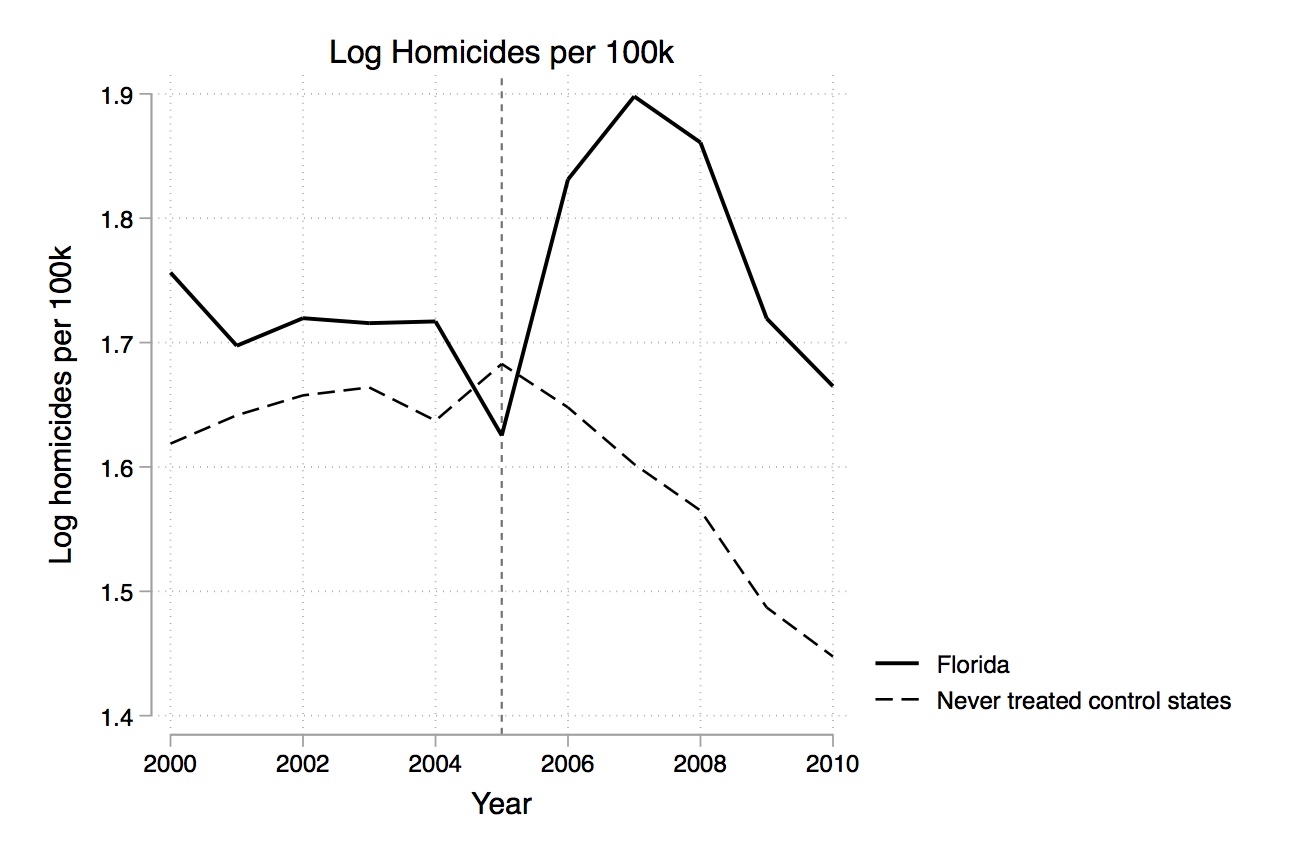

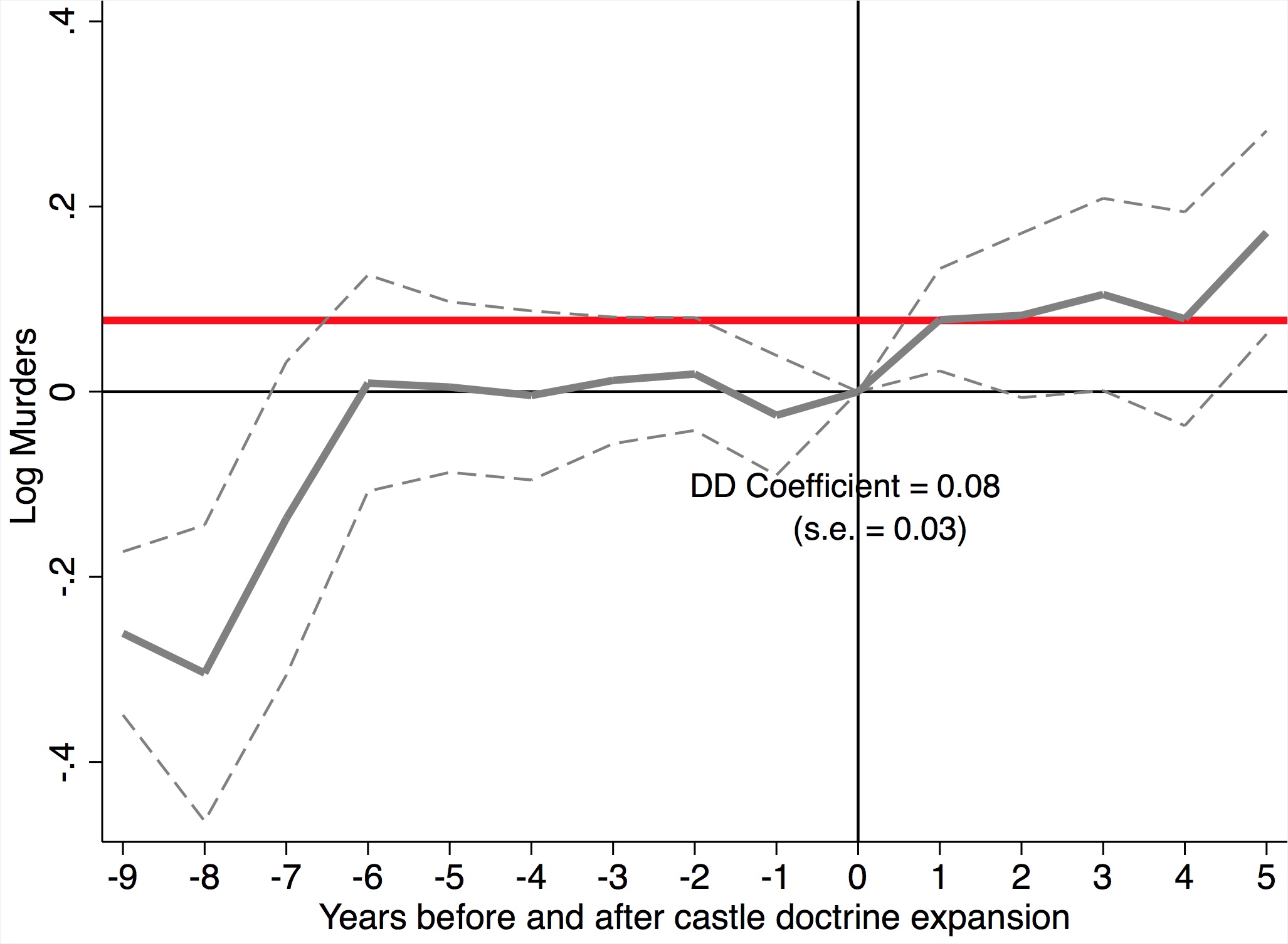

One possibility is to plot the raw data, year by year, and simply eyeball. You would compare the treatment group with the never-treated, for instance, which might require a lot of graphs and may also be awkward looking. Cheng and Hoekstra (2013) took this route, and created a separate graph comparing treatment groups with an untreated group for each different year of treatment. The advantage is its transparent display of the raw unadjusted data. No funny business. The disadvantages of this approach are several-fold. First, it may be cumbersome when the number of treatment groups is large, making it practically impossible. Second, it may not be beautiful. But third, this necessarily assumes that the only control group is the never-treated group, which in fact is not true given what Goodman-Bacon (2019) has shown. Any DD is a combination of a comparison between the treatment and the never treated, an early treated compared to a late treated, and a late treated compared to an early treated. Thus only showing the comparison with the never treated is actually a misleading presentation of the underlying mechanics of identification using a twoway fixed-effects model with differential timing.

Anderson, Hansen, and Rees (2013) took an alternative, creative approach to show the comparability of states with legalized medical marijuana and states without. As I said, the concept of a pre-treatment period for a control state is undefined when pre-treatment is always in reference to a specific treatment date which varies across groups. So, the authors construct a recentered time path of traffic fatality rates for the control states by assigning random treatment dates to all control states and then plotting the average traffic fatality rates for each group in years leading up to treatment and beyond. This approach has a few advantages. First, it plots the raw data, rather than coefficients from a regression (as we will see next). Second, it plots that data against controls. But its weakness is that technically, the control series is not in fact true. It is chosen so as to give a comparison, but when regressions are eventually run, they will not be based on this series. But the main shortcoming is that technically it is not displaying any of the control groups that will be used for estimation (Goodman-Bacon 2019). It is not displaying a comparison between the treated and the never treated; it is not a comparison between the early and late treated; it is not a comparison between the late and early treated. While a creative attempt to evaluate the pre-treatment differences in leads, it does not in fact technically show that.

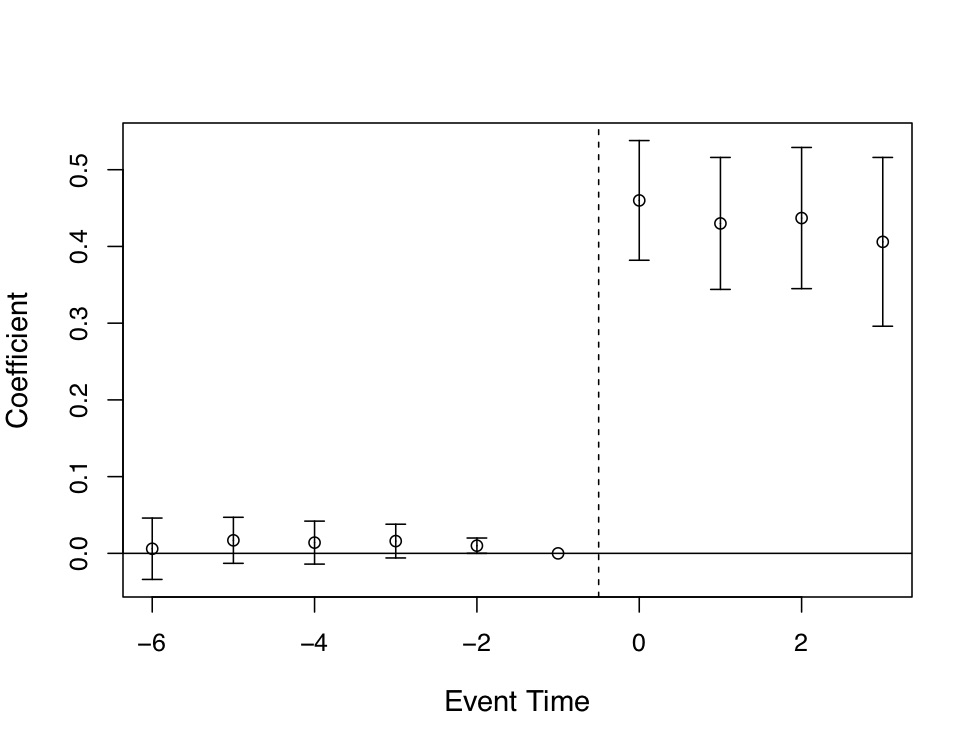

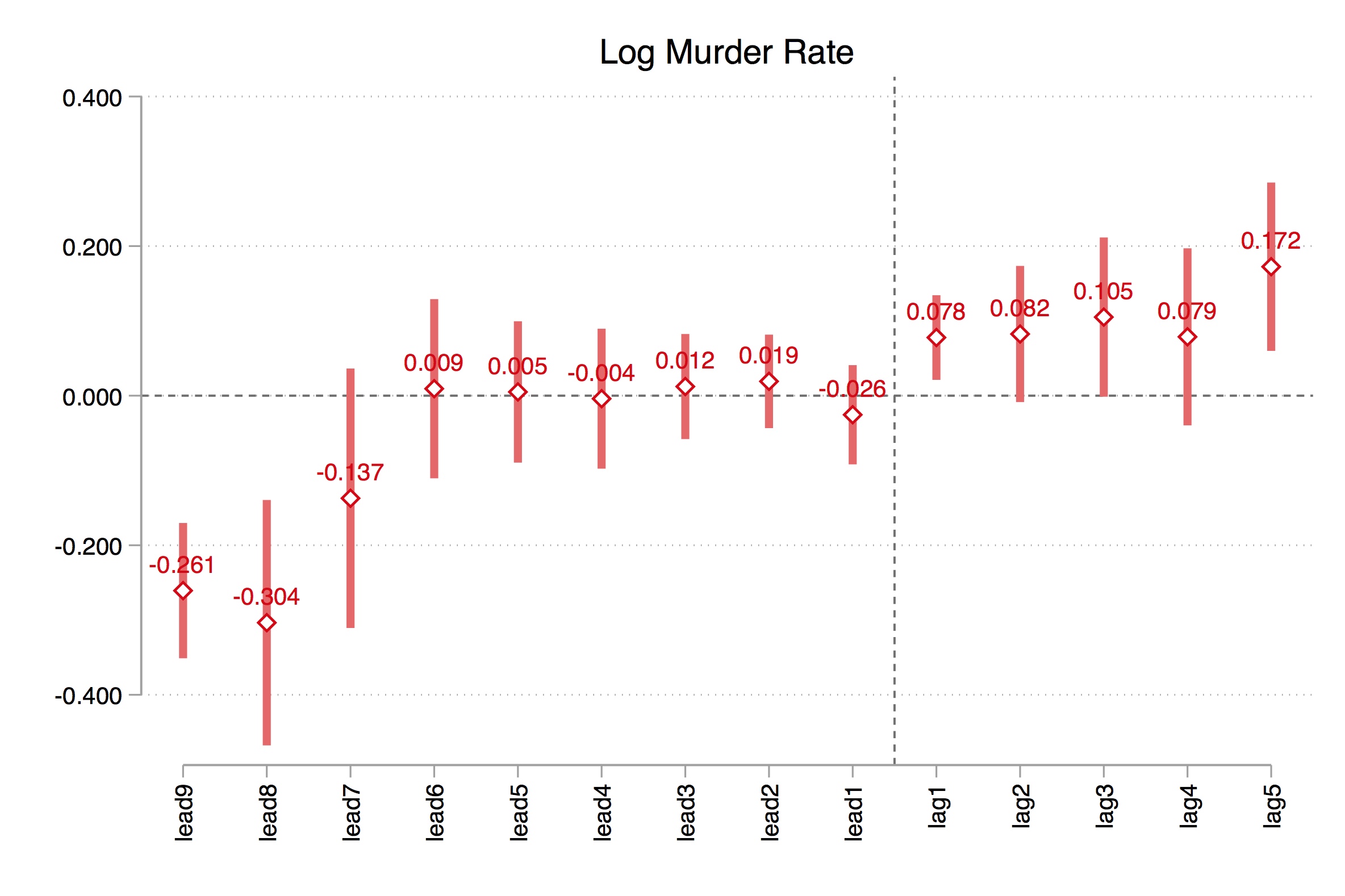

The current way in which authors evaluate the pre-treatment dynamics between a treatment and control group with differential timing is to estimate a regression model that includes treatment leads and lags. I find that it is always useful to teach these concepts in the context of an actual paper, so let’s review an interesting working paper by Miller et al. (2019).

A provocative new study by Miller et al. (2019) examined the expansion of Medicaid under the Affordable Care Act. They were primarily interested in the effect that this expansion had on population mortality. Earlier work had cast doubt on Medicaid’s effect on mortality (Finkelstein et al. 2012; Baicker et al. 2013), so revisiting the question with a larger sample size had value.

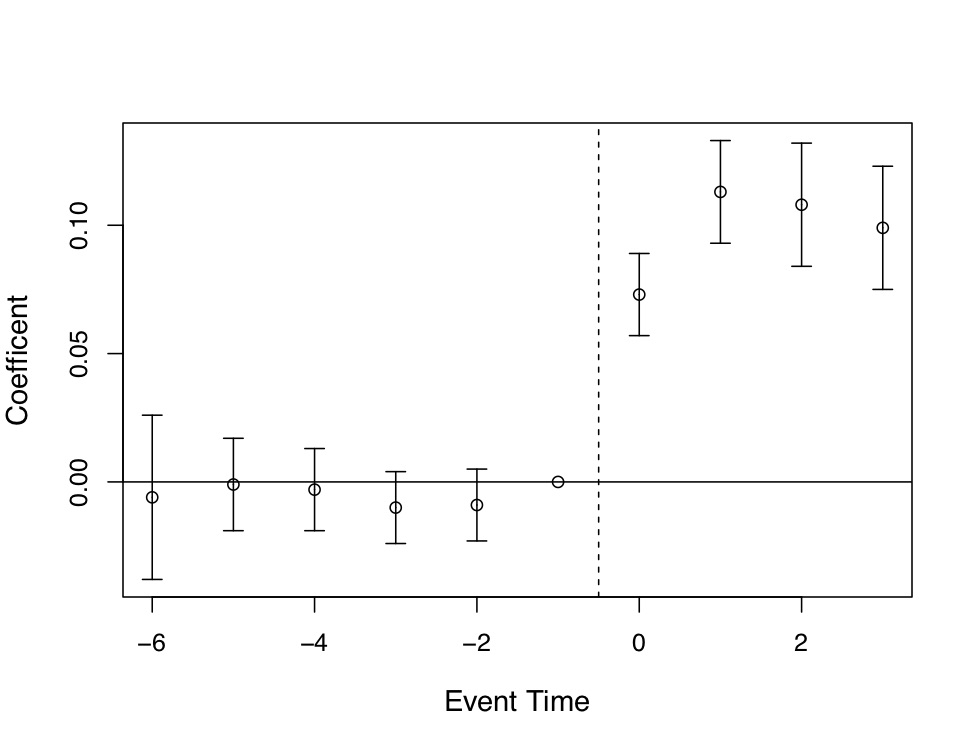

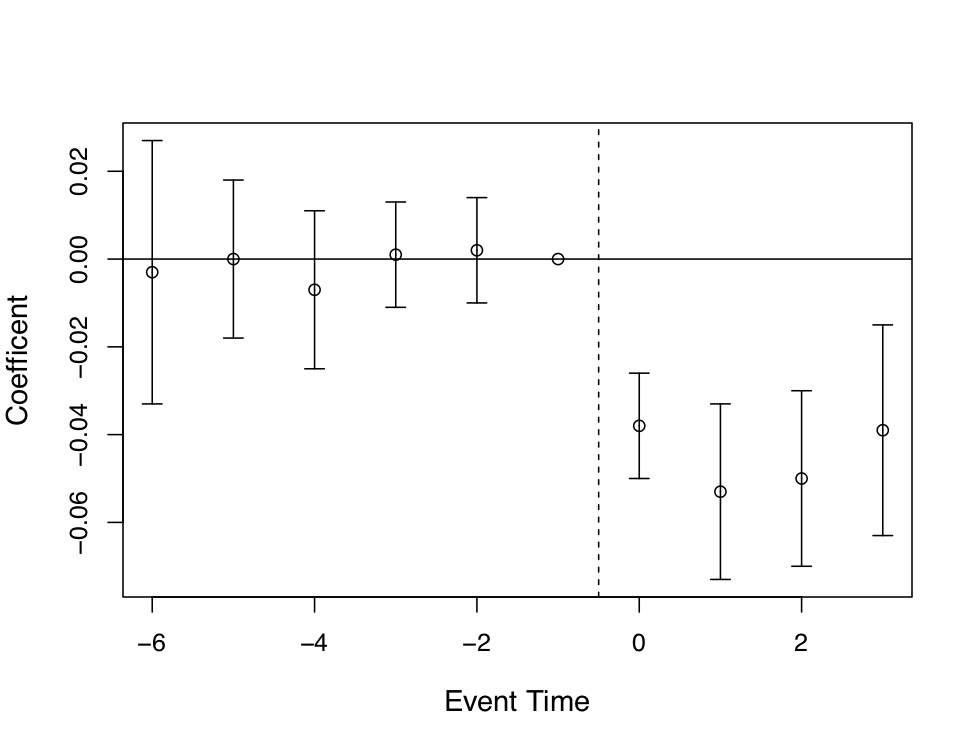

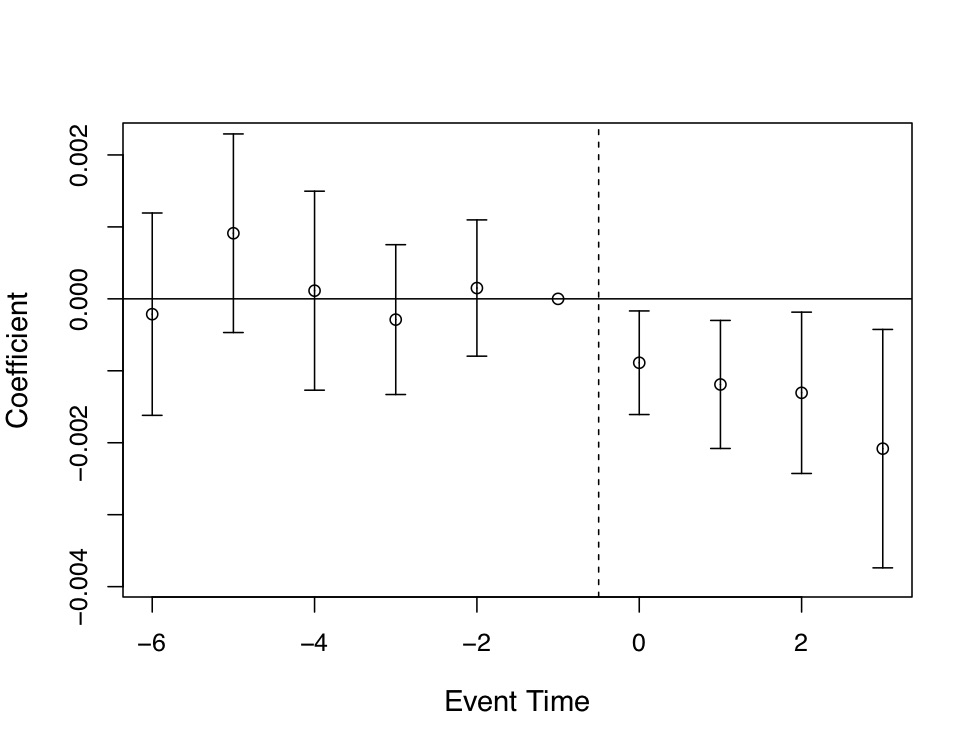

Like Snow before them, the authors link data sets on deaths with large-scale federal survey data, thus showing that shoe leather often goes hand in hand with good design. They use these data to evaluate the causal impact of Medicaid enrollment on mortality using a DD design. Their focus is on the near-elderly adults in states with and without the Affordable Care Act Medicaid expansions and they find a 0.13-percentage-point decline in annual mortality, which is a 9.3% reduction over the sample mean, as a result of the ACA expansion. This effect is a result of a reduction in disease-related deaths and gets larger over time. Medicaid, in this estimation, saved a non-trivial number of lives.

As with many contemporary DD designs, Miller et al. (2019) evaluate the pre-treatment leads instead of plotting the raw data by treatment and control. Post-estimation, they plotted regression coefficients with 95% confidence intervals on their treatment leads and lags. Including leads and lags into the DD model allowed the reader to check both the degree to which the post-treatment treatment effects were dynamic, and whether the two groups were comparable on outcome dynamics pre-treatment. Models like this one usually follow a form like: \[ Y_{ist} = \gamma_s + \lambda_t + \sum_{\tau=-q}^{-1}\gamma_{\tau}D_{s\tau} + \sum_{\tau=0}^m\delta_{\tau}D_{s\tau}+x_{ist}+ \varepsilon_{ist} \] Treatment occurs in year 0. You include \(q\) leads or anticipatory effects and \(m\) lags or post-treatment effects.
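To see the mechanics of a leads-and-lags regression, here is a small sketch on simulated data. It is not Miller et al.'s specification. I am assuming a state-by-year panel with a single common treatment date, no covariates, and, as is common practice, I omit the \(\tau=-1\) dummy so that every coefficient is measured relative to the year before treatment. The function name `event_study` and all numbers are hypothetical.

```python
import numpy as np


def event_study(y, treated, treat_year, ref=-1):
    """OLS of y (states x years) on state FE, year FE, and event-time dummies.

    Returns {event_time: coefficient}; the reference period `ref` is omitted.
    """
    n_states, n_years = y.shape
    state = np.repeat(np.arange(n_states), n_years)    # long-format state index
    year = np.tile(np.arange(n_years), n_states)       # long-format year index
    event_time = year - treat_year

    state_fe = (state[:, None] == np.arange(n_states)).astype(float)
    year_fe = (year[:, None] == np.arange(1, n_years)).astype(float)  # drop year 0
    taus = [t for t in range(-treat_year, n_years - treat_year) if t != ref]
    # D_{s,tau} = 1 for treated states observed tau periods from treatment.
    leads_lags = np.column_stack(
        [(treated[state] & (event_time == t)).astype(float) for t in taus]
    )

    X = np.hstack([state_fe, year_fe, leads_lags])
    beta, *_ = np.linalg.lstsq(X, y.ravel(), rcond=None)
    return dict(zip(taus, beta[-len(taus):]))


rng = np.random.default_rng(1)
n_states, n_years, treat_year = 30, 10, 5
treated = np.arange(n_states) < 15
effect_path = np.array([1.0, 1.5, 2.0, 2.5, 3.0])      # hypothetical delta_0..delta_4

y = rng.normal(size=(n_states, 1)) + rng.normal(size=(1, n_years))
y = y + rng.normal(scale=0.1, size=y.shape)
y[treated, treat_year:] += effect_path                 # effects that grow over time

coefs = event_study(y, treated, treat_year)
for tau, coef in sorted(coefs.items()):
    print(f"tau={tau:+d}: {coef: .3f}")
```

In this simulation parallel trends holds by construction, so the lead coefficients should hover around zero while the lag coefficients trace out the growing effect. Plotting these coefficients with their confidence intervals gives the event study figure.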

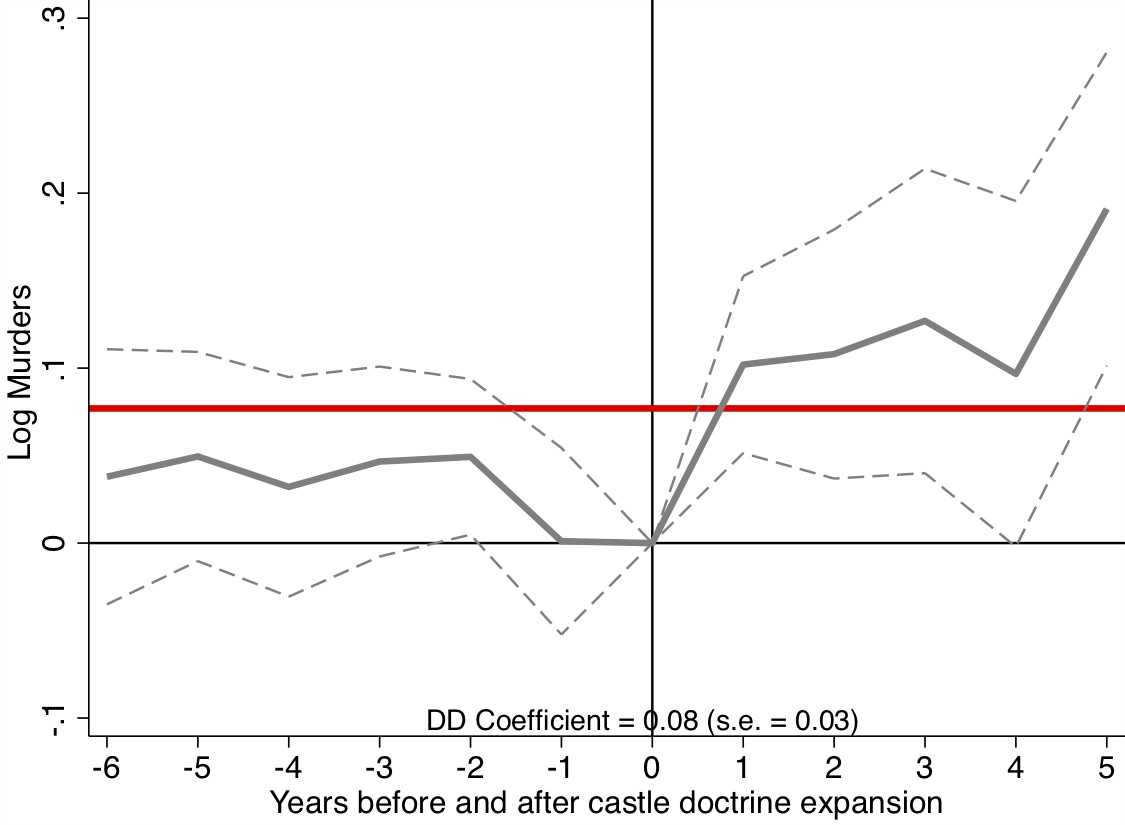

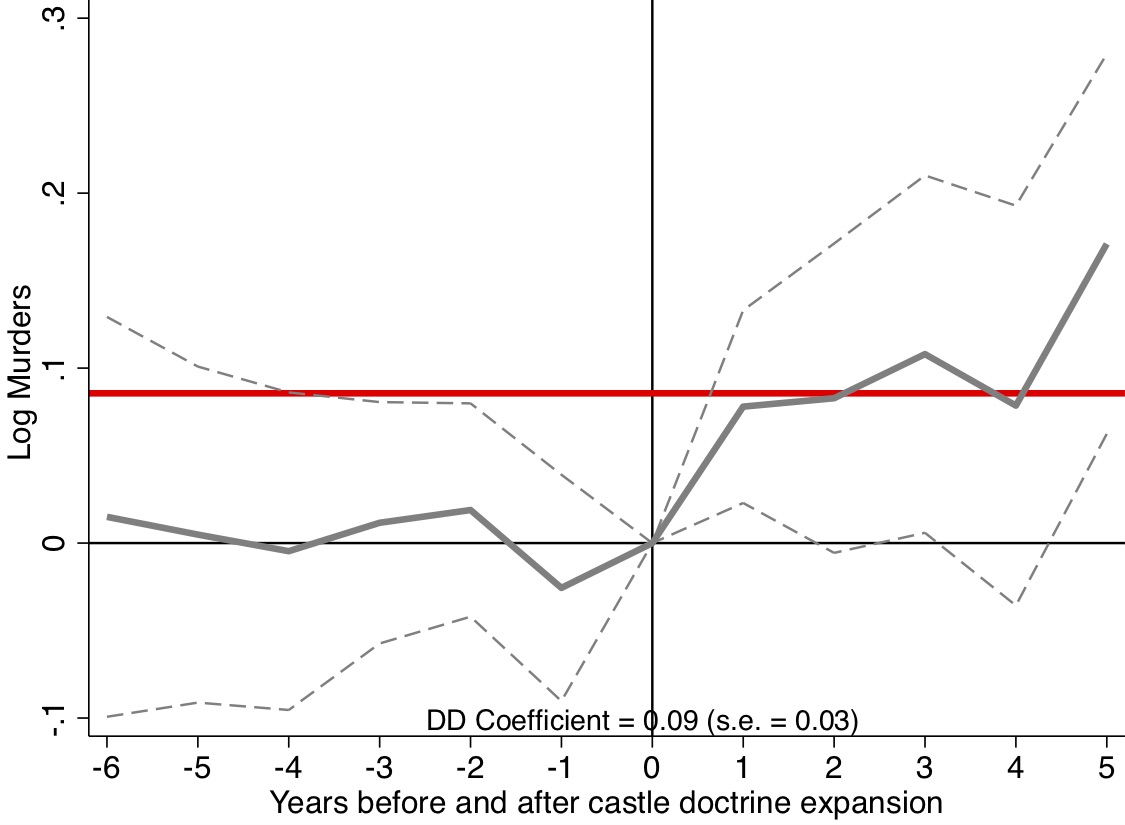

Miller et al. (2019) produce four event studies that when taken together tell the main parts of the story of their paper. This is, quite frankly, the art of the rhetoric of causal inference—visualization of key estimates, such as “first stages” as well as outcomes and placebos. The event study plots are so powerfully persuasive, they will make you a bit jealous, since oftentimes yours won’t be nearly so nice. Let’s look at the first three. State expansion of Medicaid under the Affordable Care Act increased Medicaid eligibility (Figure 9.4), which is not altogether surprising. It also caused an increase in Medicaid coverage (Figure 9.5), and as a consequence reduced the percentage of the uninsured population (Figure 9.6). All three of these are simply showing that the ACA Medicaid expansion had “bite”—people enrolled and became insured.

There are several features of these event studies that should catch your eye. First, look at Figure 9.4. The pre-treatment coefficients are nearly on the zero line itself. Not only are they nearly zero in their point estimate, but their standard errors are very small. This means these are very precisely estimated zero differences between individuals in the two groups of states prior to the expansion.

The second thing you see, though, is the elephant in the room. Post-treatment, the probability that someone becomes eligible for Medicaid immediately shoots up to 0.4 and while not as precise as the pre-treatment coefficients, the authors can rule out effects as low as 0.3 to 0.35. These are large increases in eligibility, and given that the coefficients prior to the treatment are basically zero, we find it easy to believe that the higher post-treatment coefficients were caused by the ACA’s expansion of Medicaid in states.

Of course, I would not be me if I did not say that technically the zeroes pre-treatment do not therefore mean that the post-treatment difference between counterfactual trends and observed trends are zero, but doesn’t it seem compelling when you see it? Doesn’t it compel you, just a little bit, that the changes in enrollment and insurance status were probably caused by the Medicaid expansion? I daresay a table of coefficients with leads, lags, and standard errors would probably not be as compelling even though it is the identical information. Also, it is only fair that the skeptic refute these patterns with new evidence of what it is other than the Medicaid expansion. It is not enough to merely hand wave a criticism of omitted variable bias; the critic must be as engaged in this phenomenon as the authors themselves, which is how empiricists earn the right to critique someone else’s work.

Similar graphs are shown for coverage—prior to treatment, the two groups of individuals in treatment and control were similar with regards to their coverage and uninsured rate. But post-treatment, they diverge dramatically. Taken together, we have the “first stage,” which means we can see that the Medicaid expansion under the ACA had “bite.” Had the authors failed to find changes in eligibility, coverage, or uninsured rates, then any evidence from the secondary outcomes would have doubt built in. This is the reason it is so important that you examine the first stage (treatment’s effect on usage), as well as the second stage (treatment’s effect on the outcomes of interest).

But now let’s look at the main result: what effect did this have on population mortality itself? Recall, Miller et al. (2019) linked administrative death records with a large-scale federal survey. So they actually know who is on Medicaid and who is not. John Snow would be proud of this design, the meticulous collection of high-quality data, and all the shoe leather the authors showed.

This event study is presented in Figure 9.7. A graph like this is the contemporary heart and soul of a DD design, both because it conveys key information regarding the comparability of the treatment and control groups in their dynamics just prior to treatment, and because such strong data visualization of main effects is powerfully persuasive. It’s quite clear looking at it that there was no difference between the trending tendencies of the two sets of states prior to treatment, making the subsequent divergence all the more striking.

But a picture like this is only as important as the thing that it is studying, and it is worth summarizing what Miller et al. (2019) have revealed here. The expansion of ACA Medicaid led to large swaths of people becoming eligible for Medicaid. In turn, they enrolled in Medicaid, which caused the uninsured rate to drop considerably. The authors then find amazingly using linked administrative data on death records that the expansion of ACA Medicaid led to a 0.13 percentage point decline in annual mortality, which is a 9.3 percent reduction over the mean. They go on to try and understand the mechanism (another key feature of this high-quality study) by which such amazing effects may have occurred, and conclude that Medicaid caused near-elderly individuals to receive treatment for life-threatening illnesses. I suspect we will be hearing about this study for many years.

There are several tests of the validity of a DD strategy. I have already discussed one: comparability between treatment and control groups on observable pre-treatment dynamics. Next, I will discuss other ways to evaluate whether estimated causal effects are credible by emphasizing the use of placebo falsification.

The idea of placebo falsification is simple. Say that you are finding some negative effect of the minimum wage on low-wage employment. Is the hypothesis true if we find evidence in favor? Maybe, maybe not. Maybe what would really help, though, is if you had in mind an alternative hypothesis and then tried to test that alternative hypothesis. If you cannot reject the null on the alternative hypothesis, then it provides some credibility to your original analysis. For instance, maybe you are picking up something spurious, like cyclical factors or other unobservables not easily captured by a time or state fixed effects. So what can you do?

One candidate placebo falsification might simply be to use data for an alternative type of worker whose wages would not be affected by the binding minimum wage. For instance, minimum wages affect employment and earnings of low-wage workers as these are the workers who literally are hired based on the market wage. Without some serious general equilibrium gymnastics, the minimum wage should not affect the employment of higher wage workers, because the minimum wage is not binding on high wage workers. Since high- and low-wage workers are employed in very different sectors, they are unlikely to be substitutes. This reasoning might lead us to consider the possibility that higher wage workers might function as a placebo.

There are two ways you can go about incorporating this idea into our analysis. Many people like to be straightforward and simply fit the same DD design using high-wage employment as the outcome. If the coefficient on minimum wages is zero when using high-wage worker employment as the outcome, but the coefficient on minimum wages for low-wage workers is negative, then we have provided stronger evidence that complements the earlier analysis we did on the low-wage workers. But there is another method that uses the within-state placebo for identification called the difference-in-differences-in-differences (“triple differences”). I will discuss that design now.

In our earlier analysis, we assumed that the only thing that happened to New Jersey after it passed the minimum wage was a common shock, \(T\), but what if there were state-specific time shocks such as \(NJ_t\) or \(PA_t\)? Then even DD cannot recover the treatment effect. Let’s see for ourselves using a modification of the simple minimum-wage table from earlier, which will include the within-state workers who hypothetically were untreated by the minimum wage—the “high-wage workers.”

| States | Group | Period | Outcomes | \(D_1\) | \(D_2\) | \(D_3\) |
|---|---|---|---|---|---|---|
| NJ | Low-wage workers | After | \(NJ_l+T+NJ_t+l_t+D\) | \(T+NJ_t+l_t+D\) | \((l_t-h_t)+D\) | \(D\) |
| | | Before | \(NJ_l\) | | | |
| | High-wage workers | After | \(NJ_h+T+NJ_t+h_t\) | \(T+NJ_t+h_t\) | | |
| | | Before | \(NJ_h\) | | | |
| PA | Low-wage workers | After | \(PA_l+T+PA_t+l_t\) | \(T+PA_t+l_t\) | \(l_t-h_t\) | |
| | | Before | \(PA_l\) | | | |
| | High-wage workers | After | \(PA_h+T+PA_t+h_t\) | \(T+PA_t+h_t\) | | |
| | | Before | \(PA_h\) | | | |

Before the minimum-wage increase, low- and high-wage employment in New Jersey is determined by a group-specific New Jersey fixed effect (e.g., \(NJ_h\)). The same is true for Pennsylvania. But after the minimum-wage hike, four things change for low-wage workers in New Jersey: national trends cause employment to change by \(T\); New Jersey-specific time shocks change employment by \(NJ_t\); generic trends in low-wage workers change employment by \(l_t\); and the minimum wage has some unknown effect \(D\). We have the same setup in Pennsylvania except there is no minimum wage, and Pennsylvania experiences its own time shocks.

Now if we take first differences for each set of states, we only eliminate the state fixed effect. The first difference estimate for New Jersey includes the minimum-wage effect, \(D\), but is also hopelessly contaminated by confounders (i.e., \(T+NJ_t+l_t\)). So we take a second difference for each state, and doing so, we eliminate two of the confounders: \(T\) disappears and \(NJ_t\) disappears. But while this DD strategy has eliminated several confounders, it has also introduced new ones (i.e., \((l_t-h_t)\)). This is the final source of selection bias that triple differences are designed to resolve. By subtracting Pennsylvania’s second difference from New Jersey’s, the \((l_t-h_t)\) term is deleted and the minimum-wage effect is isolated.
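If the algebra feels slippery, it can help to plug numbers into the table. The sketch below assigns arbitrary, hypothetical values to each term and walks through the three differences. The helper names `d1` and `d2` are mine, chosen to mirror the \(D_1\) and \(D_2\) columns.

```python
# Hypothetical values for each term in the table (any numbers would do).
T, NJ_t, PA_t, l_t, h_t, D = 1.0, 0.5, -0.25, 0.75, 0.25, -2.0
NJ_l, NJ_h, PA_l, PA_h = 10.0, 20.0, 12.0, 22.0   # group-by-state fixed effects

outcome = {
    ("NJ", "low", "after"): NJ_l + T + NJ_t + l_t + D,
    ("NJ", "low", "before"): NJ_l,
    ("NJ", "high", "after"): NJ_h + T + NJ_t + h_t,
    ("NJ", "high", "before"): NJ_h,
    ("PA", "low", "after"): PA_l + T + PA_t + l_t,
    ("PA", "low", "before"): PA_l,
    ("PA", "high", "after"): PA_h + T + PA_t + h_t,
    ("PA", "high", "before"): PA_h,
}


def d1(state, group):
    """First difference: after minus before, within a state and worker group."""
    return outcome[(state, group, "after")] - outcome[(state, group, "before")]


def d2(state):
    """Second difference: low-wage change minus high-wage change, within a state."""
    return d1(state, "low") - d1(state, "high")


# Third difference: New Jersey's second difference minus Pennsylvania's.
d3 = d2("NJ") - d2("PA")

# A plain DD on low-wage workers across states is still contaminated.
naive_dd = d1("NJ", "low") - d1("PA", "low")

print(f"D2 for NJ: {d2('NJ')}  (equals (l_t - h_t) + D)")
print(f"D2 for PA: {d2('PA')}  (equals l_t - h_t)")
print(f"D3 (DDD):  {d3}  (equals D)")
print(f"Naive DD:  {naive_dd}  (equals D + NJ_t - PA_t)")
```

The naive DD on low-wage workers misses \(D\) by exactly the gap in state-specific shocks, \(NJ_t - PA_t\), while the triple difference recovers \(D\).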

Now, this solution is not without its own set of unique parallel-trends assumptions. But one of the parallel trends here I’d like you to see is the \(l_t-h_t\) term. This parallel trends assumption states that the effect can be isolated if the gap between high- and low-wage employment would’ve evolved similarly in the treatment state counterfactual as it did in the historical control states. And we should probably provide some credible evidence that this is true with leads and lags in an event study as before.

The triple differences design was first introduced by Gruber (1994) in a study of state-level policies providing maternity benefits. I present his main results in Table 9.3. Notice that he uses as his treatment group married women of childbearing age in treatment and control states, but he also uses a set of placebo units (older women and single men 20–40) as within-state controls. He then goes through the differences in means to get the difference-in-differences for each set of groups, after which he calculates the DDD as the difference between these two difference-in-differences.

| Location/year | Pre-law | Post-law | Difference |
|---|---|---|---|
| A. Treatment: Married women, 20-40yo | | | |
| Experimental states | 1.547 | 1.513 | \(-0.034\) |
| | (0.012) | (0.012) | (0.017) |
| Control states | 1.369 | 1.397 | 0.028 |
| | (0.010) | (0.010) | (0.014) |
| Difference | 0.178 | 0.116 | |
| | (0.016) | (0.015) | |
| Difference-in-difference | \(-0.062\) | | |
| | (0.022) | | |
| B. Control: Over 40 and Single Males 20-40 | | | |
| Experimental states | 1.759 | 1.748 | \(-0.011\) |
| | (0.007) | (0.007) | (0.010) |
| Control states | 1.630 | 1.627 | \(-0.003\) |
| | (0.007) | (0.007) | (0.010) |
| Difference | 0.129 | 0.121 | |
| | (0.010) | (0.010) | |
| Difference-in-difference | \(-0.008\) | | |
| | (0.014) | | |
| DDD | \(-0.054\) | | |
| | (0.026) | | |

Standard errors in parentheses.

Ideally when you do a DDD estimate, the causal effect estimate will come from changes in the treatment units, not changes in the control units. That’s precisely what we see in Gruber (1994): the action comes from changes in the married women age 20–40 (\(-0.062\)); there’s little movement among the placebo units \((-0.008)\). Thus when we calculate the DDD, we know that most of that calculation is coming from the first DD, and not so much from the second. We emphasize this because DDD is really just another falsification exercise, and just as we would expect no effect had we done the DD on this placebo group, we hope that our DDD estimate is also based on negligible effects among the control group.
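You can verify these numbers yourself from the means in Table 9.3. The short sketch below recomputes each difference-in-differences and the DDD. The standard-error line rests on an assumption of mine, namely that the two DD estimates are independent so that their variances add; I am not claiming this is how Gruber computed it.

```python
from math import sqrt

# Means from Table 9.3: (pre-law, post-law).
married_women = {"experimental": (1.547, 1.513), "control": (1.369, 1.397)}
placebo_group = {"experimental": (1.759, 1.748), "control": (1.630, 1.627)}


def diff_in_diff(panel):
    """Change in experimental states minus change in control states."""
    exp_pre, exp_post = panel["experimental"]
    ctl_pre, ctl_post = panel["control"]
    return (exp_post - exp_pre) - (ctl_post - ctl_pre)


dd_treatment = diff_in_diff(married_women)
dd_placebo = diff_in_diff(placebo_group)
ddd = dd_treatment - dd_placebo

# Assumption: the two DD estimates are independent, so their variances add.
ddd_se = sqrt(0.022**2 + 0.014**2)

print(f"DD, married women 20-40: {dd_treatment:.3f}")
print(f"DD, placebo group:       {dd_placebo:.3f}")
print(f"DDD:                     {ddd:.3f} ({ddd_se:.3f})")
```

Rounded to three decimals, this reproduces the \(-0.062\), \(-0.008\), and \(-0.054\) reported in the table, and the independence assumption happens to give a standard error matching the reported 0.026.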

What we have done up to now is show how to use sample analogs and simple differences in means to estimate the treatment effect using DDD. But we can also use regression to control for additional covariates that perhaps are necessary to close backdoor paths and so forth. What does that regression equation look like? Both the regression itself, and the data structure upon which the regression is based, are complicated because of the stacking of different groups and the sheer number of interactions involved. Estimating a DDD model requires estimating the following regression: \[ \begin{align} Y_{ijt} &= \alpha + \psi X_{ijt} + \beta_1 \tau_t + \beta_2 \delta_j + \beta_3 D_i + \beta_4(\delta \times \tau)_{jt} \\ & +\beta_5(\tau \times D)_{ti} + \beta_6(\delta \times D)_{ij} + \beta_7(\delta \times \tau \times D)_{ijt}+ \varepsilon_{ijt} \end{align} \] where the parameter of interest is \(\beta_7\). First, notice the additional subscript, \(j\). This \(j\) indexes whether it’s the main category of interest (e.g., low-wage employment) or the within-state comparison group (e.g., high-wage employment). This requires a stacking of the data into a panel structure by group, as well as state. Second, the DDD model requires that you include all possible interactions across the group dummy \(\delta_j\), the post-treatment dummy \(\tau_t\) and the treatment state dummy \(D_i\). The regression must include each dummy independently, each individual interaction, and the triple differences interaction. One of these will be dropped due to multicollinearity, but I include them in the equation so that you can visualize all the factors used in the product of these terms.
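Here is a minimal sketch of that regression on simulated data, mostly so you can see how the columns of the design matrix line up with \(\beta_1\) through \(\beta_7\). The data-generating process, the coefficient values, and the function name `ddd_regression` are all hypothetical, and I leave out the covariates \(X_{ijt}\) to keep it short.

```python
import numpy as np


def ddd_regression(y, post, group, state):
    """OLS with every main effect and interaction; returns the 8 coefficients."""
    X = np.column_stack([
        np.ones_like(y),          # alpha
        post,                     # beta_1: tau_t, the post-treatment dummy
        group,                    # beta_2: delta_j, the group of interest
        state,                    # beta_3: D_i, the treatment state
        group * post,             # beta_4
        post * state,             # beta_5
        group * state,            # beta_6
        group * post * state,     # beta_7: the triple-difference term
    ])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta


rng = np.random.default_rng(2)
n = 8000
post = rng.integers(0, 2, n).astype(float)
group = rng.integers(0, 2, n).astype(float)    # 1 = low-wage, 0 = high-wage
state = rng.integers(0, 2, n).astype(float)    # 1 = treatment state
true_ddd = -2.0

y = (10.0 + 1.0 * post + 3.0 * group + 2.0 * state
     + 0.5 * group * post + 0.75 * post * state + 1.5 * group * state
     + true_ddd * group * post * state
     + rng.normal(size=n))

beta = ddd_regression(y, post, group, state)
print(f"beta_7 estimate: {beta[7]:.3f} (true value {true_ddd})")
```

With real data you would stack the low-wage and high-wage observations in long format, add covariates, and cluster the standard errors by state.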

Now that we know a little about the DD design, it would probably be beneficial to replicate a paper. And since the DDD requires reshaping panel data multiple times, that makes working through a detailed replication even more important. The study we will be replicating is Cunningham and Cornwell (2013), one of my first publications and the third chapter of my dissertation. Buckle up, as this will be a bit of a roller-coaster ride.

Gruber, Levine, and Staiger (1999) was the beginning of what would become a controversial literature in reproductive health. They wanted to know the characteristics of the marginal child aborted had that child reached their teen years. The authors found that the marginal counterfactual child aborted was 60% more likely to grow up in a single-parent household, 50% more likely to live in poverty, and 45% more likely to be a welfare recipient. Clearly there were strong selection effects related to early abortion whereby it selected on families with fewer resources.

Their finding about the marginal child led John Donohue and Steven Levitt to wonder if there might be far-reaching effects of abortion legalization given the strong selection associated with its usage in the early 1970s. In Donohue and Levitt (2001), the authors argued that they had found evidence that abortion legalization had also led to massive declines in crime rates. Their interpretation of the results was that abortion legalization had reduced crime by removing high-risk individuals from a birth cohort, and as that cohort aged, those counterfactual crimes disappeared. Levitt (2004) attributed as much as 10% of the decline in crime between 1991 and 2001 to abortion legalization in the 1970s.

This study was, not surprisingly, incredibly controversial—some of it warranted but some unwarranted. For instance, some attacked the paper on ethical grounds and argued the paper was revitalizing the pseudoscience of eugenics. But Levitt was careful to focus only on the scientific issues and causal effects and did not offer policy advice based on his own private views, whatever those may be.

But some of the criticism the authors received was legitimate precisely because it centered on the research design and execution itself. Joyce (2004), Joyce (2009), and Foote and Goetz (2008) disputed the abortion-crime findings—some through replication exercises using different data, some with different research designs, and some through the discovery of key coding errors and erroneous variable construction.

One study in particular challenged the whole enterprise of estimating long-run improvements due to abortion legalization. Ted Joyce, an expert on reproductive health, cast doubt on the abortion-crime hypothesis using a DDD design (Joyce 2009). In addition to challenging Donohue and Levitt (2001), Joyce also threw down a gauntlet. He argued that if abortion legalization had such extreme negative selection as claimed by Gruber, Levine, and Staiger (1999) and Donohue and Levitt (2001), then it shouldn’t show up just in crime. It should show up everywhere. Joyce writes:

If abortion lowers homicide rates by 20–30%, then it is likely to have affected an entire spectrum of outcomes associated with well-being: infant health, child development, schooling, earnings and marital status. Similarly, the policy implications are broader than abortion. Other interventions that affect fertility control and that lead to fewer unwanted births—contraception or sexual abstinence—have huge potential payoffs. In short, a causal relationship between legalized abortion and crime has such significant ramifications for social policy and at the same time is so controversial, that further assessment of the identifying assumptions and their robustness to alternative strategies is warranted. (p.112)

Cunningham and Cornwell (2013) took up Joyce’s challenge. Our study estimated the effects of abortion legalization on long-term gonorrhea incidence. Why gonorrhea? For one, growing up in a single-parent household is a risk factor that leads to earlier sexual activity and unprotected sex, and Levine et al. (1999) found that abortion legalization caused teen childbearing to fall by 12%. Other risky outcomes had been found by numerous authors. Charles and Stephens (2006) reported that children exposed in utero to a legalized abortion regime were less likely to use illegal substances, which is correlated with risky sexual behavior.

My research design differed from Donohue and Levitt (2001) in that they used state-level lagged values of an abortion ratio, whereas I used difference-in-differences. My design exploited the early repeal of abortion in five states in 1970 and compared those states to the states where abortion was legalized under Roe v. Wade in 1973. To do this, I needed cohort-specific data on gonorrhea incidence by state and year, but as those data are not collected by the CDC, I had to settle for second best. That second best was the CDC’s gonorrhea data broken into five-year age categories (e.g., age 15–19, age 20–24). But this might still be useful because even with aggregate data, it might be possible to test the model I had in mind.

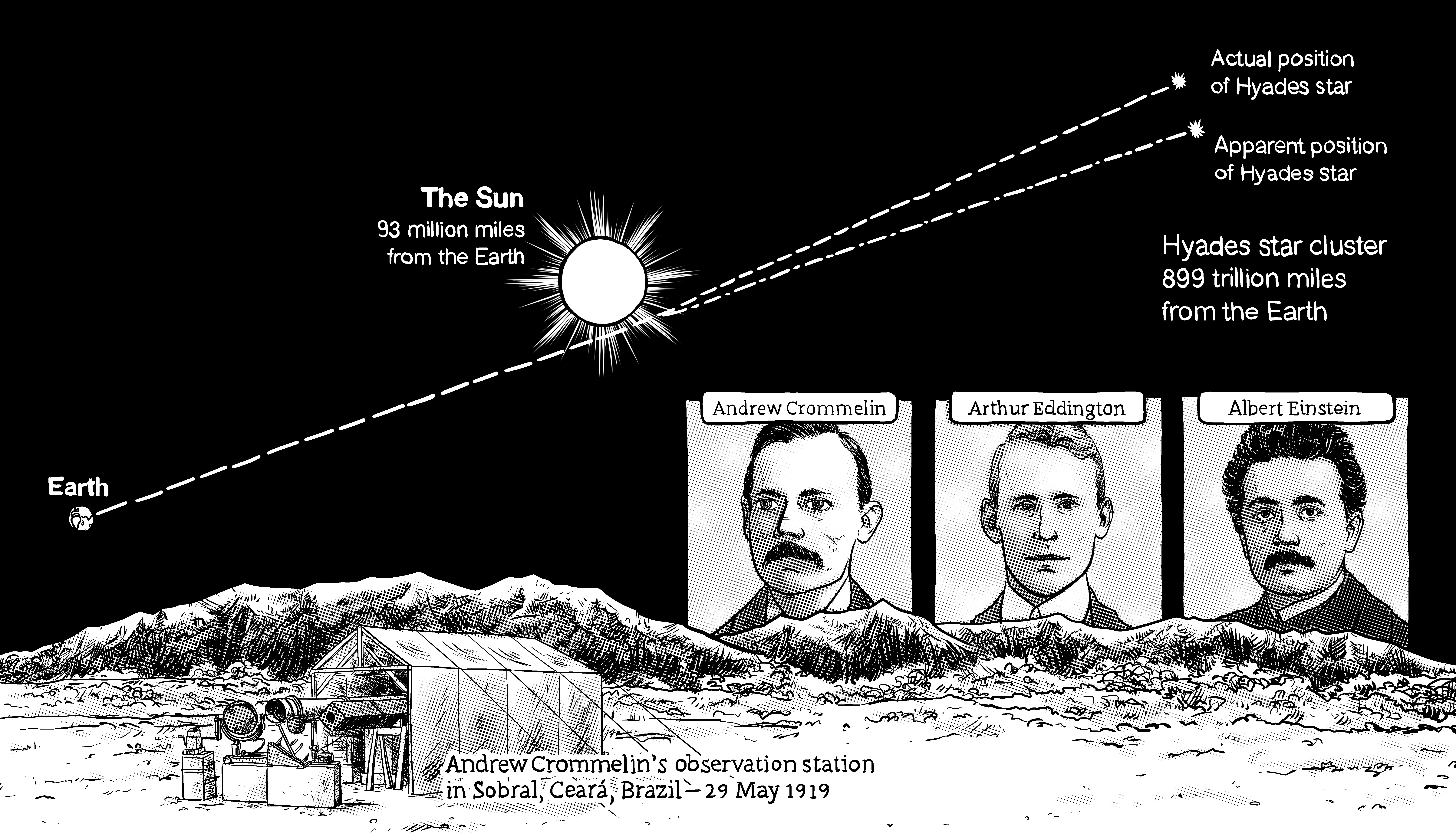

To understand this next part, which I consider the best part of my study, you must first accept a basic view of science that good theories make very specific falsifiable hypotheses. The more specific the hypothesis, the more convincing the theory, because if we find evidence exactly where the theory predicts, a Bayesian is likely to update her beliefs towards accepting the theory’s credibility. Let me illustrate what I mean with a brief detour involving Albert Einstein’s theory of relativity.

Einstein’s theory made several falsifiable hypotheses. One of them involved a precise prediction of the warping of light as it moved past a large object, such as a star. The problem was that testing this theory involved observing the distance between stars at night and comparing it to measurements made during the day as the starlight moved past the sun. But the sun is too bright in the daytime to see the stars, so those critical measurements can’t be made. Andrew Crommelin and Arthur Eddington realized the measurements could be made using an ingenious natural experiment. That natural experiment was an eclipse. They shipped telescopes to different parts of the world under the eclipse’s path so that they had multiple chances to make the measurements. They decided to measure the distances of a large cluster of stars passing by the sun when it was dark and then immediately during an eclipse (Figure 9.8). That test was over a decade after Einstein’s work was first published (Coles 2019). Think about it for a second: Einstein’s theory by deduction is making predictions about phenomena that no one had ever really observed before. If these phenomena turned out to exist, then how could the Bayesian not update her beliefs and accept that the theory was credible? Incredibly, Einstein was right: just as he predicted, the apparent position of these stars shifted when moving around the sun. Incredible!

So what does that have to do with my study of abortion legalization and gonorrhea? The theory of abortion legalization having strong selection effects on cohorts makes very specific predictions about the shape of observed treatment effects. And if we found evidence for that shape, we’d be forced to take the theory seriously. So what were these unusual yet testable predictions exactly?

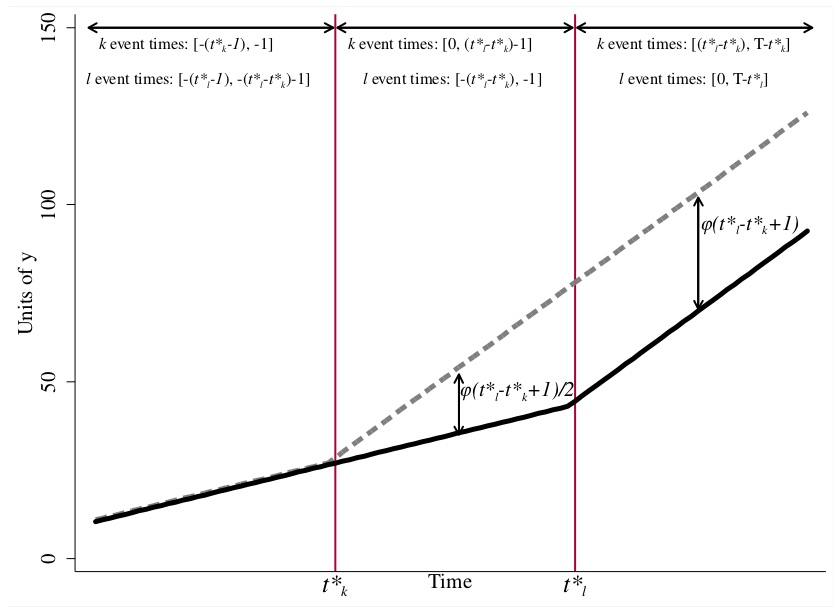

The testable prediction from the staggered adoption of abortion legalization concerned the age-year-state profile of gonorrhea. The early repeal of abortion by five states three years before the rest of the country predicts lower incidence among 15- to 19-year-olds in the repeal states only during the 1986–1992 period relative to their Roe counterparts as the treated cohorts aged. That’s not really all that special a prediction though. Maybe something happens in those same states fifteen to nineteen years later that isn’t controlled for, for instance. What else?

The abortion legalization theory also predicted the shape of the observed treatment effects in this particular staggered adoption. Specifically, we should observe nonlinear treatment effects. These treatment effects should be increasingly negative from 1986 to 1989, plateau from 1989 to 1991, then gradually dissipate until 1992. In other words, the abortion legalization hypothesis predicts a parabolic treatment effect as treated cohorts move through the age distribution. All DD coefficients beyond 1992 should be zero or statistically insignificant.

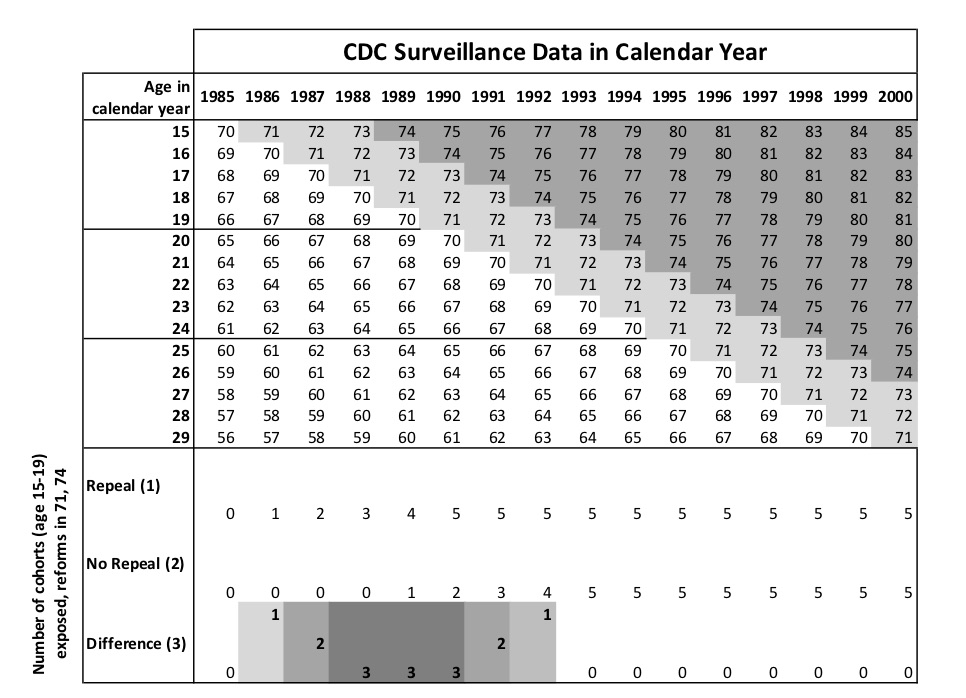

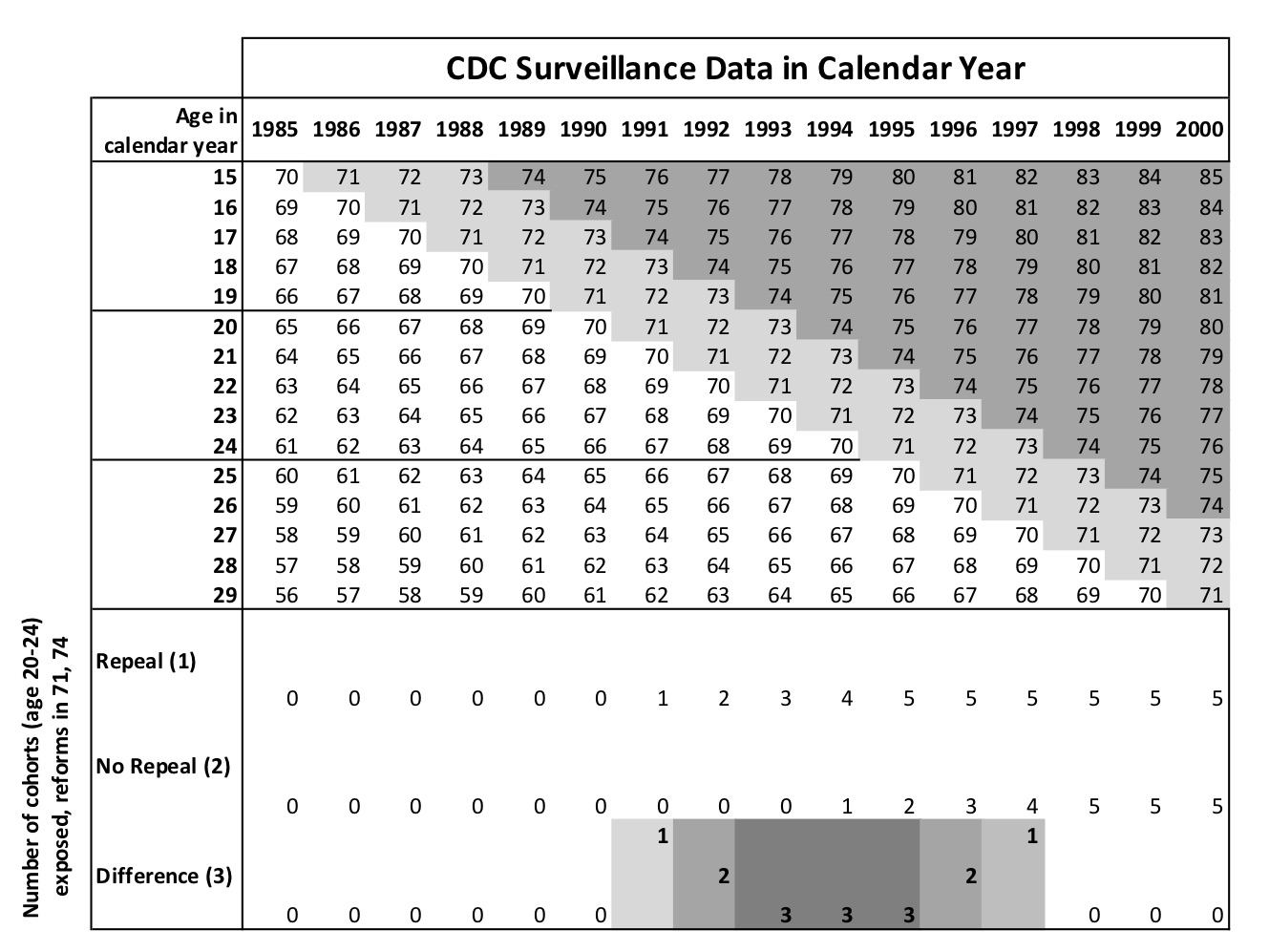

I illustrate these predictions in Figure 9.9. The top horizontal axis shows the year of the panel, the vertical axis shows the age in calendar years, and the cells show the cohort for a given person of a certain age in that given year. For instance, consider a 15-year-old in 1985. She was born in 1970. A 15-year-old in 1986 was born in 1971. A 15-year-old in 1987 was born in 1972, and so forth. I mark the cohorts who were treated by either repeal or Roe in different shades of gray.

The theoretical predictions of the staggered rollout are shown at the bottom of Figure 9.9. In 1986, only one cohort (the 1971 cohort) was treated and only in the repeal states. Therefore, we should see small declines in gonorrhea incidence among 15-year-olds in 1986 relative to Roe states. In 1987, two cohorts in our data are treated in the repeal states relative to Roe, so we should see larger effects in absolute value than we saw in 1986. But from 1988 to 1991, we should at most see only three net treated cohorts in the repeal states because starting in 1988, the Roe state cohorts enter and begin erasing those differences. After 1991, the effects should get smaller in absolute value through 1992, beyond which there should be no difference between repeal and Roe states.
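The cohort arithmetic behind these predictions can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `net_treated_cohorts` is hypothetical, the first treated birth cohort in repeal states is taken to be 1971 (as in the text), and the first treated birth cohort in Roe states is assumed to be 1974, three years later. The exact year in which Roe cohorts enter depends on how birth cohorts are dated, so Figure 9.9 remains the reference.

```python
def net_treated_cohorts(year, first_repeal_cohort=1971, first_roe_cohort=1974,
                        ages=range(15, 20)):
    """Count treated birth cohorts among 15- to 19-year-olds in repeal states
    minus the count in Roe states, for a given calendar year.

    The cohort cutoffs are assumptions for illustration (see lead-in).
    """
    birth_years = [year - age for age in ages]
    treated_repeal = sum(b >= first_repeal_cohort for b in birth_years)
    treated_roe = sum(b >= first_roe_cohort for b in birth_years)
    return treated_repeal - treated_roe


# Net number of treated cohorts by year: rises, plateaus, then returns to zero.
profile = {year: net_treated_cohorts(year) for year in range(1985, 1995)}
for year, net in profile.items():
    print(year, net)
```

Under these assumptions the net count rises from one to three, holds at three, and then falls back to zero, which is the parabolic shape the hypothesis predicts for the DD coefficients.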

It is interesting that something so simple as a staggered policy rollout should provide two testable hypotheses that together can provide some insight into whether there is credibility to the negative selection in abortion legalization story. If we cannot find evidence for a negative parabola during this specific, narrow window, then the abortion legalization hypothesis has one more nail in its coffin.

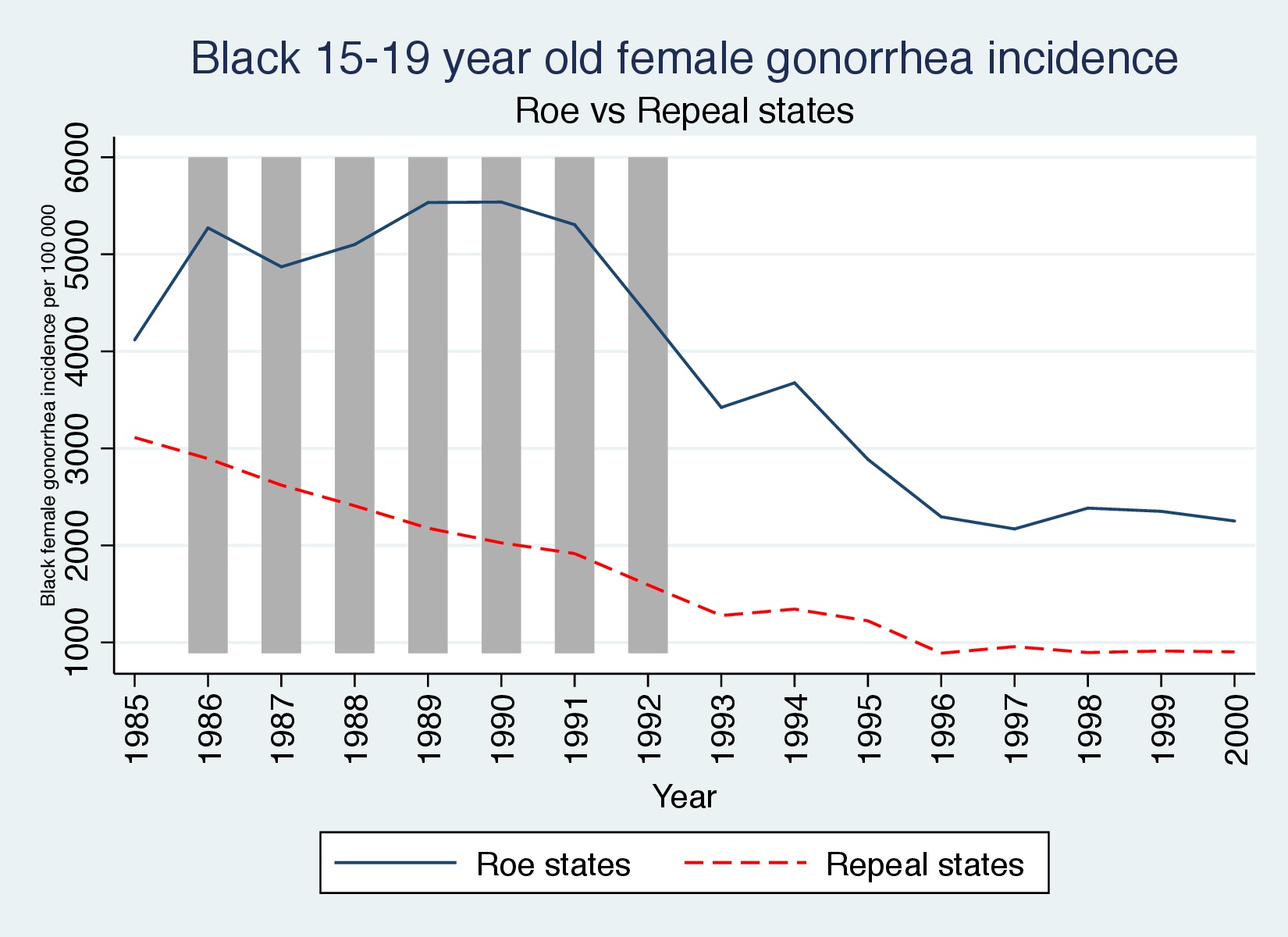

A simple graphic for gonorrhea incidence among black 15- to 19-year-olds can help illustrate our findings. Remember, a picture is worth a thousand words, and whether it’s RDD or DD, it’s helpful to show pictures like these to prepare the reader for the table after table of regression coefficients. So notice what the raw data looks like in Figure 9.10.

I have shaded the years corresponding to the window where we expect to find effects. In Figure 9.10, we see the dynamics that will ultimately be picked up in the regression coefficients: the Roe states experienced a large and sustained gonorrhea epidemic that only waned once the treated cohorts emerged and overtook the entire data series.

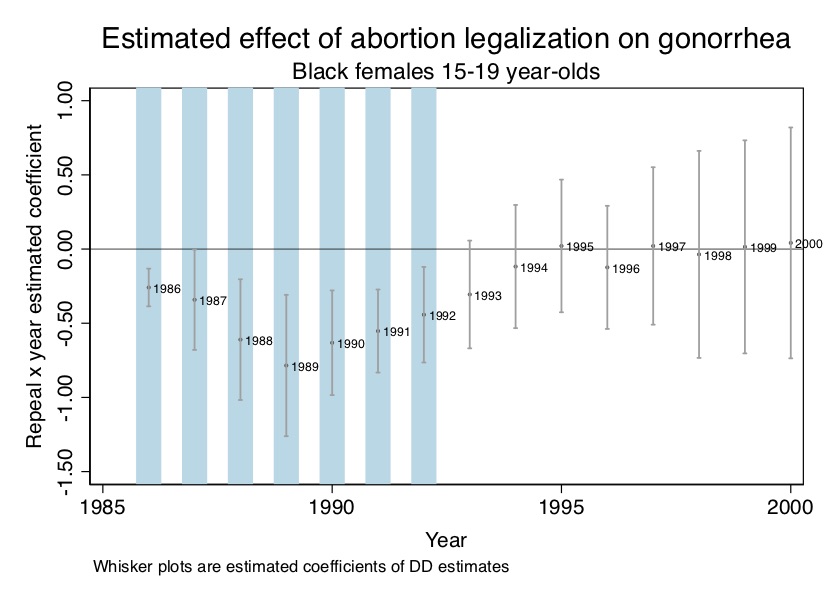

Now let’s look at regression coefficients. Our estimating equation is as follows: \[ Y_{st} = \beta_1 Repeal_s + \beta_2 DT_t + \beta_{3t} Repeal_s \times DT_t + X_{st} \psi + \alpha_{s} DS_s + \varepsilon_{st} \] where \(Y\) is the log number of new gonorrhea cases for 15- to 19-year-olds (per 100,000 of the population); \(Repeal_s\) equals 1 if the state legalized abortion prior to Roe; \(DT_t\) is a year dummy; \(DS_s\) is a state dummy; \(t\) is a time trend; \(X\) is a matrix of covariates. In the paper, I sometimes included state-specific linear trends, but for this analysis, I present the simpler model. Finally, \(\varepsilon_{st}\) is a structural error term assumed to be conditionally independent of the regressors. All standard errors, furthermore, were clustered at the state level allowing for arbitrary serial correlation.
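To build intuition for the \(\beta_{3t}\) coefficients, here is a minimal sketch of what each one measures in the simplest possible case. It assumes a balanced panel with no covariates and no weights, in which the OLS coefficient on each repeal-by-year interaction equals the repeal-minus-Roe gap in mean outcomes in year \(t\) minus the same gap in the omitted base year. The function name `dd_by_year` and all of the numbers are made up for illustration; they are not estimates from the abortion data.

```python
from statistics import mean


def dd_by_year(panel, base_year):
    """Difference-in-differences of group means, year by year.

    panel: list of (state, repeal, year, y) rows from a balanced panel.
    Returns {year: (repeal gap in that year) - (repeal gap in base_year)}.
    """
    def group_mean(repeal, year):
        return mean(y for (_, r, t, y) in panel if r == repeal and t == year)

    years = sorted({t for (_, _, t, _) in panel})
    base_gap = group_mean(1, base_year) - group_mean(0, base_year)
    return {t: (group_mean(1, t) - group_mean(0, t)) - base_gap
            for t in years if t != base_year}


# Toy panel (hypothetical values): states A and B repeal early, C and D do not.
panel = [
    ("A", 1, 1985, 5.0), ("B", 1, 1985, 6.0), ("C", 0, 1985, 4.0), ("D", 0, 1985, 5.0),
    ("A", 1, 1986, 5.0), ("B", 1, 1986, 6.0), ("C", 0, 1986, 4.5), ("D", 0, 1986, 5.5),
    ("A", 1, 1987, 5.0), ("B", 1, 1987, 6.0), ("C", 0, 1987, 5.0), ("D", 0, 1987, 6.0),
]

dd = dd_by_year(panel, base_year=1985)
print(dd)
```

The regression in this section adds covariates, population weights, and state fixed effects, so its coefficients will not reduce to simple differences in means, but the interpretation of each interaction term is the same.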

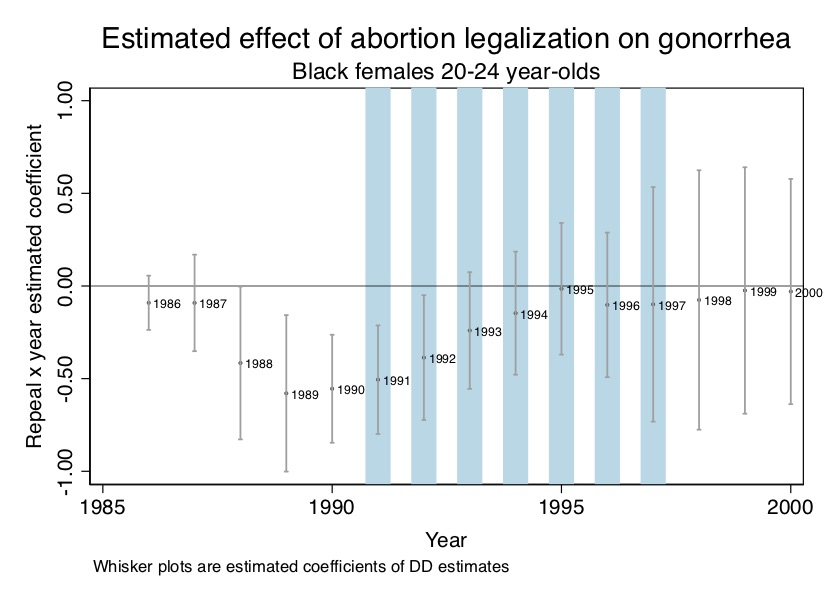

I present the plotted coefficients from this regression for simplicity (and because pictures can be so powerful) in Figure 9.11. As can be seen in Figure 9.11, there is a negative effect during the window where Roe has not fully caught up, and that negative effect forms a parabola—just as our theory predicted.

```stata
* DD estimate of 15-19 year olds in repeal states vs Roe states
use https://github.com/scunning1975/mixtape/raw/master/abortion.dta, clear
xi: reg lnr i.repeal*i.year i.fip acc ir pi alcohol crack poverty income ur if bf15==1 [aweight=totpop], cluster(fip)

* ssc install parmest, replace
* Save coefficients and 95% confidence limits to a separate dataset
parmest, label for(estimate min95 max95 %8.2f) li(parm label estimate min95 max95) saving(bf15_DD.dta, replace)

use ./bf15_DD.dta, clear

* Keep the 15 repeal x year interaction rows and label them 1986-2000
keep in 17/31
gen year = 1985 + _n
sort year

twoway (scatter estimate year, mlabel(year) mlabsize(vsmall) msize(tiny)) ///
    (rcap min95 max95 year, msize(vsmall)), ///
    ytitle(Repeal x year estimated coefficient) yscale(titlegap(2)) ///
    yline(0, lwidth(vvvthin) lcolor(black)) xtitle(Year) ///
    xline(1986 1987 1988 1989 1990 1991 1992, lwidth(vvvthick) lpattern(solid) lcolor(ltblue)) ///
    xscale(titlegap(2)) title(Estimated effect of abortion legalization on gonorrhea) ///
    subtitle(Black females 15-19 year-olds) ///
    note(Whisker plots are estimated coefficients of DD estimator from Column b of Table 2.) ///
    legend(off)
```

```r
#-- DD estimate of 15-19 year olds in repeal states vs Roe states
library(tidyverse)
library(haven)
library(estimatr)

read_data <- function(df)
{
  full_path <- paste("https://github.com/scunning1975/mixtape/raw/master/",
                     df, sep = "")
  df <- read_dta(full_path)
  return(df)
}

abortion <- read_data("abortion.dta") %>%
  mutate(
    repeal = as_factor(repeal),
    year   = as_factor(year),
    fip    = as_factor(fip),
    fa     = as_factor(fa)
  )

reg <- abortion %>%
  filter(bf15 == 1) %>%
  lm_robust(lnr ~ repeal*year + fip + acc + ir + pi + alcohol + crack + poverty + income + ur,
            data = ., weights = totpop, clusters = fip)

# Positions 76-90 hold the repeal x year interactions for 1986-2000
abortion_plot <- tibble(
  sd   = reg[[2]][76:90],  # standard errors
  mean = reg[[1]][76:90],  # coefficient estimates
  year = c(1986:2000))

abortion_plot %>%
  ggplot(aes(x = year, y = mean)) +
  geom_rect(aes(xmin=1986, xmax=1992, ymin=-Inf, ymax=Inf), fill = "cyan", alpha = 0.01)+
  geom_point()+
  geom_text(aes(label = year), hjust=-0.002, vjust = -0.03)+
  geom_hline(yintercept = 0) +
  geom_errorbar(aes(ymin = mean - sd*1.96, ymax = mean + sd*1.96), width = 0.2,
                position = position_dodge(0.05))
```

```python
import numpy as np
import pandas as pd
import statsmodels.api as sm
import statsmodels.formula.api as smf
from itertools import combinations
import plotnine as p

# read data
import ssl
ssl._create_default_https_context = ssl._create_unverified_context

def read_data(file):
    return pd.read_stata("https://github.com/scunning1975/mixtape/raw/master/" + file)

abortion = read_data('abortion.dta')
abortion = abortion[~pd.isnull(abortion.lnr)]
abortion_bf15 = abortion[abortion.bf15==1]

formula = (
    "lnr ~ C(repeal)*C(year) + C(fip)"
    " + acc + ir + pi + alcohol + crack + poverty + income + ur"
)

# Weighted least squares with standard errors clustered by state (fip)
reg = (
    smf
    .wls(formula, data=abortion_bf15, weights=abortion_bf15.totpop.values)
    .fit(
        cov_type='cluster',
        cov_kwds={'groups': abortion_bf15.fip.values},
        method='pinv')
)

reg.summary()

# Collect the repeal x year interaction estimates for 1986-2000
abortion_plot = pd.DataFrame(
    {
        'sd': reg.bse['C(repeal)[T.1.0]:C(year)[T.1986.0]':'C(repeal)[T.1.0]:C(year)[T.2000.0]'],
        'mean': reg.params['C(repeal)[T.1.0]:C(year)[T.1986.0]':'C(repeal)[T.1.0]:C(year)[T.2000.0]'],
        'year': np.arange(1986, 2001)
    })

abortion_plot['lb'] = abortion_plot['mean'] - abortion_plot['sd']*1.96
abortion_plot['ub'] = abortion_plot['mean'] + abortion_plot['sd']*1.96

(
    p.ggplot(abortion_plot, p.aes(x = 'year', y = 'mean')) +
    # shaded window 1986-1992, matching the text and the R version
    p.geom_rect(p.aes(xmin=1986, xmax=1992, ymin=-np.inf, ymax=np.inf), fill="cyan", alpha = 0.01) +
    p.geom_point() +
    p.geom_text(p.aes(label = 'year'), ha='right') +
    p.geom_hline(yintercept = 0) +
    p.geom_errorbar(p.aes(ymin = 'lb', ymax = 'ub'), width = 0.2,
                    position = p.position_dodge(0.05)) +
    p.labs(title= "Estimated effect of abortion legalization on gonorrhea")
)
```
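Pulling the interaction terms out by row position or by a label slice works for this exact specification but can silently select the wrong rows if a covariate is added or dropped. As a hedged alternative, the sketch below selects the repeal-by-year terms by matching their labels and builds the same 1.96-standard-error intervals. The helper name `interaction_cis` is hypothetical, the label pattern is assumed from the labels used in the Python code above, and the numbers are placeholders rather than estimates.

```python
import re

# Assumed label format, e.g. "C(repeal)[T.1.0]:C(year)[T.1986.0]"
INTERACTION = re.compile(
    r"C\(repeal\)\[T\.1(?:\.0)?\]:C\(year\)\[T\.(\d{4})(?:\.0)?\]"
)


def interaction_cis(params, ses, z=1.96):
    """Return sorted (year, estimate, lower, upper) tuples for every
    repeal x year interaction found in the coefficient labels."""
    rows = []
    for name, estimate in params.items():
        match = INTERACTION.fullmatch(name)
        if match:
            se = ses[name]
            rows.append((int(match.group(1)), estimate,
                         estimate - z * se, estimate + z * se))
    return sorted(rows)


# Placeholder coefficients and standard errors, not real results.
params = {
    "Intercept": 1.0,
    "C(repeal)[T.1.0]:C(year)[T.1987.0]": -1.0,
    "C(repeal)[T.1.0]:C(year)[T.1986.0]": -0.5,
    "acc": 0.1,
}
ses = {name: 0.25 for name in params}

rows = interaction_cis(params, ses)
for year, estimate, lower, upper in rows:
    print(year, estimate, round(lower, 2), round(upper, 2))
```

With a fitted statsmodels result, the two dictionaries could be obtained with `reg.params.to_dict()` and `reg.bse.to_dict()`.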

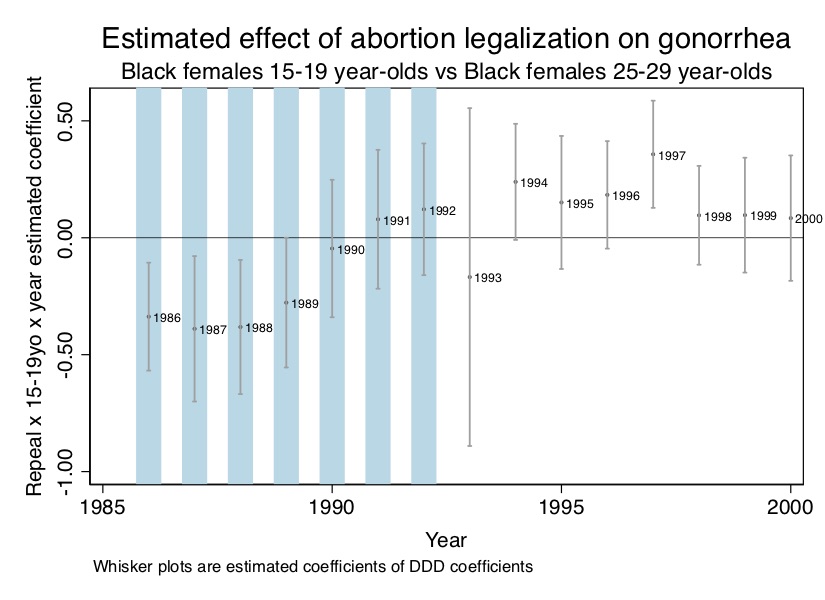

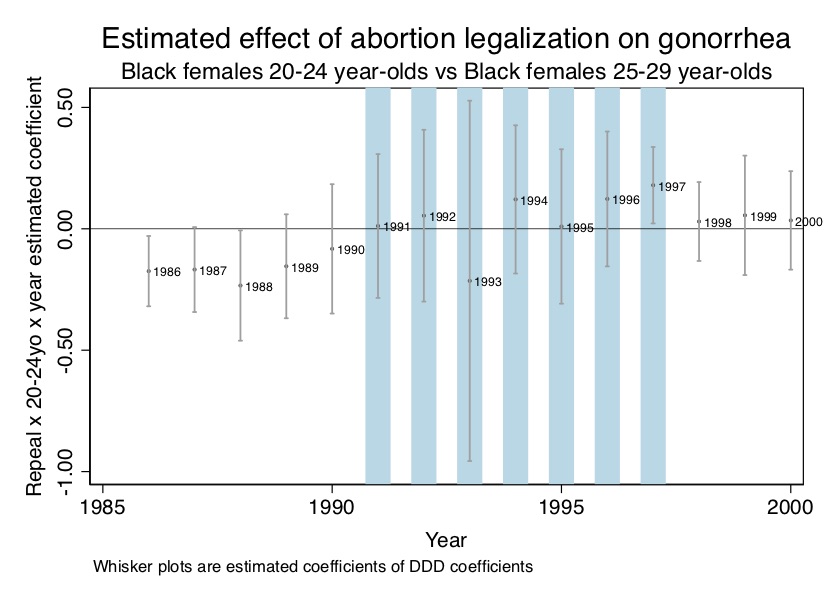

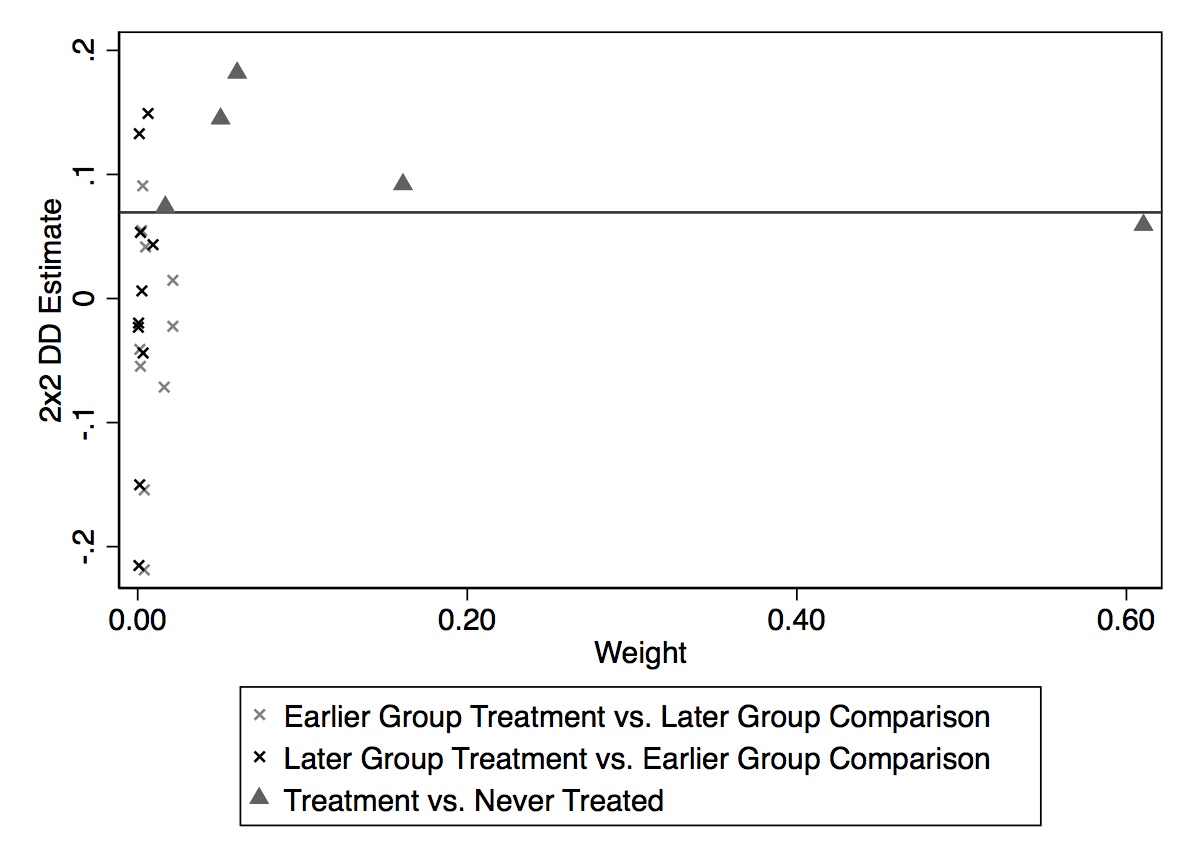

Now, a lot of people might be done, but if you are reading this book, you have revealed that you are not like a lot of people. Credibly identifying causal effects requires both finding effects and ruling out alternative explanations. This is necessary because the fundamental problem of causal inference keeps us blind to the truth. But one way to alleviate some of that doubt is through rigorous placebo analysis. Here I present evidence from a triple difference in which an untreated cohort is used as a within-state control.