Causal Inference:

The Mixtape.

Buy the print version today:

Buy the print version today:

\[ % Define terms \newcommand{\Card}{\text{Card }} \DeclareMathOperator*{\cov}{cov} \DeclareMathOperator*{\var}{var} \DeclareMathOperator{\Var}{Var\,} \DeclareMathOperator{\Cov}{Cov\,} \DeclareMathOperator{\Prob}{Prob} \DeclareMathOperator{\Pr}{Pr} \newcommand{\independent}{\perp \!\!\! \perp} \DeclareMathOperator{\Post}{Post} \DeclareMathOperator{\Pre}{Pre} \DeclareMathOperator{\Mid}{\,\vert\,} \DeclareMathOperator{\post}{post} \DeclareMathOperator{\pre}{pre} \]

In practice, causal inference is based on statistical models that range from the very simple to extremely advanced. And building such models requires some rudimentary knowledge of probability theory, so let’s begin with some definitions. A random process is a process that can be repeated many times with different outcomes each time. The sample space is the set of all the possible outcomes of a random process. We distinguish between discrete and continuous random processes Table 1 below. Discrete processes produce, integers, whereas continuous processes produce fractions as well.

| Description | Type | Potential outcomes |

|---|---|---|

| 12-sided die | Discrete | 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12 |

| Coin | Discrete | Heads, Tails |

| Deck of cards | Discrete | 2 \(\diamondsuit\), 3 \(\diamondsuit\), …King \(\heartsuit\), Ace \(\heartsuit\) |

| Gas prices | Continuous | \(P\geq 0\) |

We define independent events two ways. The first refers to logical independence. For instance, two events occur but there is no reason to believe that the two events affect each other. When it is assumed that they do affect each other, this is a logical fallacy called post hoc ergo propter hoc, which is Latin for “after this, therefore because of this.” This fallacy recognizes that the temporal ordering of events is not sufficient to be able to say that the first thing caused the second.

The second definition of an independent event is statistical independence. We’ll illustrate the latter with an example from the idea of sampling with and without replacement. Let’s use a randomly shuffled deck of cards for an example. For a deck of 52 cards, what is the probability that the first card will be an ace? \[ \Pr(\text{Ace}) =\dfrac{\text{Count Aces}}{\text{Sample Space}}=\dfrac{4}{52}= \dfrac{1}{13}=0.077 \] There are 52 possible outcomes in the sample space, or the set of all possible outcomes of the random process. Of those 52 possible outcomes, we are concerned with the frequency of an ace occurring. There are four aces in the deck, so \(\dfrac{4}{52}=0.077\).

Assume that the first card was an ace. Now we ask the question again. If we shuffle the deck, what is the probability the next card drawn is also an ace? It is no longer \(\dfrac{1}{13}\) because we did not sample with replacement. We sampled without replacement. Thus the new probability is \[ \Pr\Big(\text{Ace}\mid\text{Card } 1 =\text{Ace}\Big) = \dfrac{3}{51}= 0.059 \] Under sampling without replacement, the two events—ace on \(\text{Card } 1\) and an ace on \(\text{Card } 2\) if \(\text{Card } 1\) was an ace—aren’t independent events. To make the two events independent, you would have to put the ace back and shuffle the deck. So two events, \(A\) and \(B\), are independent if and only if: \[ \Pr(A\mid B)=\Pr(A) \] An example of two independent events would be rolling a 5 with one die after having rolled a 3 with another die. The two events are independent, so the probability of rolling a 5 is always 0.17 regardless of what we rolled on the first die.1

But what if we want to know the probability of some event occurring that requires that multiple events first to occur? For instance, let’s say we’re talking about the Cleveland Cavaliers winning the NBA championship. In 2016, the Golden State Warriors were 3–1 in a best-of-seven playoff. What had to happen for the Warriors to lose the playoff? The Cavaliers had to win three in a row. In this instance, to find the probability, we have to take the product of all marginal probabilities, or \(\Pr(\cdot)^n\), where \(\Pr(\cdot)\) is the marginal probability of one event occurring, and \(n\) is the number of repetitions of that one event. If the unconditional probability of a Cleveland win is 0.5, and each game is independent, then the probability that Cleveland could come back from a 3–1 deficit is the product of each game’s probability of winning: \[ \text{Win probability} =\Pr\big(W,W,W\big)= (0.5)^3= 0.125 \] Another example may be helpful. In Texas Hold’em poker, each player is dealt two cards facedown. When you are holding two of a kind, you say you have two “in the pocket.” So, what is the probability of being dealt pocket aces? It’s \(\dfrac{4}{52}\times\dfrac{3}{51}=0.0045\). That’s right: it’s \(0.45\%\).

Let’s formalize what we’ve been saying for a more generalized case. For independent events, to calculate joint probabilities, we multiply the marginal probabilities: \[ \Pr(A,B)=\Pr(A)\Pr(B) \] where \(\Pr(A,B)\) is the joint probability of both \(A\) and \(B\) occurring, and \(\Pr(A)\) is the marginal probability of \(A\) event occurring.

Now, for a slightly more difficult application. What is the probability of rolling a 7 using two six-sided dice, and is it the same as the probability of rolling a 3? To answer this, let’s compare the two probabilities. We’ll use a table to help explain the intuition. First, let’s look at all the ways to get a 7 using two six-sided dice. There are 36 total possible outcomes \((6^2=36)\) when rolling two dice. In Table 2.2 we see that there are six different ways to roll a 7 using only two dice. So the probability of rolling a 7 is \(6/36=16.67\)%. Next, let’s look at all the ways to roll a 3 using two six-sided dice. Table 2.3 shows that there are only two ways to get a 3 rolling two six-sided dice. So the probability of rolling a 3 is \(2/36=5.56\)%. So, no, the probabilities of rolling a 7 and rolling a 3 are different.

| Die 1 | Die 2 | Outcome |

|---|---|---|

| 1 | 6 | 7 |

| 2 | 5 | 7 |

| 3 | 4 | 7 |

| 4 | 3 | 7 |

| 5 | 2 | 7 |

| 6 | 1 | 7 |

| Die 1 | Die 2 | Outcome |

|---|---|---|

| 1 | 2 | 3 |

| 2 | 1 | 3 |

First, before we talk about the three ways of representing a probability, I’d like to introduce some new terminology and concepts: events and conditional probabilities. Let \(A\) be some event. And let \(B\) be some other event. For two events, there are four possibilities.

A and B: Both A and B occur.

\(\sim\) A and B: A does not occur, but B occurs.

A and \(\sim\) B: A occurs, but B does not occur.

\(\sim\) A and \(\sim\) B: Neither A nor B occurs.

I’ll use a couple of different examples to illustrate how to represent a probability.

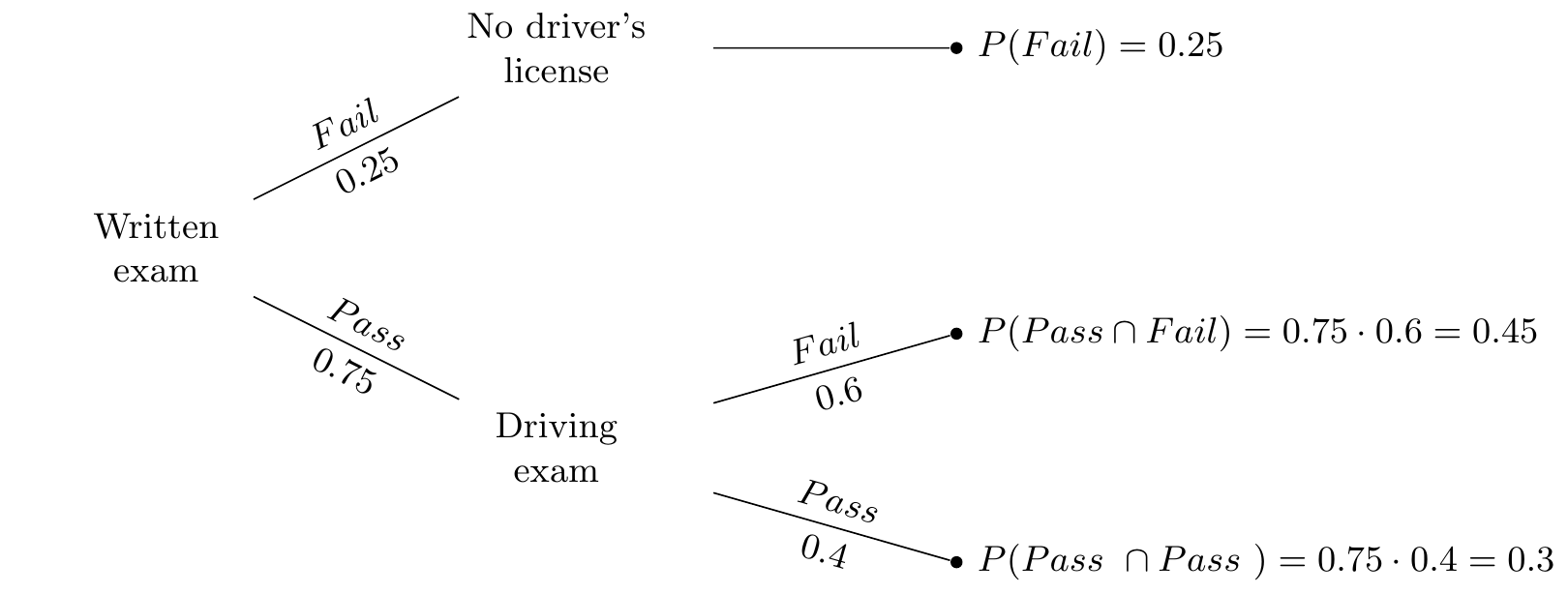

Let’s think about a situation in which you are trying to get your driver’s license. Suppose that in order to get a driver’s license, you have to pass the written exam and the driving exam. However, if you fail the written exam, you’re not allowed to take the driving exam. We can represent these two events in a probability tree.

Probability trees are intuitive and easy to interpret.2 First, we see that the probability of passing the written exam is 0.75 and the probability of failing the exam is 0.25. Second, at every branching off from a node, we can further see that the probabilities associated with a given branch are summing to 1.0. The joint probabilities are also all summing to 1.0. This is called the law of total probability and it is equal to the sum of all joint probability of A and B\(_n\) events occurring: \[ \Pr(A)=\sum_n Pr(A\cap B_n) \]

We also see the concept of a conditional probability in the driver’s license tree. For instance, the probability of failing the driving exam, conditional on having passed the written exam, is represented as \(\Pr(\text{Fail} \mid \text{Pass})= 0.45/0.75 = 0.6\).

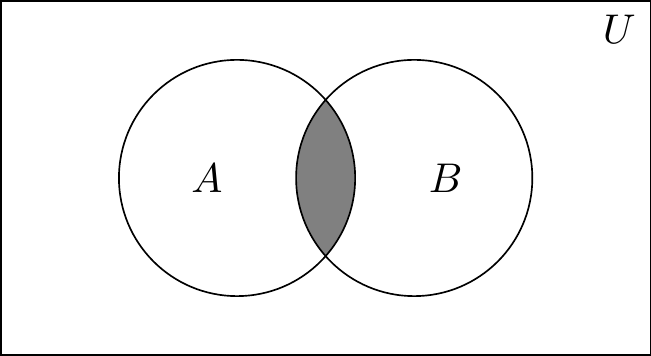

A second way to represent multiple events occurring is with a Venn diagram. Venn diagrams were first conceived by John Venn in 1880. They are used to teach elementary set theory, as well as to express set relationships in probability and statistics. This example will involve two sets, \(A\) and \(B\).

The University of Texas’s football coach has been on the razor’s edge with the athletic director and regents all season. After several mediocre seasons, his future with the school is in jeopardy. If the Longhorns don’t make it to a great bowl game, he likely won’t be rehired. But if they do, then he likely will be rehired. Let’s discuss elementary set theory using this coach’s situation as our guiding example. But before we do, let’s remind ourselves of our terms. \(A\) and \(B\) are events, and \(U\) is the universal set of which \(A\) and \(B\) are subsets. Let \(A\) be the probability that the Longhorns get invited to a great bowl game and \(B\) be the probability that their coach is rehired. Let \(\Pr(A)=0.6\) and let \(\Pr(B)=0.8\). Let the probability that both \(A\) and \(B\) occur be \(\Pr(A,B)=0.5\).

Note, that \(A+\sim A=U\), where \(\sim A\) is the complement of \(A\). The complement means that it is everything in the universal set that is not A. The same is said of B. The sum of \(B\) and \(\sim B=U\). Therefore: \[ A+{\sim} A=B+ {\sim} B \]

We can rewrite out the following definitions:

\[\begin{align} A & = B+{\sim} B - {\sim} A \\ B & = A+{\sim} A - {\sim} B \end{align}\]

Whenever we want to describe a set of events in which either \(A\) or \(B\) could occur, it is: \(A \cup B\). And this is pronounced “\(A\) union \(B\),” which means it is the new set that contains every element from \(A\) and every element from \(B\). Any element that is in either set \(A\) or set \(B\), then, is also in the new union set. And whenever we want to describe a set of events that occurred together—the joint set—it’s \(A \cap B\), which is pronounced “\(A\) intersect \(B\).” This new set contains every element that is in both the \(A\) and \(B\) sets. That is, only things inside both \(A\) and \(B\) get added to the new set.

Now let’s look closely at a relationship involving the set A.

\[\begin{align} A=A \cap B+A \cap{\sim} B \end{align}\]

Notice what this is saying: there are two ways to identify the \(A\) set. First, you can look at all the instances where \(A\) occurs with \(B\). But then what about the rest of \(A\) that is not in \(B\)? Well, that’s the \(A \cap \sim B\) situation, which covers the rest of the \(A\) set.

A similar style of reasoning can help you understand the following expression. \[ A \cup B=A \cap{\sim}B+\sim A \cap B+A \cap B \] To get \(A\) union \(B\), we need three objects: the set of \(A\) units outside of \(B\), the set of \(B\) units outside \(A\), and their joint set. To get \(A \cup B\), just rearrange the previous equation.

Now it is just simple addition to find all missing values. Recall that \(A\) is your team making playoffs and \(\Pr(A)=0.6\). And \(B\) is the probability that the coach is rehired, \(\Pr(B)=0.8\). Also, \(\Pr(A,B)=0.5\), which is the probability of both \(A\) and \(B\) occurring. Then we have:

\[\begin{align} A & = A \cap B+A \cap{\sim}B \\ A \cap{\sim}B & = A - A \cap B \\ \Pr(A, \sim B) & = \Pr(A) - Pr(A,B) \\ \Pr(A,\sim B) & = 0.6 - 0.5 \\ \Pr(A,\sim B) & = 0.1 \end{align}\]

When working with sets, it is important to understand that probability is calculated by considering the share of the set (for example \(A\)) made up by the subset (for example \(A \cap B\)). When we write down that the probability that \(A \cap B\) occurs at all, it is with regards to \(U\). But what if we were to ask the question “What share of A is due to \(A \cap B\)?” Notice, then, that we would need to do this:

\[\begin{align} ? & = A \cap B \div A \\ ? & = 0.5 \div 0.6 \\ ? & = 0.83 \end{align}\]

I left this intentionally undefined on the left side so as to focus on the calculation itself. But now let’s define what we are wanting to calculate: In a world where \(A\) has occurred, what is the probability that \(B\) will also occur? This is:

\[\begin{align} \Pr(B \mid A) & = \dfrac{\Pr(A,B)}{\Pr(A)}= \dfrac{0.5}{0.6}=0.83 \\ \Pr(A \mid B) & = \dfrac{\Pr(A,B)}{\Pr(B)}= \dfrac{0.5}{0.8}=0.63 \end{align}\] Notice, these conditional probabilities are not as easy to see in the Venn diagram. We are essentially asking what percentage of a subset—e.g., \(\Pr(A)\)—is due to the joint set, for example, \(\Pr(A,B)\). This reasoning is the very same reasoning used to define the concept of a conditional probability.

Another way that we can represent events is with a contingency table. Contingency tables are also sometimes called twoway tables. Table 2.4 is an example of a contingency table. We continue with our example about the worried Texas coach.

| Event labels | Coach is not rehired \((\sim B)\) | Coach is rehired \((B)\) | Total |

|---|---|---|---|

| \((A)\) Bowl game | \(\Pr(A,\sim B)\)=0.1 | \(\Pr(A,B)\)=0.5 | \(\Pr(A)\)=0.6 |

| \((\sim A)\) no Bowl game | \(\Pr(\sim A, \sim B)=0.1\) | \(\Pr(\sim A,B)=0.3\) | \(\Pr(\sim A)=0.4\) |

| Total | \(\Pr(\sim B)=0.2\) | \(\Pr(B)=0.8\) | 1.0 |

Recall that \(\Pr(A)=0.6\), \(\Pr(B)=0.8\), and \(Pr(A,B)=0.5\). Note that to calculate conditional probabilities, we must know the frequency of the element in question (e.g., \(\Pr(A,B)\)) relative to some other larger event (e.g., \(\Pr(A)\)). So if we want to know what the conditional probability of \(B\) is given \(A\), then it’s: \[ \Pr(B\mid A)=\dfrac{\Pr(A,B)}{\Pr(A)}=\dfrac{0.5}{0.6}=0.83 \] But note that knowing the frequency of \(A\cap B\) in a world where \(B\) occurs is to ask the following: \[ \Pr(A\mid B)=\dfrac{\Pr(A,B)}{\Pr(B)}= \dfrac{0.5}{0.8}=0.63 \]

So, we can use what we have done so far to write out a definition of joint probability. Let’s start with a definition of conditional probability first. Given two events, \(A\) and \(B\): \[\begin{align} \Pr(A\mid B) &= \frac{\Pr(A,B)}{\Pr(B)} \\ \Pr(B\mid A) &= \frac{\Pr(B,A)}{\Pr(A)} \\ \Pr(A,B) &= \Pr(B,A) \\ \Pr(A) &= \Pr(A,\sim B)+\Pr(A,B) \\ \Pr(B) &= \Pr(A,B)+\Pr(\sim A, B) \end{align}\]

Using equations (1) and (2), I can simply write down a definition of joint probabilities.

\[ \Pr(A,B) = \Pr(A \mid B) \Pr(B) \tag{2.1}\]

\[ \Pr(B,A) = \Pr(B \mid A) \Pr(A) \tag{2.2}\]

And this is the formula for joint probability. Given equation (3), and using the definitions of \((\Pr(A,B)\) and \(\Pr(B,A))\), I can also rearrange terms, make a substitution, and rewrite it as:

\[ \Pr(A\mid B)\Pr(B) = \Pr(B\mid A)\Pr(A) \]

\[ \Pr(A\mid B) = \frac{\Pr(B\mid A) \Pr(A)}{\Pr(B)} \tag{2.3}\]

Equation 2.3 is sometimes called the naive version of Bayes’s rule. We will now decompose this equation more fully, though, by substituting equation (5) into Equation 2.3.

\[ \Pr(A\mid B) = \frac{\Pr(B\mid A)\Pr(A)}{\Pr(A,B) + \Pr(\sim A,B)} \tag{2.4}\]

Substituting Equation 2.1 into the denominator for Equation 2.4 yields:

\[ \Pr(A\mid B)=\frac{\Pr(B\mid A)\Pr(A)}{\Pr(B\mid A)\Pr(A)+\Pr(\sim A, B)} \tag{2.5}\] Finally, we note that using the definition of joint probability, that \(\Pr(B,\sim A)= \Pr(B\mid\sim A)\Pr(\sim A)\), which we substitute into the denominator of Equation 2.5 to get:

\[ \Pr(A\mid B)=\frac{\Pr(B\mid A)\Pr(A)}{\Pr(B\mid A)\Pr(A)+\Pr(B\mid \sim A)\Pr(\sim A)} \tag{2.6}\]

That’s a mouthful of substitutions, so what does Equation 2.6 mean? This is the Bayesian decomposition version of Bayes’s rule. Let’s use our example again of Texas making a great bowl game. \(A\) is Texas making a great bowl game, and \(B\) is the coach getting rehired. And \(A\cup B\) is the joint probability that both events occur. We can make each calculation using the contingency tables. The questions here is this: If the Texas coach is rehired, what’s the probability that the Longhorns made a great bowl game? Or formally, \(\Pr(A\mid B)\). We can use the Bayesian decomposition to find this probability.

\[\begin{align} \Pr(A\mid B) & = \dfrac{\Pr(B\mid A)\Pr(A)}{\Pr(B\mid A)\Pr(A)+\Pr(B\mid \sim A)\Pr(\sim A)} \\ & =\dfrac{0.83\cdot 0.6}{0.83\cdot 0.6+0.75\cdot 0.4} \\ & =\dfrac{0.498}{0.498+0.3} \\ & =\dfrac{0.498}{0.798} \\ \Pr(A\mid B) & =0.624 \end{align}\] Check this against the contingency table using the definition of joint probability:

\[\begin{align} \Pr(A\mid B)=\dfrac{\Pr(A,B)}{\Pr(B)}= \dfrac{0.5}{0.8}=0.625 \end{align}\] So, if the coach is rehired, there is a 63 percent chance we made a great bowl game.3

Let’s use a different example, the Monty Hall example. This is a fun one, because most people find itcounterintuitive. It even is used to stump mathematicians and statisticians.4 But Bayes’s rule makes the answer very clear—so clear, in fact, that it’s somewhat surprising that Bayes’s rule was actually once controversial (Mcgrayne 2012).

Let’s assume three closed doors: door 1 \((D_1)\), door 2 \((D_2)\), and door 3 \((D_3)\). Behind one of the doors is a million dollars. Behind each of the other two doors is a goat. Monty Hall, the game-show host in this example, asks the contestants to pick a door. After they pick the door, but before he opens the door they picked, he opens one of the other doors to reveal a goat. He then asks the contestant, “Would you like to switch doors?”

A common response to Monty Hall’s offer is to say it makes no sense to change doors, because there’s an equal chance that the million dollars is behind either door. Therefore, why switch? There’s a 50–50 chance it’s behind the door picked and there’s a 50–50 chance it’s behind the remaining door, so it makes no rational sense to switch. Right? Yet, a little intuition should tell you that’s not the right answer, because it would seem that when Monty Hall opened that third door, he made a statement. But what exactly did he say?

Let’s formalize the problem using our probability notation. Assume that you chose door 1, \(D_1\). The probability that \(D_1\) had a million dollars when you made that choice is \(\Pr(D_1=1 \text{ million})=\dfrac{1}{3}\). We will call that event \(A_1\). And the probability that \(D_1\) has a million dollars at the start of the game is \(\dfrac{1}{3}\) because the sample space is 3 doors, of which one has a million dollars behind it. Thus, \(\Pr(A_1)=\dfrac{1}{3}\). Also, by the law of total probability, \(\Pr(\sim A_1)=\dfrac{2}{3}\). Let’s say that Monty Hall had opened door 2, \(D_2\), to reveal a goat. Then he asked, “Would you like to change to door number 3?”

We need to know the probability that door 3 has the million dollars and compare that to Door 1’s probability. We will call the opening of door 2 event \(B\). We will call the probability that the million dollars is behind door \(i\), \(A_i\). We now write out the question just asked formally and decompose it using the Bayesian decomposition. We are ultimately interested in knowing what the probability is that door 1 has a million dollars (event \(A_1\)) given that Monty Hall opened door 2 (event \(B\)), which is a conditional probability question. Let’s write out that conditional probability using the Bayesian decomposition from Equation 2.6.

\[ \Pr(A_1 \mid B) = \dfrac{\Pr(B\mid A_1) \Pr(A_1)}{\Pr(B\mid A_1) \Pr(A_1)+\Pr(B\mid A_2) \Pr(A_2)+\Pr(B\mid A_3) \Pr(A_3)} \tag{2.7}\]

There are basically two kinds of probabilities on the right side of the equation. There’s the marginal probability that the million dollars is behind a given door, \(\Pr(A_i)\). And there’s the conditional probability that Monty Hall would open door 2 given that the million dollars is behind door \(A_i\), \(\Pr(B\mid A_i)\).

The marginal probability that door \(i\) has the million dollars behind it without our having any additional information is \(\dfrac{1}{3}\). We call this the prior probability, or prior belief. It may also be called the unconditional probability.

The conditional probability, \(\Pr(B|A_i)\), requires a little more careful thinking. Take the first conditional probability, \(\Pr(B\mid A_1)\). If door 1 has the million dollars behind it, what’s the probability that Monty Hall would open door 2?

Let’s think about the second conditional probability: \(\Pr(B\mid A_2)\). If the money is behind door 2, what’s the probability that Monty Hall would open door 2?

And then the last conditional probability, \(\Pr(B\mid A_3)\). In a world where the money is behind door 3, what’s the probability Monty Hall will open door 2?

Each of these conditional probabilities requires thinking carefully about the feasibility of the events in question. Let’s examine the easiest question: \(\Pr(B\mid A_2)\). If the money is behind door 2, how likely is it for Monty Hall to open that same door, door 2? Keep in mind: this is a game show. So that gives you some idea about how the game-show host will behave. Do you think Monty Hall would open a door that had the million dollars behind it? It makes no sense to think he’d ever open a door that actually had the money behind it—he will always open a door with a goat. So don’t you think he’s only opening doors with goats? Let’s see what happens if take that intuition to its logical extreme and conclude that Monty Hall never opens a door if it has a million dollars. He only opens a door if the door has a goat. Under that assumption, we can proceed to estimate \(\Pr(A_1\mid B)\) by substituting values for \(\Pr(B\mid A_i)\) and \(\Pr(A_i)\) into the right side of Equation 2.7.

What then is \(\Pr(B\mid A_1)\)? That is, in a world where you have chosen door 1, and the money is behind door 1, what is the probability that he would open door 2? There are two doors he could open if the money is behind door 1—he could open either door 2 or door 3, as both have a goat behind them. So \(\Pr(B\mid A_1)=0.5\).

What about the second conditional probability, \(\Pr(B\mid A_2)\)? If the money is behind door 2, what’s the probability he will open it? Under our assumption that he never opens the door if it has a million dollars, we know this probability is 0.0. And finally, what about the third probability, \(\Pr(B\mid A_3)\)? What is the probability he opens door 2 given that the money is behind door 3? Now consider this one carefully—the contestant has already chosen door 1, so he can’t open that one. And he can’t open door 3, because that has the money behind it. The only door, therefore, he could open is door 2. Thus, this probability is 1.0. Furthermore, all marginal probabilities, \(\Pr(A_i)\), equal 1/3, allowing us to solve for the conditional probability on the left side through substitution, multiplication, and division.

\[\begin{align} \Pr(A_1\mid B) & = \dfrac{\dfrac{1}{2}\cdot \dfrac{1}{3}}{\dfrac{1}{2}\cdot \dfrac{1}{3}+0\cdot\dfrac{1}{3}+1.0 \cdot \dfrac{1}{3}} \\ & =\dfrac{\dfrac{1}{6}}{\dfrac{1}{6}+\dfrac{2}{6}} \\ & = \dfrac{1}{3} \end{align}\] Aha. Now isn’t that just a little bit surprising? The probability that the contestant chose the correct door is \(\dfrac{1}{3}\), just as it was before Monty Hall opened door 2.

But what about the probability that door 3, the door you’re holding, has the million dollars? Have your beliefs about that likelihood changed now that door 2 has been removed from the equation? Let’s crank through our Bayesian decomposition and see whether we learned anything.

\[\begin{align} \Pr(A_3\mid B) & = \dfrac{ \Pr(B\mid A_3)\Pr(A_3) }{ \Pr(B\mid A_3)\Pr(A_3)+\Pr(B\mid A_2)\Pr(A_2)+ \Pr(B\mid A_1)\Pr(A_1) } \\ & = \dfrac{ 1.0 \cdot \dfrac{1}{3} }{ 1.0 \cdot \dfrac{1}{3}+0 \cdot \dfrac{1}{3}+\dfrac{1}{2} \cdot \dfrac{1}{3}} \\ & = \dfrac{2}{3} \end{align}\]

Interestingly, while your beliefs about the door you originally chose haven’t changed, your beliefs about the other door have changed. The prior probability, \(\Pr(A_3)=\dfrac{1}{3}\), increased through a process called updating to a new probability of \(\Pr(A_3\mid B)=\dfrac{2}{3}\). This new conditional probability is called the posterior probability, or posterior belief. And it simply means that having witnessed \(B\), you learned information that allowed you to form a new belief about which door the money might be behind.

As was mentioned in footnote 14 regarding the controversy around vos Sant’s correct reasoning about the need to switch doors, deductions based on Bayes’s rule are often surprising even to smart people—probably because we lack coherent ways to correctly incorporate information into probabilities. Bayes’s rule shows us how to do that in a way that is logical and accurate. But besides being insightful, Bayes’s rule also opens the door for a different kind of reasoning about cause and effect. Whereas most of this book has to do with estimating effects from known causes, Bayes’s rule reminds us that we can form reasonable beliefs about causes from known effects.

The tools we use to reason about causality rest atop a bedrock of probabilities. We are often working with mathematical tools and concepts from statistics such as expectations and probabilities. One of the most common tools we will use in this book is the linear regression model, but before we can dive into that, we have to build out some simple notation.5 We’ll begin with the summation operator. The Greek letter \(\Sigma\) (the capital Sigma) denotes the summation operator. Let \(x_1, x_2, \ldots, x_n\) be a sequence of numbers. We can compactly write a sum of numbers using the summation operator as:

\[\begin{align} \sum_{i=1}^nx_i \equiv x_1+x_2+\ldots+x_n \end{align}\] The letter \(i\) is called the index of summation. Other letters, such as \(j\) or \(k\), are sometimes used as indices of summation. The subscript variable simply represents a specific value of a random variable, \(x\). The numbers 1 and \(n\) are the lower limit and the upper limit, respectively, of the summation. The expression \(\Sigma_{i=1}^nx_i\) can be stated in words as “sum the numbers \(x_i\) for all values of \(i\) from 1 to \(n\).” An example can help clarify:

\[\begin{align} \sum_{i=6}^9 x_i= x_6+x_7+x_8+x_9 \end{align}\] The summation operator has three properties. The first property is called the constant rule. Formally, it is:

For any constant \(c\) \[ \sum_{i=1}^n c = nc \] Let’s consider an example. Say that we are given: \[ \sum_{i=1}^3 5=(5+5+5) = 3 \cdot 5=15 \] A second property of the summation operator is: \[ \sum_{i=1}^n c x_i = c\sum_{i=1}^nx_i \] Again let’s use an example. Say we are given:

\[\begin{align} \sum_{i=1}^3 5x_i & =5x_1+5x_2+5x_3 \\ & =5 (x_1+x_2+x_3) \\ & =5\sum_{i=1}^3x_i \end{align}\] We can apply both of these properties to get the following third property:

\[\begin{align} \text{For any constant $a$ and $b$:}\quad \sum_{i=1}^n(ax_i+by_i) =a\sum_{i=1}^n x_i + b\sum_{j=1}^n y_i \end{align}\] Before leaving the summation operator, it is useful to also note things which are not properties of this operator. First, the summation of a ratio is not the ratio of the summations themselves.

\[\begin{align} \sum_i^n \dfrac{x_i}{y_i} \ne \dfrac{ \sum_{i=1}^n x_i}{\sum_{i=1}^ny_i} \end{align}\] Second, the summation of some squared variable is not equal to the squaring of its summation.

\[\begin{align} \sum_{i=1}^nx_i^2 \ne \bigg(\sum_{i=1}^nx_i \bigg)^2 \end{align}\]

We can use the summation indicator to make a number of calculations, some of which we will do repeatedly over the course of this book. For instance, we can use the summation operator to calculate the average: \[\begin{align} \overline{x} & = \dfrac{1}{n} \sum_{i=1}^n x_i \\ & =\dfrac{x_1+x_2+\dots+x_n}{n} \end{align}\] where \(\overline{x}\) is the average (mean) of the random variable \(x_i\). Another calculation we can make is a random variable’s deviations from its own mean. The sum of the deviations from the mean is always equal to 0: \[ \sum_{i=1}^n (x_i - \overline{x})=0 \] You can see this in Table 2.5.

| \(x\) | \(x-\overline{x}\) |

|---|---|

| 10 | 2 |

| 4 | \(-4\) |

| 13 | 5 |

| 5 | \(-3\) |

| Mean=8 | Sum=0 |

Consider a sequence of two numbers {\(y_1, y_2, \ldots, y_n\)} and {\(x_1, x_2, \ldots, x_n\)}. Now we can consider double summations over possible values of \(x\)’s and \(y\)’s. For example, consider the case where \(n=m=2\). Then, \(\sum_{i=1}^2\sum_{j=1}^2x_iy_j\) is equal to \(x_1y_1+x_1y_2+x_2y_1+x_2y_2\). This is because

\[\begin{align} x_1y_1+x_1y_2+x_2y_1+x_2y_2 & = x_1(y_1+y_2)+x_2(y_1+y_2) \\ & = \sum_{i=1}^2x_i(y_1+y_2) \\ & = \sum_{i=1}^2x_i \bigg( \sum_{j=1}^2y_j \bigg) \\ & = \sum_{i=1}^2 \bigg( \sum_{j=1}^2x_iy_j \bigg) \\ & = \sum_{i=1}^2 \sum_{j=1}^2x_iy_j \end{align}\] One result that will be very useful throughout the book is:

\[ \sum_{i=1}^n(x_i - \overline{x})^2=\sum_{i=1}^n x_i^2 - n(\overline{x})^2 \tag{2.8}\]

An overly long, step-by-step proof is below. Note that the summation index is suppressed after the first line for easier reading.

\[\begin{align} \sum_{i=1}^n(x_i-\overline{x})^2 & = \sum_{i=1}^n (x_i^2-2x_i\overline{x}+\overline{x}^2) \\ & = \sum x_i^2 - 2\overline{x} \sum x_i +n\overline{x}^2 \\ & = \sum x_i^2 - 2 \dfrac{1}{n} \sum x_i \sum x_i +n\overline{x}^2 \\ & = \sum x_i^2 +n\overline{x}^2 - \dfrac{2}{n} \bigg (\sum x_i \bigg )^2 \\ & = \sum x_i^2+n\bigg (\dfrac{1}{n} \sum x_i \bigg)^2 - 2n \bigg (\dfrac{1}{n} \sum x_i \bigg )^2 \\ & = \sum x_i^2 - n \bigg (\dfrac{1}{n} \sum x_i \bigg )^2 \\ & = \sum x_i^2 - n \overline{x}^2 \end{align}\]

A more general version of this result is:

\[\begin{align} \sum_{i=1}^n(x_i-\overline{x})(y_i-\overline{y}) & = \sum_{i=1}^n x_i(y_i - \overline{y}) \\ & = \sum_{i=1}^n (x_i - \overline{x})y_i \\ & = \sum_{i=1}^n x_iy_i - n(\overline{xy}) \\ \end{align}\]

Or: \[\begin{align} \sum_{i=1}^n (x_i -\overline{x})(y_i - \overline{y}) &= \sum_{i=1}^n x_i(y_i - \overline{y}) \\ &= \sum_{i=1}^n (x_i - \overline{x})y_i = \sum_{i=1}^n x_i y_i - n(\overline{x}\overline{y}) \end{align}\]

The expected value of a random variable, also called the expectation and sometimes the population mean, is simply the weighted average of the possible values that the variable can take, with the weights being given by the probability of each value occurring in the population. Suppose that the variable \(X\) can take on values \(x_1, x_2, \ldots, x_k\), each with probability \(f(x_1), f(x_2), \ldots, f(x_k)\), respectively. Then we define the expected value of \(X\) as: \[\begin{align} E(X) & = x_1f(x_1)+x_2f(x_2)+\dots+x_kf(x_k) \\ & = \sum_{j=1}^k x_jf(x_j) \end{align}\] Let’s look at a numerical example. If \(X\) takes on values of \(-1\), 0, and 2, with probabilities 0.3, 0.3, and 0.4, respectively.6 Then the expected value of \(X\) equals:

\[\begin{align} E(X) & = (-1)(0.3)+(0)(0.3)+(2)(0.4) \\ & = 0.5 \end{align}\] In fact, you could take the expectation of a function of that variable, too, such as \(X^2\). Note that \(X^2\) takes only the values 1, 0, and 4, with probabilities 0.3, 0.3, and 0.4. Calculating the expected value of \(X^2\) therefore is:

\[\begin{align} E(X^2) & = (-1)^2(0.3)+(0)^2(0.3)+(2)^2(0.4) \\ & = 1.9 \end{align}\]

The first property of expected value is that for any constant \(c\), \(E(c)=c\). The second property is that for any two constants \(a\) and \(b\), then \(E(aX+ b)=E(aX)+E(b)=aE(X)+b\). And the third property is that if we have numerous constants, \(a_1, \dots, a_n\) and many random variables, \(X_1, \dots, X_n\), then the following is true:

\[\begin{align} E(a_1X_1+\dots+a_nX_n)=a_1E(X_1)+\dots+a_nE(X_n) \end{align}\]

We can also express this using the expectation operator:

\[\begin{align} E\bigg(\sum_{i=1}^na_iX_i\bigg)=\sum_{i=1}a_iE(X_i) \end{align}\]

And in the special case where \(a_i=1\), then

\[\begin{align} E\bigg(\sum_{i=1}^nX_i\bigg)=\sum_{i=1}^nE(X_i) \end{align}\]

The expectation operator, \(E(\cdot)\), is a population concept. It refers to the whole group of interest, not just to the sample available to us. Its meaning is somewhat similar to that of the average of a random variable in the population. Some additional properties for the expectation operator can be explained assuming two random variables, \(W\) and \(H\).

\[\begin{align} E(aW+b) & = aE(W)+b\ \text{for any constants $a$, $b$} \\ E(W+H) & = E(W)+E(H) \\ E\Big(W - E(W)\Big) & = 0 \end{align}\] Consider the variance of a random variable, \(W\):

\[\begin{align} V(W)=\sigma^2=E\Big[\big(W-E(W)\big)^2\Big]\ \text{in the population} \end{align}\] We can show \[ V(W)=E(W^2) - E(W)^2 \tag{2.9}\] In a given sample of data, we can estimate the variance by the following calculation:

\[\begin{align} \widehat{S}^2=(n-1)^{-1}\sum_{i=1}^n(x_i - \overline{x})^2 \end{align}\] where we divide by \(n\ -\ 1\) because we are making a degree-of-freedom adjustment from estimating the mean. But in large samples, this degree-of-freedom adjustment has no practical effect on the value of \(S^2\) where \(S^2\) is the average (after a degree of freedom correction) over the sum of all squared deviations from the mean.7

A few more properties of variance. First, the variance of a line is: \[ V(aX+b)=a^2V(X) \tag{2.10}\]

And the variance of a constant is 0 (i.e., \(V(c)=0\) for any constant, \(c\)). The variance of the sum of two random variables is equal to: \[ V(X+Y)=V(X)+V(Y)+2\Big(E(XY) - E(X)E(Y)\Big) \tag{2.11}\] If the two variables are independent, then \(E(XY)=E(X)E(Y)\) and \(V(X+Y)\) is equal to the sum of \(V(X)+V(Y)\).

The last part of Equation 2.11 is called the covariance. The covariance measures the amount of linear dependence between two random variables. We represent it with the \(C(X,Y)\) operator. The expression \(C(X,Y)>0\) indicates that two variables move in the same direction, whereas \(C(X,Y)<0\) indicates that they move in opposite directions. Thus we can rewrite Equation 2.11 as:

\[\begin{align} V(X+Y)=V(X)+V(Y)+2C(X,Y) \end{align}\] While it’s tempting to say that a zero covariance means that two random variables are unrelated, that is incorrect. They could have a nonlinear relationship. The definition of covariance is \[ C(X,Y) = E(XY) - E(X)E(Y) \tag{2.12}\] As we said, if \(X\) and \(Y\) are independent, then \(C(X,Y)=0\) in the population. The covariance between two linear functions is:

\[\begin{align} C(a_1+b_1X, a_2+b_2Y)=b_1b_2C(X,Y) \end{align}\] The two constants, \(a_1\) and \(a_2\), zero out because their mean is themselves and so the difference equals 0.

Interpreting the magnitude of the covariance can be tricky. For that, we are better served by looking at correlation. We define correlation as follows. Let \(W=\dfrac{X-E(X)}{\sqrt{V(X)}}\) and \(Z=\dfrac{Y - E(Y)}{\sqrt{V(Y)}}\). Then: \[ \text{Corr}(X,Y) = \text{Cov}(W,Z)=\dfrac{C(X,Y)}{\sqrt{V(X)V(Y)}} \tag{2.13}\] The correlation coefficient is bounded by \(-1\) and 1. A positive (negative) correlation indicates that the variables move in the same (opposite) ways. The closer the coefficient is to 1 or \(-1\), the stronger the linear relationship is.

We begin with cross-sectional analysis. We will assume that we can collect a random sample from the population of interest. Assume that there are two variables, \(x\) and \(y\), and we want to see how \(y\) varies with changes in \(x\).8

There are three questions that immediately come up. One, what if \(y\) is affected by factors other than \(x\)? How will we handle that? Two, what is the functional form connecting these two variables? Three, if we are interested in the causal effect of \(x\) on \(y\), then how can we distinguish that from mere correlation? Let’s start with a specific model. \[ y=\beta_0+\beta_1x+u \tag{2.14}\] This model is assumed to hold in the population. Equation 2.14 defines a linear bivariate regression model. For models concerned with capturing causal effects, the terms on the left side are usually thought of as the effect, and the terms on the right side are thought of as the causes.

Equation 2.14 explicitly allows for other factors to affect \(y\) by including a random variable called the error term, \(u\). This equation also explicitly models the functional form by assuming that \(y\) is linearly dependent on \(x\). We call the \(\beta_0\) coefficient the intercept parameter, and we call the \(\beta_1\) coefficient the slope parameter. These describe a population, and our goal in empirical work is to estimate their values. We never directly observe these parameters, because they are not data (I will emphasize this throughout the book). What we can do, though, is estimate these parameters using data and assumptions. To do this, we need credible assumptions to accurately estimate these parameters with data. We will return to this point later. In this simple regression framework, all unobserved variables that determine \(y\) are subsumed by the error term \(u\).

First, we make a simplifying assumption without loss of generality. Let the expected value of \(u\) be zero in the population. Formally: \[ E(u)=0 \tag{2.15}\] where \(E(\cdot)\) is the expected value operator discussed earlier. If we normalize the \(u\) random variable to be 0, it is of no consequence. Why? Because the presence of \(\beta_0\) (the intercept term) always allows us this flexibility. If the average of \(u\) is different from 0—for instance, say that it’s \(\alpha_0\)—then we adjust the intercept. Adjusting the intercept has no effect on the \(\beta_1\) slope parameter, though. For instance:

\[\begin{align} y=(\beta_0+\alpha_0)+\beta_1x+(u-\alpha_0) \end{align}\] where \(\alpha_0=E(u)\). The new error term is \(u-\alpha_0\), and the new intercept term is \(\beta_0+ \alpha_0\). But while those two terms changed, notice what did not change: the slope, \(\beta_1\).

An assumption that meshes well with our elementary treatment of statistics involves the mean of the error term for each “slice” of the population determined by values of \(x\): \[ E(u\mid x)=E(u)\ \text{for all values $x$} \tag{2.16}\] where \(E(u\mid x)\) means the “expected value of \(u\) given \(x\).” If Equation 2.16 holds, then we say that \(u\) is mean independent of \(x\).

An example might help here. Let’s say we are estimating the effect of schooling on wages, and \(u\) is unobserved ability. Mean independence requires that \(E(\text{ability}\mid x=8)=E(\text{ability}\mid x=12)=E(\text{ability}\mid x=16)\) so that the average ability is the same in the different portions of the population with an eighth-grade education, a twelfth-grade education, and a college education. Because people choose how much schooling to invest in based on their own unobserved skills and attributes, Equation 2.16 is likely violated—at least in our example.

But let’s say we are willing to make this assumption. Then combining this new assumption, \(E(u\mid x)=E(u)\) (the nontrivial assumption to make), with \(E(u)=0\) (the normalization and trivial assumption), and you get the following new assumption: \[ E(u\mid x)=0,\ \text{for all values $x$} \tag{2.17}\] Equation 2.17 is called the zero conditional mean assumption and is a key identifying assumption in regression models. Because the conditional expected value is a linear operator, \(E(u\mid x)=0\) implies that \[ E(y\mid x)=\beta_0+\beta_1x \] which shows the population regression function is a linear function of \(x\), or what Angrist and Pischke (2009) call the conditional expectation function.9 This relationship is crucial for the intuition of the parameter, \(\beta_1\), as a causal parameter.

Buy the print version today:

Given data on \(x\) and \(y\), how can we estimate the population parameters, \(\beta_0\) and \(\beta_1\)? Let the pairs of \(\big\{(x_i,\ \textrm{and}\ y_i): i=1,2,\dots,n \big\}\) be random samples of size \(n\) from the population. Plug any observation into the population equation:

\[ y_i=\beta_0+\beta_1x_i+u_i \] where \(i\) indicates a particular observation. We observe \(y_i\) and \(x_i\) but not \(u_i\). We just know that \(u_i\) is there. We then use the two population restrictions that we discussed earlier:

\[\begin{align} E(u) & =0 \\ E(u\mid x) & = 0 \end{align}\]

to obtain estimating equations for \(\beta_0\) and \(\beta_1\). We talked about the first condition already. The second one, though, means that the mean value of the error term does not change with different slices of \(x\). This independence assumption implies \(E(xu)=0\), we get \(E(u)=0\), and \(C(x,u)=0\). Next we plug in for \(u\), which is equal to \(y-\beta_0-\beta_1x\):

\[\begin{align} E(y-\beta_0-\beta_1x) & =0 \\ \Big(x[y-\beta_0-\beta_1x]\Big) & =0 \end{align}\]

These are the two conditions in the population that effectively determine \(\beta_0\) and \(\beta_1\). And again, note that the notation here is population concepts. We don’t have access to populations, though we do have their sample counterparts:

\[\begin{align} \dfrac{1}{n}\sum_{i=1}^n\Big(y_i-\widehat{\beta_0}-\widehat{\beta_1}x_i\Big) & =0 \\ \dfrac{1}{n}\sum_{i=1}^n \Big(x_i \Big[y_i - \widehat{\beta_0} - \widehat{\beta_1} x_i \Big]\Big) & =0 \end{align}\] where \(\widehat{\beta}_0\) and \(\widehat{\beta}_1\) are the estimates from the data.10 These are two linear equations in the two unknowns \(\widehat{\beta}_0\) and \(\widehat{\beta}_1\). Recall the properties of the summation operator as we work through the following sample properties of these two equations. We begin with the above equation and pass the summation operator through.

\[\begin{align} \dfrac{1}{n}\sum_{i=1}^n\Big(y_i-\widehat{\beta_0}-\widehat{\beta_1}x_i\Big) & = \dfrac{1}{n}\sum_{i=1}^n(y_i) - \dfrac{1}{n}\sum_{i=1}^n\widehat{\beta_0} - \dfrac{1}{n}\sum_{i=1}^n\widehat{\beta_1}x_i \\ & = \dfrac{1}{n}\sum_{i=1}^n y_i - \widehat{\beta_0} - \widehat{\beta_1} \bigg( \dfrac{1}{n}\sum_{i=1}^n x_i \bigg) \\ & = \overline{y} - \widehat{\beta_0} - \widehat{\beta_1} \overline{x} \end{align}\] where \(\overline{y}=\dfrac{1}{n}\sum_{i=1}^n y_i\) which is the average of the \(n\) numbers \(\{y_i:1,\dots,n\}\). For emphasis we will call \(\overline{y}\) the sample average. We have already shown that the first equation equals zero, so this implies \(\overline{y}=\widehat{\beta_0}+\widehat{\beta_1} \overline{x}\). So we now use this equation to write the intercept in terms of the slope:

\[\begin{align} \widehat{\beta_0}=\overline{y}-\widehat{\beta_1} \overline{x} \end{align}\] We now plug \(\widehat{\beta_0}\) into the second equation, \(\sum_{i=1}^n x_i (y_i-\widehat{\beta_0} - \widehat{\beta_1}x_i)=0\). This gives us the following (with some simple algebraic manipulation):

\[\begin{align} \sum_{i=1}^n x_i\Big[y_i-(\overline{y}- \widehat{\beta_1} \overline{x})-\widehat{\beta_1} x_i\Big] & =0 \\ \sum_{i=1}^n x_i(y_i-\overline{y}) & = \widehat{\beta_1} \bigg[ \sum_{i=1}^n x_i(x_i- \overline{x})\bigg] \end{align}\] So the equation to solve is11

\[\begin{align} \sum_{i=1}^n (x_i-\overline{x}) (y_i- \overline{y})=\widehat{\beta_1} \bigg[ \sum_{i=1}^n (x_i - \overline{x})^2 \bigg] \end{align}\] If \(\sum_{i=1}^n(x_i-\overline{x})^2\ne0\), we can write: \[\begin{align} \widehat{\beta}_1 & = \dfrac{\sum_{i=1}^n (x_i-\overline{x}) (y_i-\overline{y})}{\sum_{i=1}^n(x_i-\overline{x})^2 } \\ & =\dfrac{\text{Sample covariance}(x_i,y_i) }{\text{Sample variance}(x_i)} \end{align}\]

The previous formula for \(\widehat{\beta}_1\) is important because it shows us how to take data that we have and compute the slope estimate. The estimate, \(\widehat{\beta}_1\), is commonly referred to as the ordinary least squares (OLS) slope estimate. It can be computed whenever the sample variance of \(x_i\) isn’t 0. In other words, it can be computed if \(x_i\) is not constant across all values of \(i\). The intuition is that the variation in \(x\) is what permits us to identify its impact in \(y\). This also means, though, that we cannot determine the slope in a relationship if we observe a sample in which everyone has the same years of schooling, or whatever causal variable we are interested in.

Once we have calculated \(\widehat{\beta}_1\), we can compute the intercept value, \(\widehat{\beta}_0\), as \(\widehat{\beta}_0=\overline{y} - \widehat{\beta}_1\overline{x}\). This is the OLS intercept estimate because it is calculated using sample averages. Notice that it is straightforward because \(\widehat{\beta}_0\) is linear in \(\widehat{\beta}_1\). With computers and statistical programming languages and software, we let our computers do these calculations because even when \(n\) is small, these calculations are quite tedious.

For any candidate estimates, \(\widehat{\beta}_0, \widehat{\beta}_1\), we define a fitted value for each \(i\) as:

\[\begin{align} \widehat{y_i}=\widehat{\beta}_0+\widehat{\beta}_1x_i \end{align}\] Recall that \(i=\{1, \ldots, n\}\), so we have \(n\) of these equations. This is the value we predict for \(y_i\) given that \(x=x_i\). But there is prediction error because \(y\ne y_i\). We call that mistake the residual, and here use the \(\widehat{u_i}\) notation for it. So the residual equals:

\[\begin{align} \widehat{u_i} & = y_i-\widehat{y_i} \\ \widehat{u_i} & = y_i-\widehat{\beta_0}-\widehat{\beta_1}x_i \end{align}\] While both the residual and the error term are represented with a \({u}\), it is important that you know the differences. The residual is the prediction error based on our fitted \(\widehat{y}\) and the actual \(y\). The residual is therefore easily calculated with any sample of data. But \(u\) without the hat is the error term, and it is by definition unobserved by the researcher. Whereas the residual will appear in the data set once generated from a few steps of regression and manipulation, the error term will never appear in the data set. It is all of the determinants of our outcome not captured by our model. This is a crucial distinction, and strangely enough it is so subtle that even some seasoned researchers struggle to express it.

Suppose we measure the size of the mistake, for each \(i\), by squaring it. Squaring it will, after all, eliminate all negative values of the mistake so that everything is a positive value. This becomes useful when summing the mistakes if we don’t want positive and negative values to cancel one another out. So let’s do that: square the mistake and add them all up to get \(\sum_{i=1}^n \widehat{u_i}^2\):

\[\begin{align} \sum_{i=1}^n \widehat{u_i}^2 & =\sum_{i=1}^n (y_i - \widehat{y_i})^2 \\ & = \sum_{i=1}^n \Big(y_i-\widehat{\beta_0}-\widehat{\beta_1}x_i\Big)^2 \end{align}\]

This equation is called the sum of squared residuals because the residual is \(\widehat{u_i}=y_i-\widehat{y}\). But the residual is based on estimates of the slope and the intercept. We can imagine any number of estimates of those values. But what if our goal is to minimize the sum of squared residuals by choosing \(\widehat{\beta_0}\) and \(\widehat{\beta_1}\)? Using calculus, it can be shown that the solutions to that problem yield parameter estimates that are the same as what we obtained before.

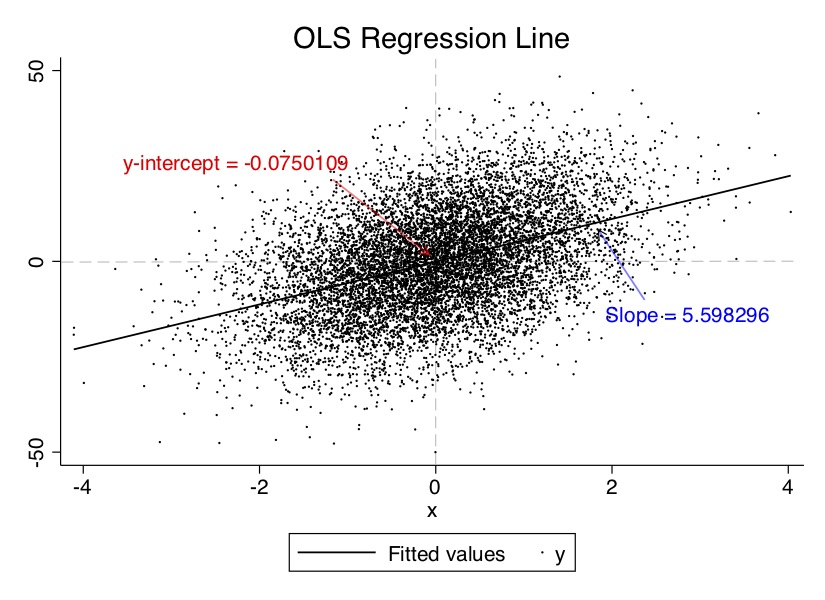

Once we have the numbers \(\widehat{\beta}_0\) and \(\widehat{\beta}_1\) for a given data set, we write the OLS regression line: \[ \widehat{y}=\widehat{\beta_0}+\widehat{\beta_1}x \] Let’s consider a short simulation.

set seed 1

clear

set obs 10000

gen x = rnormal()

gen u = rnormal()

gen y = 5.5*x + 12*u

reg y x

predict yhat1

gen yhat2 = -0.0750109 + 5.598296*x // Compare yhat1 and yhat2

sum yhat*

predict uhat1, residual

gen uhat2=y-yhat2

sum uhat*

twoway (lfit y x, lcolor(black) lwidth(medium)) (scatter y x, mcolor(black) ///

msize(tiny) msymbol(point)), title(OLS Regression Line)

rvfplot, yline(0) library(tidyverse)

set.seed(1)

tb <- tibble(

x = rnorm(10000),

u = rnorm(10000),

y = 5.5*x + 12*u

)

reg_tb <- tb %>%

lm(y ~ x, .) %>%

print()

reg_tb$coefficients

tb <- tb %>%

mutate(

yhat1 = predict(lm(y ~ x, .)),

yhat2 = 0.0732608 + 5.685033*x,

uhat1 = residuals(lm(y ~ x, .)),

uhat2 = y - yhat2

)

summary(tb[-1:-3])

tb %>%

lm(y ~ x, .) %>%

ggplot(aes(x=x, y=y)) +

ggtitle("OLS Regression Line") +

geom_point(size = 0.05, color = "black", alpha = 0.5) +

geom_smooth(method = lm, color = "black") +

annotate("text", x = -1.5, y = 30, color = "red",

label = paste("Intercept = ", -0.0732608)) +

annotate("text", x = 1.5, y = -30, color = "blue",

label = paste("Slope =", 5.685033))import numpy as np

import pandas as pd

import statsmodels.api as sm

import statsmodels.formula.api as smf

from itertools import combinations

import plotnine as p

# read data

import ssl

ssl._create_default_https_context = ssl._create_unverified_context

def read_data(file):

return pd.read_stata("https://github.com/scunning1975/mixtape/raw/master/" + file)

np.random.seed(1)

tb = pd.DataFrame({

'x': np.random.normal(size=10000),

'u': np.random.normal(size=10000)})

tb['y'] = 5.5*tb['x'].values + 12*tb['u'].values

reg_tb = sm.OLS.from_formula('y ~ x', data=tb).fit()

reg_tb.summary()

tb['yhat1'] = reg_tb.predict(tb)

tb['yhat2'] = 0.1114 + 5.6887*tb['x']

tb['uhat1'] = reg_tb.resid

tb['uhat2'] = tb['y'] - tb['yhat2']

tb.describe()

p.ggplot(tb, p.aes(x='x', y='y')) +\

p.ggtitle("OLS Regression Line") +\

p.geom_point(size = 0.05, color = "black", alpha = 0.5) +\

p.geom_smooth(p.aes(x='x', y='y'), method = "lm", color = "black") +\

p.annotate("text", x = -1.5, y = 30, color = "red",

label = "Intercept = {}".format(-0.0732608)) +\

p.annotate("text", x = 1.5, y = -30, color = "blue",

label = "Slope = {}".format(5.685033))Let’s look at the output from this. First, if you summarize the data, you’ll see that the fitted values are produced both using Stata’s Predict command and manually using the Generate command. I wanted the reader to have a chance to better understand this, so did it both ways. But second, let’s look at the data and paste on top of it the estimated coefficients, the y-intercept and slope on \(x\) in Figure 2.1. The estimated coefficients in both are close to the hard coded values built into the data-generating process.

Once we have the estimated coefficients and we have the OLS regression line, we can predict \(y\) (outcome) for any (sensible) value of \(x\). So plug in certain values of \(x\), and we can immediately calculate what \(y\) will probably be with some error. The value of OLS here lies in how large that error is: OLS minimizes the error for a linear function. In fact, it is the best such guess at \(y\) for all linear estimators because it minimizes the prediction error. There’s always prediction error, in other words, with any estimator, but OLS is the least worst.

Notice that the intercept is the predicted value of \(y\) if and when \(x=0\). In this sample, that value is \(-0.0750109\).12 The slope allows us to predict changes in \(y\) for any reasonable change in \(x\) according to:

\[ \Delta \widehat{y}=\widehat{\beta}_1 \Delta x \] And if \(\Delta x=1\), then \(x\) increases by one unit, and so \(\Delta \widehat{y}=5.598296\) in our numerical example because \(\widehat{\beta}_1=5.598296\).

Now that we have calculated \(\widehat{\beta}_0\) and \(\widehat{\beta}_1\), we get the OLS fitted values by plugging \(x_i\) into the following equation for \(i=1,\dots,n\):

\[ \widehat{y_i}=\widehat{\beta}_0+\widehat{\beta}_1x_i \] The OLS residuals are also calculated by:

\[ \widehat{u_i}=y_i - \widehat{\beta}_0 - \widehat{\beta}_1 x_i \] Most residuals will be different from 0 (i.e., they do not lie on the regression line). You can see this in Figure 2.1. Some are positive, and some are negative. A positive residual indicates that the regression line (and hence, the predicted values) underestimates the true value of \(y_i\). And if the residual is negative, then the regression line overestimates the true value.

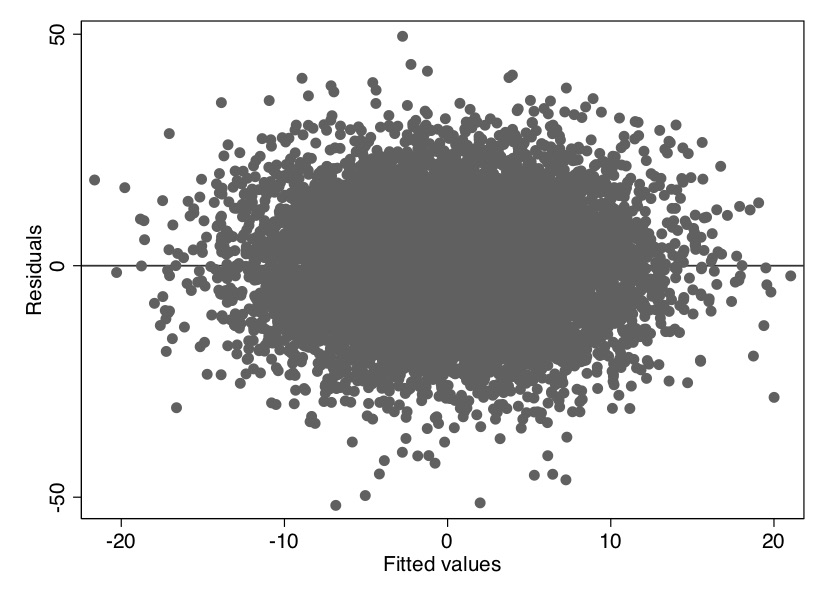

Recall that we defined the fitted value as \(\widehat{y_i}\) and the residual, \(\widehat{u_i}\), as \(y_i - \widehat{y_i}\). Notice that the scatter-plot relationship between the residuals and the fitted values created a spherical pattern, suggesting that they are not correlated (Figure 2.2). This is mechanical—least squares produces residuals which are uncorrelated with fitted values. There’s no magic here, just least squares.

Remember how we obtained \(\widehat{\beta}_0\) and \(\widehat{\beta}_1\)? When an intercept is included, we have:

\[\begin{align} \sum_{i=1}^n\Big(y_i-\widehat{\beta}_0 -\widehat{\beta}_1x_i\Big) =0 \end{align}\] The OLS residual always adds up to zero, by construction. \[ \sum_{i=1}^n \widehat{u_i}=0 \tag{2.18}\] Sometimes seeing is believing, so let’s look at this together. Type the following into Stata verbatim.

clear

set seed 1234

set obs 10

gen x = 9*rnormal()

gen u = 36*rnormal()

gen y = 3 + 2*x + u

reg y x

predict yhat

predict residuals, residual

su residuals

list

collapse (sum) x u y yhat residuals

listlibrary(tidyverse)

set.seed(1)

tb <- tibble(

x = 9*rnorm(10),

u = 36*rnorm(10),

y = 3 + 2*x + u,

yhat = predict(lm(y ~ x)),

uhat = residuals(lm(y ~ x))

)

summary(tb)

colSums(tb)import numpy as np

import pandas as pd

import statsmodels.api as sm

import statsmodels.formula.api as smf

from itertools import combinations

import plotnine as p

# read data

import ssl

ssl._create_default_https_context = ssl._create_unverified_context

def read_data(file):

return pd.read_stata("https://github.com/scunning1975/mixtape/raw/master/" + file)

tb = pd.DataFrame({

'x': 9*np.random.normal(size=10),

'u': 36*np.random.normal(size=10)})

tb['y'] = 3*tb['x'].values + 2*tb['u'].values

reg_tb = sm.OLS.from_formula('y ~ x', data=tb).fit()

tb['yhat1'] = reg_tb.predict(tb)

tb['uhat1'] = reg_tb.resid

tb.describe()Output from this can be summarized as in the following table (Table 2.6).

| no. | \(x\) | \(u\) | \(y\) | \(\widehat{y}\) | \(\widehat{u}\) | \(x\widehat{u}\) | \(\widehat{y}\widehat{u}\) |

|---|---|---|---|---|---|---|---|

| 1. | \(-4.381653\) | \(-32.95803\) | \(-38.72134\) | \(-3.256034\) | \(-35.46531\) | 155.3967 | 115.4762 |

| 2. | \(-13.28403\) | \(-8.028061\) | \(-31.59613\) | \(-26.30994\) | \(-5.28619\) | 70.22192 | 139.0793 |

| 3. | \(-.0982034\) | 17.80379 | 20.60738 | 7.836532 | 12.77085 | \(-1.254141\) | 100.0792 |

| 4. | \(-.1238423\) | \(-9.443188\) | \(-6.690872\) | 7.770137 | \(-14.46101\) | 1.790884 | \(-112.364\) |

| 5. | 4.640209 | 13.18046 | 25.46088 | 20.10728 | 5.353592 | 24.84179 | 107.6462 |

| 6. | \(-1.252096\) | \(-34.64874\) | \(-34.15294\) | 4.848374 | \(-39.00131\) | 48.83337 | \(-189.0929\) |

| 7. | 11.58586 | 9.118524 | 35.29023 | 38.09396 | \(-2.80373\) | \(-32.48362\) | \(-106.8052\) |

| 8. | \(-5.289957\) | 82.23296 | 74.65305 | \(-5.608207\) | 80.26126 | \(-424.5786\) | \(-450.1217\) |

| 9. | \(-.2754041\) | 11.60571 | 14.0549 | 7.377647 | 6.677258 | \(-1.838944\) | 49.26245 |

| 10. | \(-19.77159\) | \(-14.61257\) | \(-51.15575\) | \(-43.11034\) | \(-8.045414\) | 159.0706 | 346.8405 |

| Sum | \(-28.25072\) | 34.25085 | 7.749418 | 7.749418 | 1.91e-06 | \(-6.56e-06\) | .0000305 |

Notice the difference between the \(u\), \(\widehat{y}\), and \(\widehat{u}\) columns. When we sum these ten lines, neither the error term nor the fitted values of \(y\) sum to zero. But the residuals do sum to zero. This is, as we said, one of the algebraic properties of OLS—coefficients were optimally chosen to ensure that the residuals sum to zero.

Because \(y_i=\widehat{y_i}+\widehat{u_i}\) by definition (which we can also see in Table 6), we can take the sample average of both sides:

\[ \dfrac{1}{n} \sum_{i=1}^n y_i=\dfrac{1}{n} \sum_{i=1}^n \widehat{y_i}+\dfrac{1}{n} \sum_{i=1}^n \widehat{u_i} \] and so \(\overline{y}=\overline{\widehat{y}}\) because the residuals sum to zero. Similarly, the way that we obtained our estimates yields

\[ \sum_{i=1}^n x_i \Big(y_i-\widehat{\beta}_0 - \widehat{\beta}_1 x_i\Big)=0 \] The sample covariance (and therefore the sample correlation) between the explanatory variables and the residuals is always zero (see Table 2.6).

\[ \sum_{i=1}^n x_i \widehat{u_i}=0 \] Because the \(\widehat{y_i}\) are linear functions of the \(x_i\), the fitted values and residuals are uncorrelated too (see Table 2.6):

\[ \sum_{i=1}^n \widehat{y_i} \widehat{u_i}=0 \tag{2.19}\] Both properties hold by construction. In other words, \(\widehat{\beta}_0\) and \(\widehat{\beta}_1\) were selected to make them true.13

A third property is that if we plug in the average for \(x\), we predict the sample average for \(y\). That is, the point \((\overline{x}, \overline{y})\) is on the OLS regression line, or:

\[\begin{align} \overline{y}=\widehat{\beta}_0+\widehat{\beta}_1 \overline{x} \end{align}\]

For each observation, we write

\[\begin{align} y_i=\widehat{y_i}+\widehat{u_i} \end{align}\] Define the total sum of squares (SST), explained sum of squares (SSE), and residual sum of squares (SSR) as

\[ SST = \sum_{i=1}^n (y_i - \overline{y})^2 \tag{2.20}\] \[ SSE = \sum_{i=1}^n (\widehat{y_i} - \overline{y})^2 \tag{2.21}\] \[ SSR = \sum_{i=1}^n \widehat{u_i}^2 \tag{2.22}\]

These are sample variances when divided by \(n-1\).14 \(\dfrac{SST}{n-1}\) is the sample variance of \(y_i\), \(\dfrac{SSE}{n-1}\) is the sample variance of \(\widehat{y_i}\), and \(\dfrac{SSR}{n-1}\) is the sample variance of \(\widehat{u_i}\). With some simple manipulation rewrite Equation 2.20:

\[\begin{align} SST & = \sum_{i=1}^n (y_i - \overline{y})^2 \\ & = \sum_{i=1}^n \Big[ (y_i - \widehat{y_i}) + (\widehat{y_i} - \overline{y}) \Big]^2 \\ & = \sum_{i=1}^n \Big[ \widehat{u_i} + (\widehat{y_i} - \overline{y})\Big]^2 \end{align}\] Since Equation 2.19 shows that the fitted values are uncorrelated with the residuals, we can write the following equation:

\[\begin{align} SST = SSE + SSR \end{align}\] Assuming \(SST>0\), we can define the fraction of the total variation in \(y_i\) that is explained by \(x_i\) (or the OLS regression line) as

\[\begin{align} R^2=\dfrac{SSE}{SST}=1-\dfrac{SSR}{SST} \end{align}\] which is called the R-squared of the regression. It can be shown to be equal to the square of the correlation between \(y_i\) and \(\widehat{y_i}\). Therefore \(0\leq R^2 \leq 1\). An R-squared of zero means no linear relationship between \(y_i\) and \(x_i\), and an \(R\)-squared of one means a perfect linear relationship (e.g., \(y_i=x_i+2\)). As \(R^2\) increases, the \(y_i\) are closer and closer to falling on the OLS regression line.

I would encourage you not to fixate on \(R\)-squared in research projects where the aim is to estimate some causal effect, though. It’s a useful summary measure, but it does not tell us about causality. Remember, you aren’t trying to explain variation in \(y\) if you are trying to estimate some causal effect. The \(R^2\) tells us how much of the variation in \(y_i\) is explained by the explanatory variables. But if we are interested in the causal effect of a single variable, \(R^2\) is irrelevant. For causal inference, we need Equation 2.17.

Up until now, we motivated simple regression using a population model. But our analysis has been purely algebraic, based on a sample of data. So residuals always average to zero when we apply OLS to a sample, regardless of any underlying model. But our job gets tougher. Now we have to study the statistical properties of the OLS estimator, referring to a population model and assuming random sampling.15

The field of mathematical statistics is concerned with questions. How do estimators behave across different samples of data? On average, for instance, will we get the right answer if we repeatedly sample? We need to find the expected value of the OLS estimators—in effect, the average outcome across all possible random samples—and determine whether we are right, on average. This leads naturally to a characteristic called unbiasedness, which is desirable of all estimators. \[ E(\widehat{\beta})=\beta \tag{2.23}\] Remember, our objective is to estimate \(\beta_1\), which is the slope \({population}\) parameter that describes the relationship between \(y\) and \(x\). Our estimate, \(\widehat{\beta_1}\), is an estimator of that parameter obtained for a specific sample. Different samples will generate different estimates (\(\widehat{\beta_1}\)) for the “true” (and unobserved) \(\beta_1\). Unbiasedness means that if we could take as many random samples on \(Y\) as we want from the population and compute an estimate each time, the average of the estimates would be equal to \(\beta_1\).

There are several assumptions required for OLS to be unbiased. The first assumption is called linear in the parameters. Assume a population model

\[ y=\beta_0+\beta_1 x+u \] where \(\beta_0\) and \(\beta_1\) are the unknown population parameters. We view \(x\) and \(u\) as outcomes of random variables generated by some data-generating process. Thus, since \(y\) is a function of \(x\) and \(u\), both of which are random, then \(y\) is also random. Stating this assumption formally shows that our goal is to estimate \(\beta_0\) and \(\beta_1\).

Our second assumption is random sampling. We have a random sample of size \(n\), \(\{ (x_i, y_i){:} i=1, \dots, n\}\), following the population model. We know how to use this data to estimate \(\beta_0\) and \(\beta_1\) by OLS. Because each \(i\) is a draw from the population, we can write, for each \(i\):

\[ y_i=\beta_0+\beta_1x_i+u_i \] Notice that \(u_i\) here is the unobserved error for observation \(i\). It is not the residual that we compute from the data.

The third assumption is called sample variation in the explanatory variable. That is, the sample outcomes on \(x_i\) are not all the same value. This is the same as saying that the sample variance of \(x\) is not zero. In practice, this is no assumption at all. If the \(x_i\) all have the same value (i.e., are constant), we cannot learn how \(x\) affects \(y\) in the population. Recall that OLS is the covariance of \(y\) and \(x\) divided by the variance in \(x\), and so if \(x\) is constant, then we are dividing by zero, and the OLS estimator is undefined.

With the fourth assumption our assumptions start to have real teeth. It is called the zero conditional mean assumption and is probably the most critical assumption in causal inference. In the population, the error term has zero mean given any value of the explanatory variable:

\[ E(u\mid x) = E(u) = 0 \tag{2.24}\] This is the key assumption for showing that OLS is unbiased, with the zero value being of no importance once we assume that \(E(u\mid x)\) does not change with \(x\). Note that we can compute OLS estimates whether or not this assumption holds, even if there is an underlying population model.

So, how do we show that \(\widehat{\beta_1}\) is an unbiased estimate of \(\beta_1\) (Equation 2.24)? We need to show that under the four assumptions we just outlined, the expected value of \(\widehat{\beta_1}\), when averaged across random samples, will center on the true value of \(\beta_1\). This is a subtle yet critical concept. Unbiasedness in this context means that if we repeatedly sample data from a population and run a regression on each new sample, the average over all those estimated coefficients will equal the true value of \(\beta_1\). We will discuss the answer as a series of steps.

Step 1: Write down a formula for \(\widehat{\beta_1}\). It is convenient to use the \(\dfrac{C(x,y)}{V(x)}\) form:

\[\begin{align} \widehat{\beta_1}=\dfrac{\sum_{i=1}^n (x_i - \overline{x})y_i}{\sum_{i=1}^n (x_i - \overline{x})^2} \end{align}\] Let’s get rid of some of this notational clutter by defining \(\sum_{i=1}^n (x_i - \overline{x})^2=SST_x\) (i.e., total variation in the \(x_i\)) and rewrite this as:

\[\begin{align} \widehat{\beta_1}=\dfrac{ \sum_{i=1}^n (x_i - \overline{x})y_i}{SST_x} \end{align}\]

Step 2: Replace each \(y_i\) with \(y_i= \beta_0+\beta_1 x_i+u_i\), which uses the first linear assumption and the fact that we have sampled data (our second assumption). The numerator becomes:

\[\begin{align} \sum_{i=1}^n (x_i - \overline{x})y_i & =\sum_{i=1}^n (x_i - \overline{x})(\beta_0+\beta_1 x_i+u_i) \\ & = \beta_0 \sum_{i=1}^n (x_i - \overline{x})+\beta_1 \sum_{i=1}^n (x_i - \overline{x})x_i+\sum_{i=1}^n (x_i+\overline{x}) u_i \\ & =0+\beta_1 \sum_{i=1}^n (x_i - \overline{x})^2+ \sum_{i=1}^n (x_i - \overline{x})u_i \\ & = \beta_1 SST_x+\sum_{i=1}^n (x_i - \overline{x}) u_i \end{align}\] Note, we used \(\sum_{i=1}^n (x_i-\overline{x})=0\) and \(\sum_{i=1}^n (x_i - \overline{x})x_i=\sum_{i=1}^n (x_i - \overline{x})^2\) to do this.16

We have shown that:

\[\begin{align} \widehat{\beta_1} & = \dfrac{ \beta_1 SST_x+ \sum_{i=1}^n (x_i - \overline{x})u_i }{SST_x} \nonumber \\ & = \beta_1+\dfrac{ \sum_{i=1}^n (x_i - \overline{x})u_i }{SST_x} \end{align}\] Note that the last piece is the slope coefficient from the OLS regression of \(u_i\) on \(x_i\), \(i\): \(1, \dots, n\).17 We cannot do this regression because the \(u_i\) are not observed. Now define \(w_i=\dfrac{(x_i - \overline{x})}{SST_x}\) so that we have the following:

\[\begin{align} \widehat{\beta_1}=\beta_1+\sum_{i=1}^n w_i u_i \end{align}\] This has showed us the following: First, \(\widehat{\beta_1}\) is a linear function of the unobserved errors, \(u_i\). The \(w_i\) are all functions of \(\{ x_1, \dots, x_n \}\). Second, the random difference between \(\beta_1\) and the estimate of it, \(\widehat{\beta_1}\), is due to this linear function of the unobservables.

Step 3: Find \(E(\widehat{\beta_1})\). Under the random sampling assumption and the zero conditional mean assumption, \(E(u_i \mid x_1, \dots, x_n)=0\), that means conditional on each of the \(x\) variables:

\[\begin{align} E\big(w_iu_i\mid x_1, \dots, x_n\big) = w_i E\big(u_i \mid x_1, \dots, x_n\big)=0 \end{align}\] because \(w_i\) is a function of \(\{x_1, \dots, x_n\}\). This would be true if in the population \(u\) and \(x\) are correlated.

Now we can complete the proof: conditional on \(\{x_1, \dots, x_n\}\),

\[\begin{align} E(\widehat{\beta_1}) & = E \bigg(\beta_1+\sum_{i=1}^n w_i u_i \bigg) \\ & =\beta_1+\sum_{i=1}^n E(w_i u_i) \\ & = \beta_1+\sum_{i=1}^n w_i E(u_i) \\ & =\beta_1 +0 \\ & =\beta_1 \end{align}\] Remember, \(\beta_1\) is the fixed constant in the population. The estimator, \(\widehat{\beta_1}\), varies across samples and is the random outcome: before we collect our data, we do not know what \(\widehat{\beta_1}\) will be. Under the four aforementioned assumptions, \(E(\widehat{\beta_0})=\beta_0\) and \(E(\widehat{\beta_1})=\beta_1\).

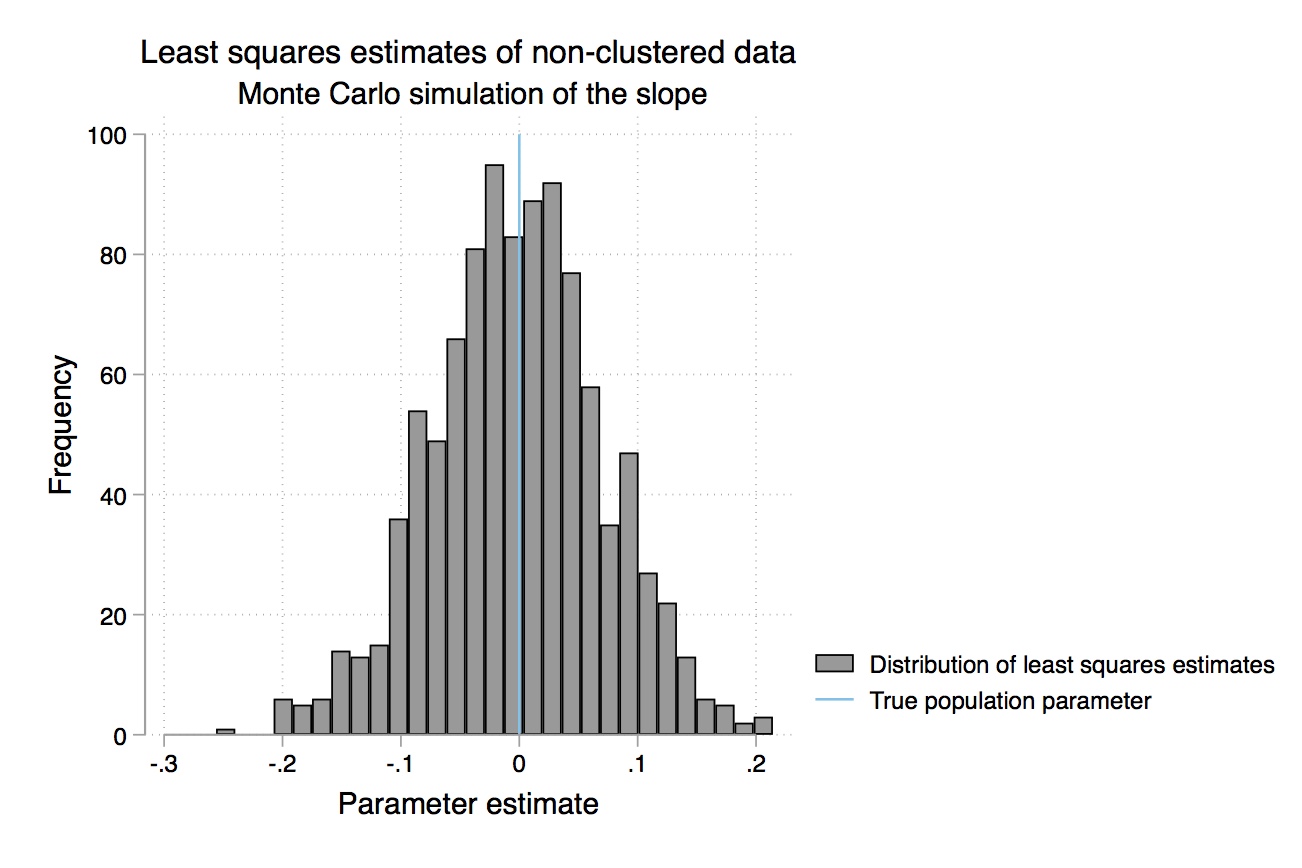

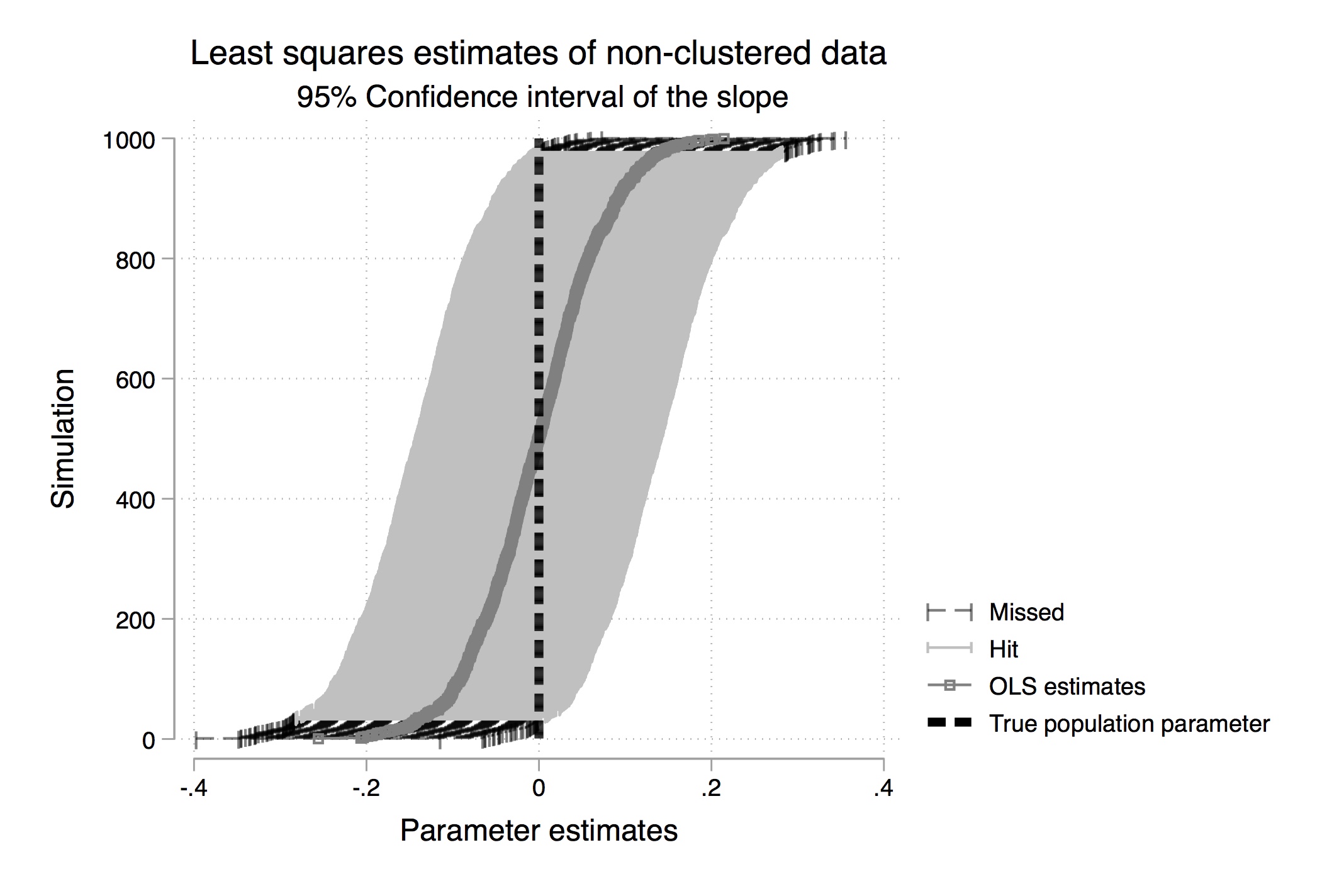

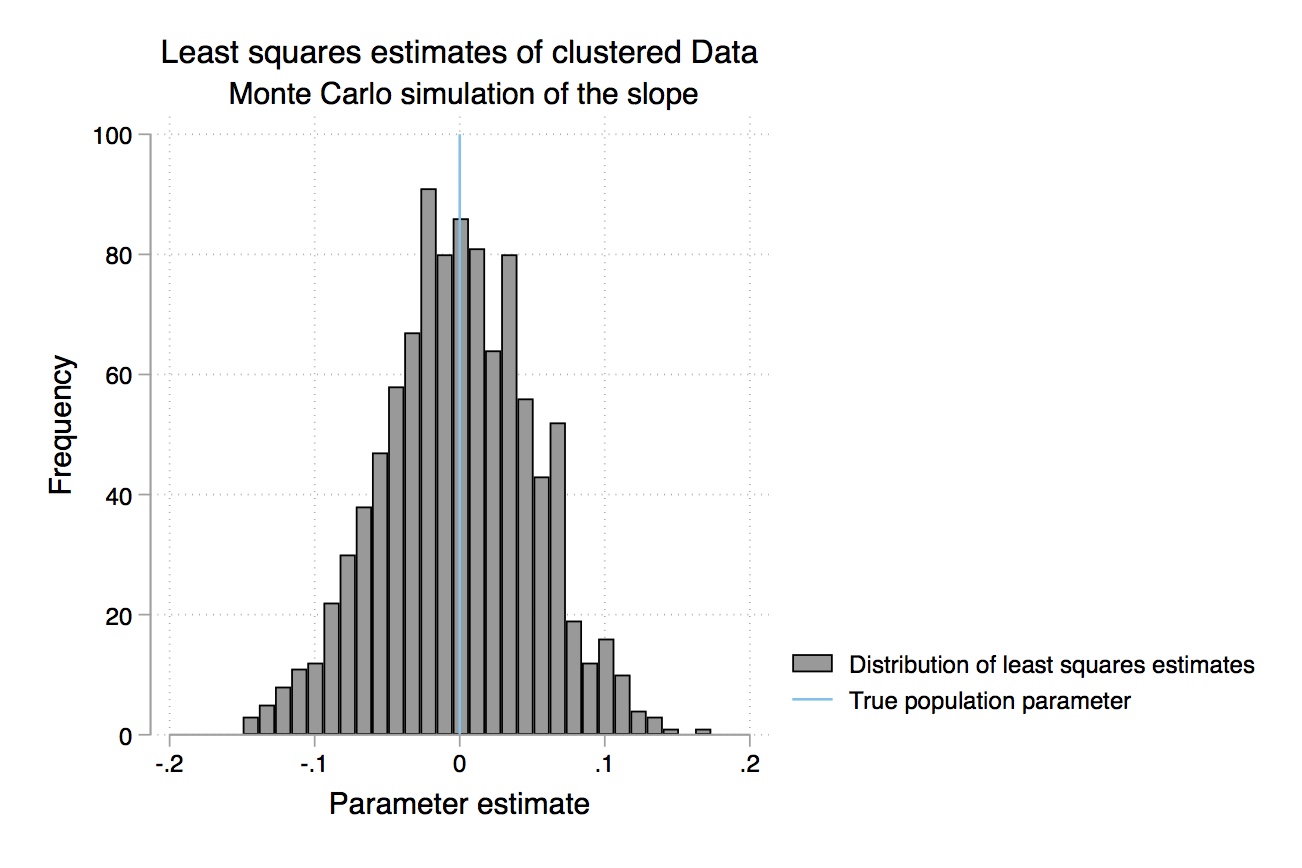

I find it helpful to be concrete when we work through exercises like this. So let’s visualize this. Let’s create a Monte Carlo simulation. We have the following population model:

\[ y=3+2x+u \tag{2.25}\] where \(x\sim Normal(0,9)\), \(u\sim Normal(0,36)\). Also, \(x\) and \(u\) are independent. The following Monte Carlo simulation will estimate OLS on a sample of data 1,000 times. The true \(\beta\) parameter equals 2. But what will the average \(\widehat{\beta}\) equal when we use repeated sampling?

clear all

program define ols, rclass

version 14.2

syntax [, obs(integer 1) mu(real 0) sigma(real 1) ]

clear

drop _all

set obs 10000

gen x = 9*rnormal()

gen u = 36*rnormal()

gen y = 3 + 2*x + u

reg y x

end

simulate beta=_b[x], reps(1000): ols

su

hist betalibrary(tidyverse)

lm <- lapply(

1:1000,

function(x) tibble(

x = 9*rnorm(10000),

u = 36*rnorm(10000),

y = 3 + 2*x + u

) %>%

lm(y ~ x, .)

)

as_tibble(t(sapply(lm, coef))) %>%

summary(x)

as_tibble(t(sapply(lm, coef))) %>%

ggplot()+

geom_histogram(aes(x), binwidth = 0.01)import numpy as np

import pandas as pd

import statsmodels.api as sm

import statsmodels.formula.api as smf

from itertools import combinations

import plotnine as p

# read data

import ssl

ssl._create_default_https_context = ssl._create_unverified_context

def read_data(file):

return pd.read_stata("https://github.com/scunning1975/mixtape/raw/master/" + file)

coefs = np.zeros(1000)

for i in range(1000):

tb = pd.DataFrame({

'x': 9*np.random.normal(size=10000),

'u': 36*np.random.normal(size=10000)})

tb['y'] = 3 + 2*tb['x'].values + tb['u'].values

reg_tb = sm.OLS.from_formula('y ~ x', data=tb).fit()

coefs[i] = reg_tb.params['x']

p.ggplot() +\

p.geom_histogram(p.aes(x=coefs), binwidth = 0.01)| Variable | Obs | Mean | St. Dev. |

|---|---|---|---|

| beta | 1,000 | 1.998317 | 0.0398413 |

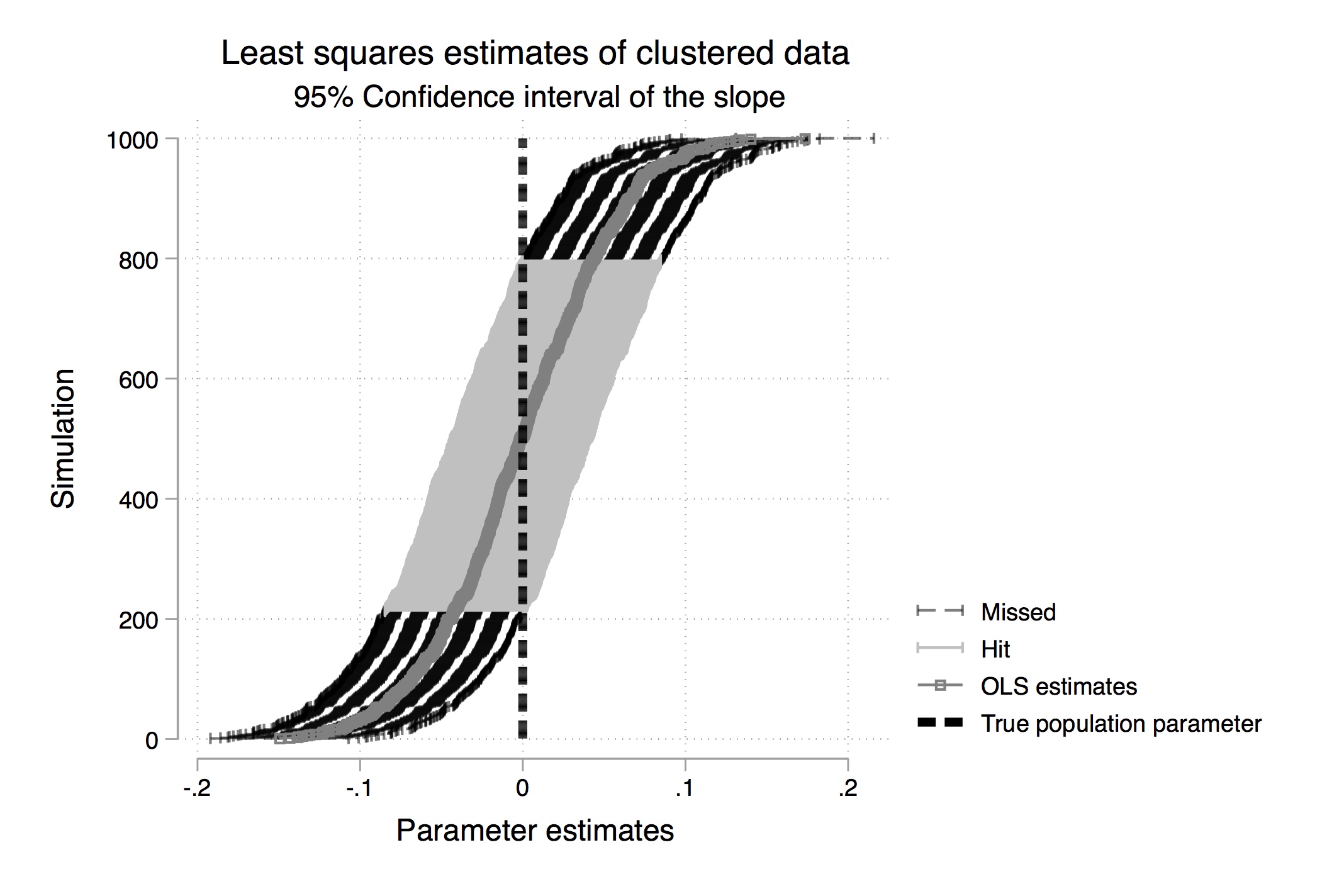

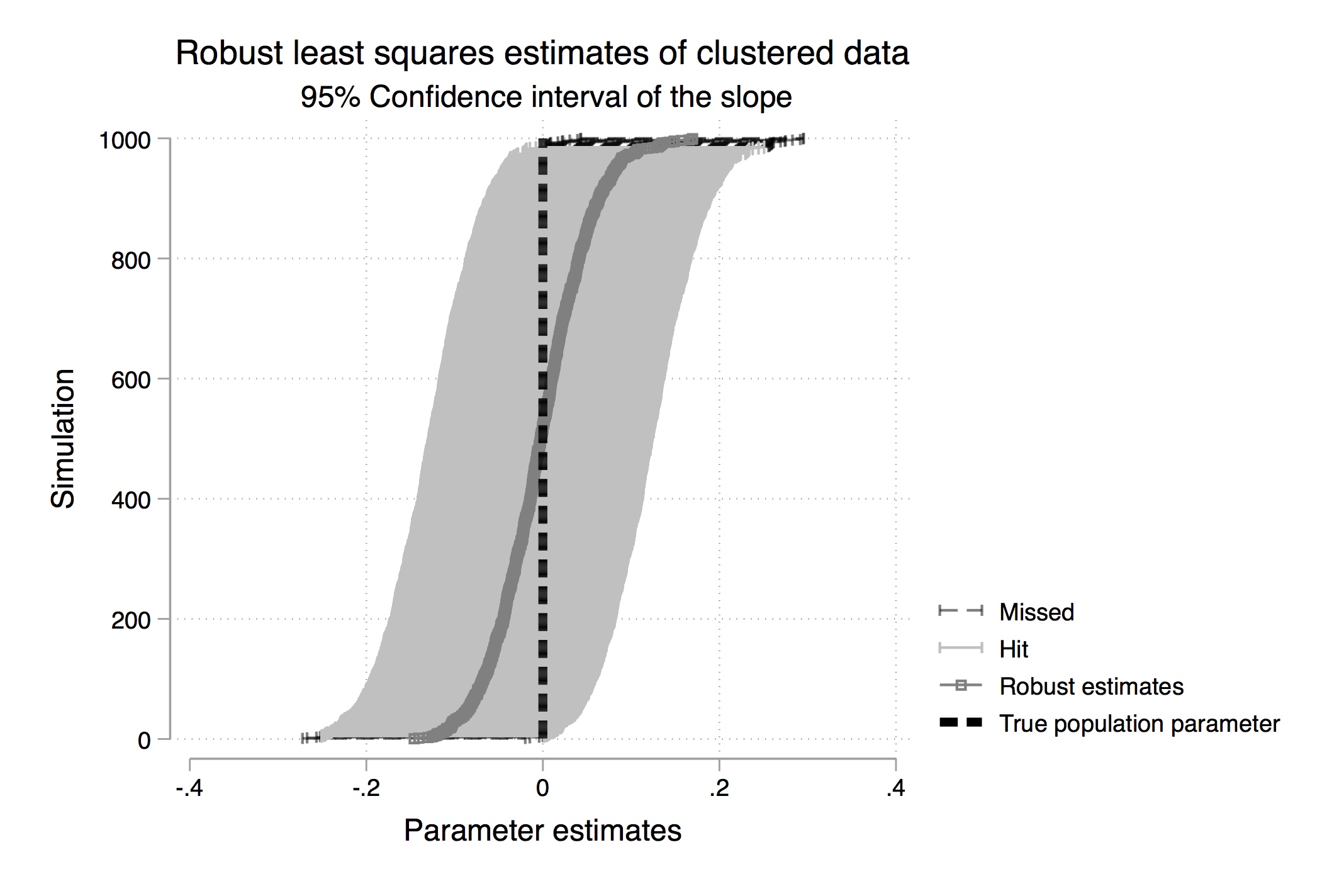

Table 2.7 gives us the mean value of \(\widehat{\beta-1}\) over the 1,000 repetitions (repeated sampling). Your results will differ from mine here only in the randomness involved in the simulation. But your results should be similar to what is shown here. While each sample had a different estimated slope, the average for \(\widehat{\beta-1}\) over all the samples was 1.998317, which is close to the true value of 2 (see Equation 2.25). The standard deviation in this estimator was 0.0398413, which is close to the standard error recorded in the regression itself.18 Thus, we see that the estimate is the mean value of the coefficient from repeated sampling, and the standard error is the standard deviation from that repeated estimation. We can see the distribution of these coefficient estimates in Figure 2.3.

The problem is, we don’t know which kind of sample we have. Do we have one of the “almost exactly 2” samples, or do we have one of the “pretty different from 2” samples? We can never know whether we are close to the population value. We hope that our sample is “typical” and produces a slope estimate close to \(\widehat{\beta_1}\), but we can’t know. Unbiasedness is a property of the procedure of the rule. It is not a property of the estimate itself. For example, say we estimated an that 8.2% return on schooling. It is tempting to say that 8.2% is an unbiased estimate of the return to schooling, but that’s technically incorrect. The rule used to get \(\widehat{\beta_1}=0.082\) is unbiased (if we believe that \(u\) is unrelated to schooling), not the actual estimate itself.

The conditional expectation function (CEF) is the mean of some outcome \(y\) with some covariate \(x\) held fixed. Let’s focus more intently on this function.19 Let’s get the notation and some of the syntax out of the way. As noted earlier, we write the CEF as \(E(y_i\mid x_i)\). Note that the CEF is explicitly a function of \(x_i\). And because \(x_i\) is random, the CEF is random—although sometimes we work with particular values for \(x_i\), like \(E(y_i\mid x_i=8\text{ years schooling})\) or \(E(y_i\mid x_i=\text{Female})\). When there are treatment variables, then the CEF takes on two values: \(E(y_i\mid d_i=0)\) and \(E(y_i\mid d_i= 1)\). But these are special cases only.

An important complement to the CEF is the law of iterated expectations (LIE). This law says that an unconditional expectation can be written as the unconditional average of the CEF. In other words, \(E(y_i)=E \{E(y_i\mid x_i)\}\). This is a fairly simple idea: if you want to know the unconditional expectation of some random variable \(y\), you can simply calculate the weighted sum of all conditional expectations with respect to some covariate \(x\). Let’s look at an example. Let’s say that average grade-point for females is 3.5, average GPA for males is a 3.2, half the population is female, and half is male. Then:

\[\begin{align} E[GPA] & = E \big\{E(GPA_i\mid \text{Gender}_i) \big\} \\ & =(0.5 \times 3.5)+(3.2 \times 0.5) \\ & = 3.35 \end{align}\] You probably use LIE all the time and didn’t even know it. The proof is not complicated. Let \(x_i\) and \(y_i\) each be continuously distributed. The joint density is defined as \(f_{xy}(u,t)\). The conditional distribution of \(y\) given \(x=u\) is defined as \(f_y(t\mid x_i=u)\). The marginal densities are \(g_y(t)\) and \(g_x(u)\).

\[\begin{align} E \{E(y\mid x)\} & = \int E(y\mid x=u) g_x(u) du \\ & = \int \bigg[ \int tf_{y\mid x} (t\mid x=u) dt \bigg] g_x(u) du \\ & = \int \int t f_{y\mid x} (t\mid x=u) g_x(u) du dt \\ & = \int t \bigg[ \int f_{y\mid x} (t\mid x=u) g_x(u) du \bigg] dt \text{} \\ & = \int t [ f_{x,y}du] dt \\ & = \int t g_y(t) dt \\ & =E(y) \end{align}\] Check out how easy this proof is. The first line uses the definition of expectation. The second line uses the definition of conditional expectation. The third line switches the integration order. The fourth line uses the definition of joint density. The fifth line replaces the prior line with the subsequent expression. The sixth line integrates joint density over the support of x which is equal to the marginal density of \(y\). So restating the law of iterated expectations: \(E(y_i)= E\{E(y\mid x_i)\}\).

The first property of the CEF we will discuss is the CEF decomposition property. The power of LIE comes from the way it breaks a random variable into two pieces—the CEF and a residual with special properties. The CEF decomposition property states that \[ y_i=E(y_i\mid x_i)+\varepsilon_i\ \]

where (i) \(\varepsilon_i\) is mean independent of \(x_i\), That is,

\[ E(\varepsilon_i\mid x_i)=0 \]

and (ii) \(\varepsilon_i\) is not correlated with any function of \(x_i\).

The theorem says that any random variable \(y_i\) can be decomposed into a piece that is explained by \(x_i\) (the CEF) and a piece that is left over and orthogonal to any function of \(x_i\). I’ll prove the (i) part first. Recall that \(\varepsilon_i=y_i - E(y_i\mid x_i)\) as we will make a substitution in the second line below.

\[\begin{align} E(\varepsilon_i\mid x_i) & =E\Big(y_i- E(y_i\mid x_i)\mid x_i\Big) \\ & =E(y_i\mid x_i) - E(y_i\mid x_i) \\ & = 0 \end{align}\]

The second part of the theorem states that \(\varepsilon_i\) is uncorrelated with any function of \(x_i\). Let \(h(x_i)\) be any function of \(x_i\). Then \(E( h(x_i) \varepsilon_i)=E \{ h(x_i) E(\varepsilon_i\mid x_i)\}\) The second term in the interior product is equal to zero by mean independence.20

The second property is the CEF prediction property. This states that \(E(y_i\mid x_i)=\arg\min_{m(x_i)}E[(y-m(x_i))^2]\), where \(m(x_i)\) is any function of \(x_i\). In words, this states that the CEF is the minimum mean squared error of \(y_i\) given \(x_i\). By adding \(E(y_i\mid x_i) - E(y_i\mid x_i)=0\) to the right side we get \[ \Big[y_i-m(x_i)\Big]^2=\Big[\big(y_i-E[y_i\mid x_i]\big) +\big(E(y_i\mid x_i)- m(x_i)\big)\Big]^2 \] I personally find this easier to follow with simpler notation. So replace this expression with the following terms: \[ (a-b+b-c)^2 = (a-b)^2 + 2 (b-c)(a-b) + (b-c)^2 \] Distribute the terms, rearrange them, and replace the terms with their original values until you get the following:

\[\begin{align} \arg\min \Big(y_i- E(y_i\mid x_i)\Big)^2 & + 2\Big(E(y_i\mid x_i)- m(x_i)\Big)\times \Big(y_i - E(y_i\mid x_i)\Big)\\ & +\Big(E(y_i\mid x_i)+m(x_i)\Big)^2 \end{align}\]

Now minimize the function with respect to \(m(x_i)\). When minimizing this function with respect to \(m(x_i)\), note that the first term \((y_i-E(y_i\mid x_i))^2\) doesn’t matter because it does not depend on \(m(x_i)\). The second and third terms, though, do depend on \(m(x_i)\). So rewrite \(2(E (y_i\mid x_i)-m(x_i))\) as \(h(x_i)\). Also set \(\varepsilon_i\) equal to \([y_i-E(y_i\mid x_i)]\) and substitute \[ \arg\min\varepsilon_i^2+h(x_i)\varepsilon_i+ \Big[ E(y_i\mid x_i)+m(x_i)\Big]^2 \] Now minimizing this function and setting it equal to zero we get \[ h'(x_i)\varepsilon_i \] which equals zero by the decomposition property.

The final property of the CEF that we will discuss is the analysis of variance theorem, or ANOVA. According to this theorem, the unconditional variance in some random variable is equal to the variance in the conditional expectation plus the expectation of the conditional variance, or \[ V(y_i)=V\Big[E(y_i\mid x_i)\Big]+ E\Big[V(y_i\mid x_i)\Big] \] where \(V\) is the variance and \(V(y_i\mid x_i)\) is the conditional variance.

As you probably know by now, the use of least squares in applied work is extremely common. That’s because regression has several justifications. We discussed one—unbiasedness under certain assumptions about the error term. But I’d like to present some slightly different arguments. Angrist and Pischke (2009) argue that linear regression may be useful even if the underlying CEF itself is not linear, because regression is a good approximation of the CEF. So keep an open mind as I break this down a little bit more.

Angrist and Pischke (2009) give several arguments for using regression, and the linear CEF theorem is probably the easiest. Let’s assume that we are sure that the CEF itself is linear. So what? Well, if the CEF is linear, then the linear CEF theorem states that the population regression is equal to that linear CEF. And if the CEF is linear, and if the population regression equals it, then of course you should use the population regression to estimate CEF. If you need a proof for what could just as easily be considered common sense, I provide one. If \(E(y_i\mid x_i)\) is linear, then \(E(y_i\mid x_i)= x'\widehat{\beta}\) for some vector \(\widehat{\beta}\). By the decomposition property, you get: \[ E\Big(x(y-E(y\mid x)\Big)=E\Big(x(y-x'\widehat{\beta})\Big)=0 \] And then when you solve this, you get \(\widehat{\beta}=\beta\). Hence \(E(y\mid x)=x'\beta\).

There are a few other linear theorems that are worth bringing up in this context. For instance, recall that the CEF is the minimum mean squared error predictor of \(y\) given \(x\) in the class of all functions, according to the CEF prediction property. Given this, the population regression function is the best that we can do in the class of all linear functions.21