Causal Inference:

The Mixtape.

Buy the print version today:

Buy the print version today:

\[ % Define terms \newcommand{\Card}{\text{Card }} \DeclareMathOperator*{\cov}{cov} \DeclareMathOperator*{\var}{var} \DeclareMathOperator{\Var}{Var\,} \DeclareMathOperator{\Cov}{Cov\,} \DeclareMathOperator{\Prob}{Prob} \newcommand{\independent}{\perp \!\!\! \perp} \DeclareMathOperator{\Post}{Post} \DeclareMathOperator{\Pre}{Pre} \DeclareMathOperator{\Mid}{\,\vert\,} \DeclareMathOperator{\post}{post} \DeclareMathOperator{\pre}{pre} \]

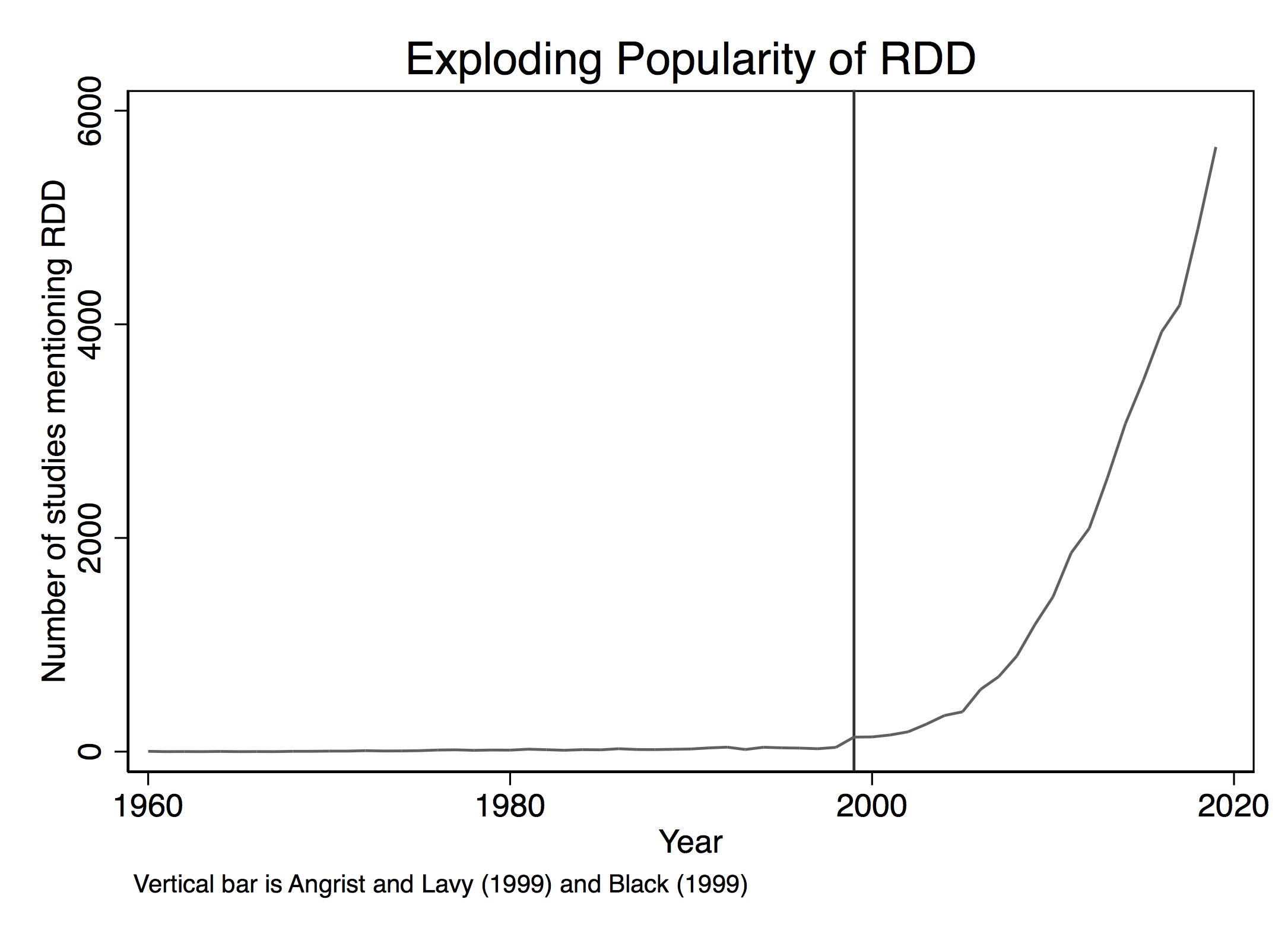

Over the past twenty years, interest in the regression-discontinuity design (RDD) has increased (Figure 6.1). It was not always so popular, though. The method dates back about sixty years to Donald Campbell, an educational psychologist, who wrote several studies using it, beginning with Thistlehwaite and Campbell (1960).1 In a wonderful article on the history of thought around RDD, Cook (2008) documents its social evolution. Despite Campbell’s many efforts to advocate for its usefulness and understand its properties, RDD did not catch on beyond a few doctoral students and a handful of papers here and there. Eventually, Campbell too moved on from it.

To see its growing popularity, let’s look at counts of papers from Google Scholar by year that mentioned the phrase “regression discontinuity design” (see Figure 6.1).2 Thistlehwaite and Campbell (1960) had no influence on the broader community of scholars using his design, confirming what Cook (2008) wrote. The first time RDD appears in the economics community is with an unpublished econometrics paper (Goldberger 1972). Starting in 1976, RDD finally gets annual double-digit usage for the first time, after which it begins to slowly tick upward. But for the most part, adoption was imperceptibly slow.

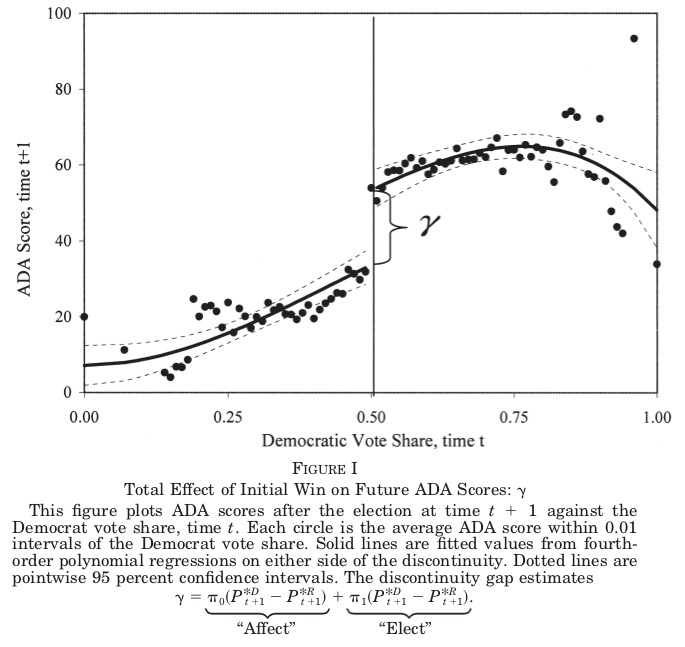

But then things change starting in 1999. That’s the year when a couple of notable papers in the prestigious Quarterly Journal of Economics resurrected the method. These papers were Angrist and Lavy (1999) and Black (1999), followed by Hahn, Todd, and Klaauw (2001) two years later. Angrist and Lavy (1999), which we discuss in detail later, studied the effect of class size on pupil achievement using an unusual feature in Israeli public schools that created smaller classes when the number of students passed a particular threshold. Black (1999) used a kind of RDD approach when she creatively exploited discontinuities at the geographical level created by school district zoning to estimate people’s willingness to pay for better schools. The year 1999 marks a watershed in the design’s widespread adoption. A 2010 Journal of Economic Literature article by Lee and Lemieux, which has nearly 4,000 cites shows up in a year with nearly 1,500 new papers mentioning the method. By 2019, RDD output would be over 5,600. The design is today incredibly popular and shows no sign of slowing down.

But 1972 to 1999 is a long time without so much as a peep for what is now considered one of the most credible research designs with observational data, so what gives? Cook (2008) says that RDD was “waiting for life” during this time. The conditions for life in empirical microeconomics were likely the growing acceptance of the potential outcomes framework among microeconomists (i.e., the so-called credibility revolution led by Josh Angrist, David Card, Alan Krueger, Steven Levitt, and many others) as well as, and perhaps even more importantly, the increased availability of large digitized the administrative data sets, many of which often captured unusual administrative rules for treatment assignments. These unusual rules, combined with the administrative data sets’ massive size, provided the much-needed necessary conditions for Campbell’s original design to bloom into thousands of flowers.

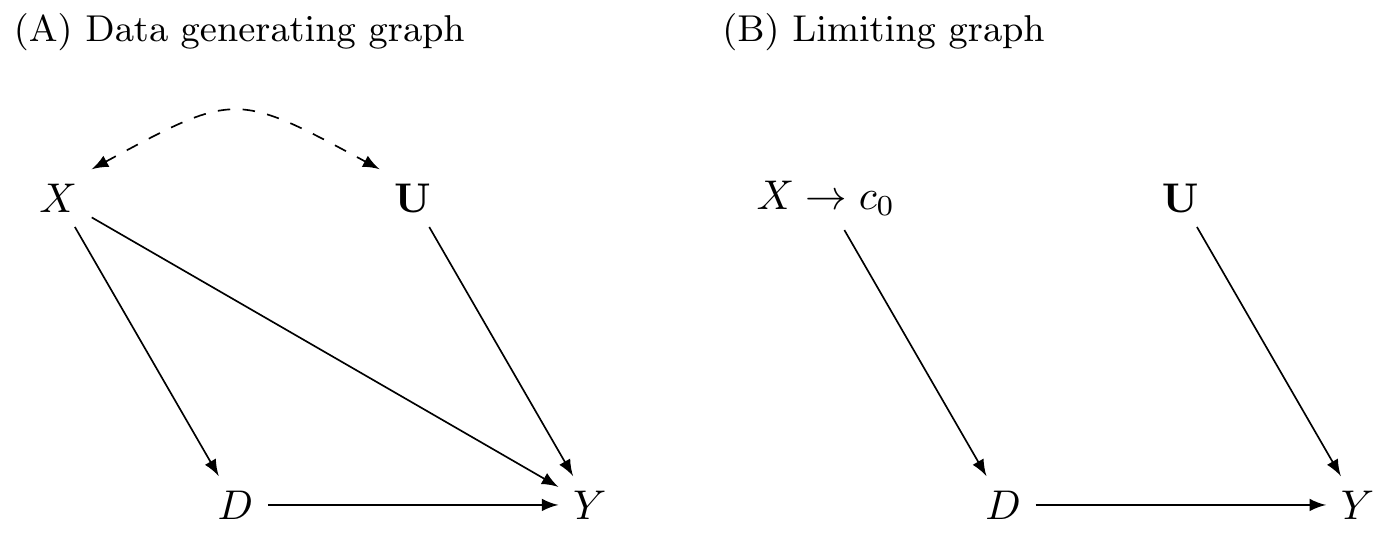

So what’s the big deal? Why is RDD so special? The reason RDD is so appealing to many is because of its ability to convincingly eliminate selection bias. This appeal is partly due to the fact that its underlying identifying assumptions are viewed by many as easier to accept and evaluate. Rendering selection bias impotent, the procedure is capable of recovering average treatment effects for a given subpopulation of units. The method is based on a simple, intuitive idea. Consider the following DAG developed by Steiner et al. (2017) that illustrates this method very well.

In the first graph, \(X\) is a continuous variable assigning units to treatment \(D\) (\(X \rightarrow D\)). This assignment of units to treatment is based on a “cutoff” score \(c_0\) such that any unit with a score above the cutoff gets placed into the treatment group, and units below do not. An example might be a charge of driving while intoxicated (or impaired; DWI). Individuals with a blood-alcohol content of 0.08 or higher are arrested and charged with DWI, whereas those with a blood-alcohol level below 0.08 are not (Hansen 2015). The assignment variable may itself independently affect the outcome via the \(X \rightarrow Y\) path and may even be related to a set of variables \(U\) that independently determine \(Y\). Notice for the moment that a unit’s treatment status is exclusively determined by the assignment rule. Treatment is not determined by \(U\).

This DAG clearly shows that the assignment variable \(X\)—or what is often called the “running variable”—is an observable confounder since it causes both \(D\) and \(Y\). Furthermore, because the assignment variable assigns treatment on the basis of a cutoff, we are never able to observe units in both treatment and control for the same value of \(X\). Calling back to our matching chapter, this means a situation such as this one does not satisfy the overlap condition needed to use matching methods, and therefore the backdoor criterion cannot be met.3

However, we can identify causal effects using RDD, which is illustrated in the limiting graph DAG. We can identify causal effects for those subjects whose score is in a close neighborhood around some cutoff \(c_0\). Specifically, as we will show, the average causal effect for this subpopulation is identified as \(X \rightarrow c_0\) in the limit. This is possible because the cutoff is the sole point where treatment and control subjects overlap in the limit.

There are a variety of explicit assumptions buried in this graph that must hold in order for the methods we will review later to recover any average causal effect. But the main one I discuss here is that the cutoff itself cannot be endogenous to some competing intervention occurring at precisely the same moment that the cutoff is triggering units into the \(D\) treatment category. This assumption is called continuity, and what it formally means is that the expected potential outcomes are continuous at the cutoff. If expected potential outcomes are continuous at the cutoff, then it necessarily rules out competing interventions occurring at the same time.

The continuity assumption is reflected graphically by the absence of an arrow from \(X \rightarrow Y\) in the second graph because the cutoff \(c_0\) has cut it off. At \(c_0\), the assignment variable \(X\) no longer has a direct effect on \(Y\). Understanding continuity should be one of your main goals in this chapter. It is my personal opinion that the null hypothesis should always be continuity and that any discontinuity necessarily implies some cause, because the tendency for things to change gradually is what we have come to expect in nature. Jumps are so unnatural that when we see them happen, they beg for explanation. Charles Darwin, in his On the Origin of Species, summarized this by saying Natura non facit saltum, or “nature does not make jumps.” Or to use a favorite phrase of mine from growing up in Mississippi, if you see a turtle on a fencepost, you know he didn’t get there by himself.

That’s the heart and soul of RDD. We use our knowledge about selection into treatment in order to estimate average treatment effects. Since we know the probability of treatment assignment changes discontinuously at \(c_0\), then our job is simply to compare people above and below \(c_0\) to estimate a particular kind of average treatment effect called the local average treatment effect, or LATE (Guideo W. Imbens and Angrist 1994). Because we do not have overlap, or “common support,” we must rely on extrapolation, which means we are comparing units with different values of the running variable. They only overlap in the limit as \(X\) approaches the cutoff from either direction. All methods used for RDD are ways of handling the bias from extrapolation as cleanly as possible.

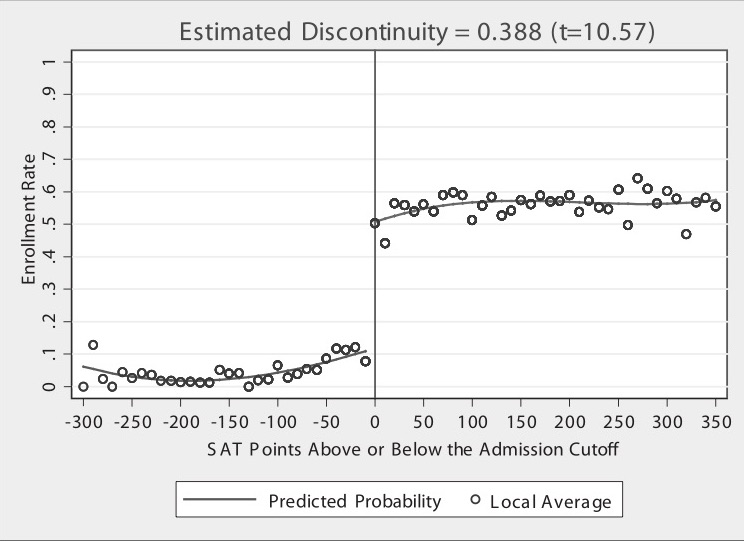

As I’ve said before, and will say again and again—pictures of your main results, including your identification strategy, are absolutely essential to any study attempting to convince readers of a causal effect. And RDD is no different. In fact, pictures are the comparative advantage of RDD. RDD is, like many modern designs, a very visually intensive design. It and synthetic control are probably two of the most visually intensive designs you’ll ever encounter, in fact. So to help make RDD concrete, let’s first look at a couple of pictures. The following discussion derives from Hoekstra (2009).4

Labor economists had for decades been interested in estimating the causal effect of college on earnings. But Hoekstra wanted to crack open the black box of college’s returns a little by checking whether there were heterogeneous returns to college. He does this by estimating the causal effect of attending the state flagship university on earnings. State flagship universities are often more selective than other public universities in the same state. In Texas, the top 7% of graduating high school students can select their university in state, and the modal first choice is University of Texas at Austin. These universities are often environments of higher research, with more resources and strongly positive peer effects. So it is natural to wonder whether there are heterogeneous returns across public universities.

The challenge in this type of question should be easy to see. Let’s say that we were to compare individuals who attended the University of Florida to those who attended the University of South Florida. Insofar as there is positive selection into the state flagship school, we might expect individuals with higher observed and unobserved ability to sort into the state flagship school. And insofar as that ability increases one’s marginal product, then we expect those individuals to earn more in the workforce regardless of whether they had in fact attended the state flagship. Such basic forms of selection bias confound our ability to estimate the causal effect of attending the state flagship on earnings. But Hoekstra (2009) had an ingenious strategy to disentangle the causal effect from the selection bias using an RDD. To illustrate, let’s look at two pictures associated with this interesting study.

Before talking about the picture, I want to say something about the data. Hoekstra has data on all applications to the state flagship university. To get these data, he would’ve had to build a relationship with the admissions office. This would have involved making introductions, holding meetings to explain his project, convincing administrators the project had value for them as well as him, and ultimately winning their approval to cooperatively share the data. This likely would’ve involved the school’s general counsel, careful plans to de-identify the data, agreements on data storage, and many other assurances that students’ names and identities were never released and could not be identified. There is a lot of trust and social capital that must be created to do projects like this, and this is the secret sauce in most RDDs—your acquisition of the data requires far more soft skills, such as friendship, respect, and the building of alliances, than you may be accustomed to. This isn’t as straightforward as simply downloading the CPS from IPUMS; it’s going to take genuine smiles, hustle, and luck. Given that these agencies have considerable discretion in whom they release data to, it is likely that certain groups will have more trouble than others in acquiring the data. So it is of utmost importance that you approach these individuals with humility, genuine curiosity, and most of all, scientific integrity. They ultimately are the ones who can give you the data if it is not public use, so don’t be a jerk.5

But on to the picture. Figure 6.2 has a lot going on, and it’s worth carefully unpacking each element for the reader. There are four distinct elements to this picture that I want to focus on. First, notice the horizontal axis. It ranges from a negative number to a positive number with a zero around the center of the picture. The caption reads “SAT Points Above or Below the Admission Cutoff.” Hoekstra has “recentered” the university’s admissions criteria by subtracting the admission cutoff from the students’ actual score, which is something I discuss in more detail later in this chapter. The vertical line at zero marks the “cutoff,” which was this university’s minimum SAT score for admissions. It appears it was binding, but not deterministically, for there are some students who enrolled but did not have the minimum SAT requirements. These individuals likely had other qualifications that compensated for their lower SAT scores. This recentered SAT score is in today’s parlance called the “running variable.”

Second, notice the dots. Hoekstra used hollow dots at regular intervals along the recentered SAT variable. These dots represent conditional mean enrollments per recentered SAT score. While his administrative data set contains thousands and thousands of observations, he only shows the conditional means along evenly spaced out bins of the recentered SAT score.

Third are the curvy lines fitting the data. Notice that the picture has two such lines—there is a curvy line fitted to the left of zero, and there is a separate line fit to the right. These lines are the least squares fitted values of the running variable, where the running variable was allowed to take on higher-order terms. By including higher-order terms in the regression itself, the fitted values are allowed to more flexibly track the central tendencies of the data itself. But the thing I really want to focus your attention on is that there are two lines, not one. He fit the lines separately to the left and right of the cutoff.

Finally, and probably the most vivid piece of information in this picture—the gigantic jump in the dots at zero on the recentered running variable. What is going on here? Well, I think you probably know, but let me spell it out. The probability of enrolling at the flagship state university jumps discontinuously when the student just barely hits the minimum SAT score required by the school. Let’s say that the score was 1250. That means a student with 1240 had a lower chance of getting in than a student with 1250. Ten measly points and they have to go a different path.

Imagine two students—the first student got a 1240, and the second got a 1250. Are these two students really so different from one another? Well, sure: those two individual students are likely very different. But what if we had hundreds of students who made 1240 and hundreds more who made 1250. Don’t you think those two groups are probably pretty similar to one another on observable and unobservable characteristics? After all, why would there be suddenly at 1250 a major difference in the characteristics of the students in a large sample? That’s the question you should reflect on. If the university is arbitrarily picking a reasonable cutoff, are there reasons to believe they are also picking a cutoff where the natural ability of students jumps at that exact spot?

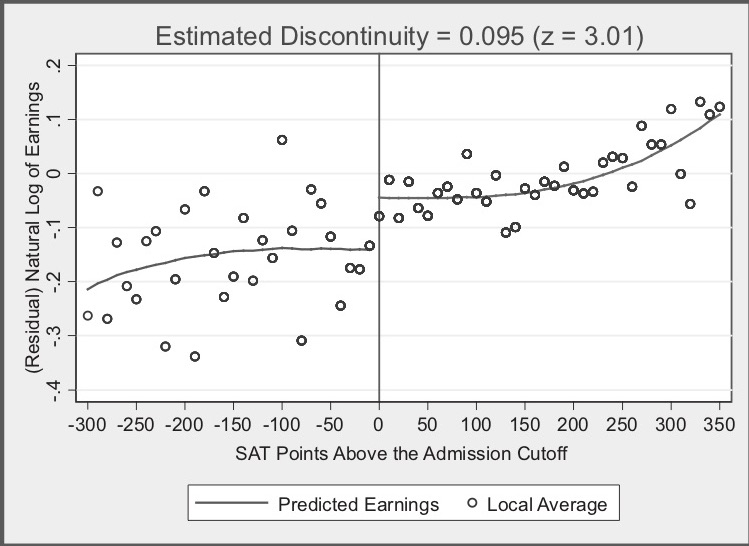

But I said Hoekstra is evaluating the effect of attending the state flagship university on future earnings. Here’s where the study gets even more intriguing. States collect data on workers in a variety of ways. One is through unemployment insurance tax reports. Hoekstra’s partner, the state flagship university, sent the university admissions data directly to a state office in which employers submit unemployment insurance tax reports. The university had social security numbers, so the matching of student to future worker worked quite well since a social security number uniquely identifies a worker. The social security numbers were used to match quarterly earnings records from 1998 through the second quarter of 2005 to the university records. He then estimated: \[ \ln(\text{Earnings})=\psi_{\text{Year}} + \omega_{\text{Experience}} + \theta_{\text{Cohort}} + \varepsilon \] where \(\psi\) is a vector of year dummies, \(\omega\) is a dummy for years after high school that earnings were observed, and \(\theta\) is a vector of dummies controlling for the cohort in which the student applied to the university (e.g., 1988). The residuals from this regression were then averaged for each applicant, with the resulting average residual earnings measure being used to implement a partialled out future earnings variable according to the Frisch-Waugh-Lovell theorem. Hoekstra then takes each students’ residuals from the natural log of earnings regression and collapses them into conditional averages for bins along the recentered running variable. Let’s look at that in Figure 6.3.

In this picture, we see many of the same elements we saw in Figure 6.2. For instance, we see the recentered running variable along the horizontal axis, the little hollow dots representing conditional means, the curvy lines which were fit left and right of the cutoff at zero, and a helpful vertical line at zero. But now we also have an interesting title: “Estimated Discontinuity = 0.095 (z = 3.01).” What is this exactly?

The visualization of a discontinuous jump at zero in earnings isn’t as compelling as the prior figure, so Hoekstra conducts hypothesis tests to determine if the mean between the groups just below and just above are the same. He finds that they are not: those just above the cutoff earn 9.5% higher wages in the long term than do those just below. In his paper, he experiments with a variety of binning of the data (what he calls the “bandwidth”), and his estimates when he does so range from 7.4% to 11.1%.

Now let’s think for a second about what Hoekstra is finding. Hoekstra is finding that at exactly the point where workers experienced a jump in the probability of enrolling at the state flagship university, there is, ten to fifteen years later, a separate jump in logged earnings of around 10%. Those individuals who just barely made it in to the state flagship university made around 10% more in long-term earnings than those individuals who just barely missed the cutoff.

This, again, is the heart and soul of the RDD. By exploiting institutional knowledge about how students were accepted (and subsequently enrolled) into the state flagship university, Hoekstra was able to craft an ingenious natural experiment. And insofar as the two groups of applicants right around the cutoff have comparable future earnings in a world where neither attended the state flagship university, then there is no selection bias confounding his comparison. And we see this result in powerful, yet simple graphs. This study was an early one to show that not only does college matter for long-term earnings, but the sort of college you attend—even among public universities—matters as well.

RDD is all about finding “jumps” in the probability of treatment as we move along some running variable \(X\). So where do we find these jumps? Where do we find these discontinuities? The answer is that humans often embed jumps into rules. And sometimes, if we are lucky, someone gives us the data that allows us to use these rules for our study.

I am convinced that firms and government agencies are unknowingly sitting atop a mountain of potential RDD-based projects. Students looking for thesis and dissertation ideas might try to find them. I encourage you to find a topic you are interested in and begin building relationships with local employers and government administrators for whom that topic is a priority. Take them out for coffee, get to know them, learn about their job, and ask them how treatment assignment works. Pay close attention to precisely how individual units get assigned to the program. Is it random? Is it via a rule? Oftentimes they will describe a process whereby a running variable is used for treatment assignment, but they won’t call it that. While I can’t promise this will yield pay dirt, my hunch, based in part on experience, is that they will end up describing to you some running variable that when it exceeds a threshold, people switch into some intervention. Building alliances with local firms and agencies can pay when trying to find good research ideas.

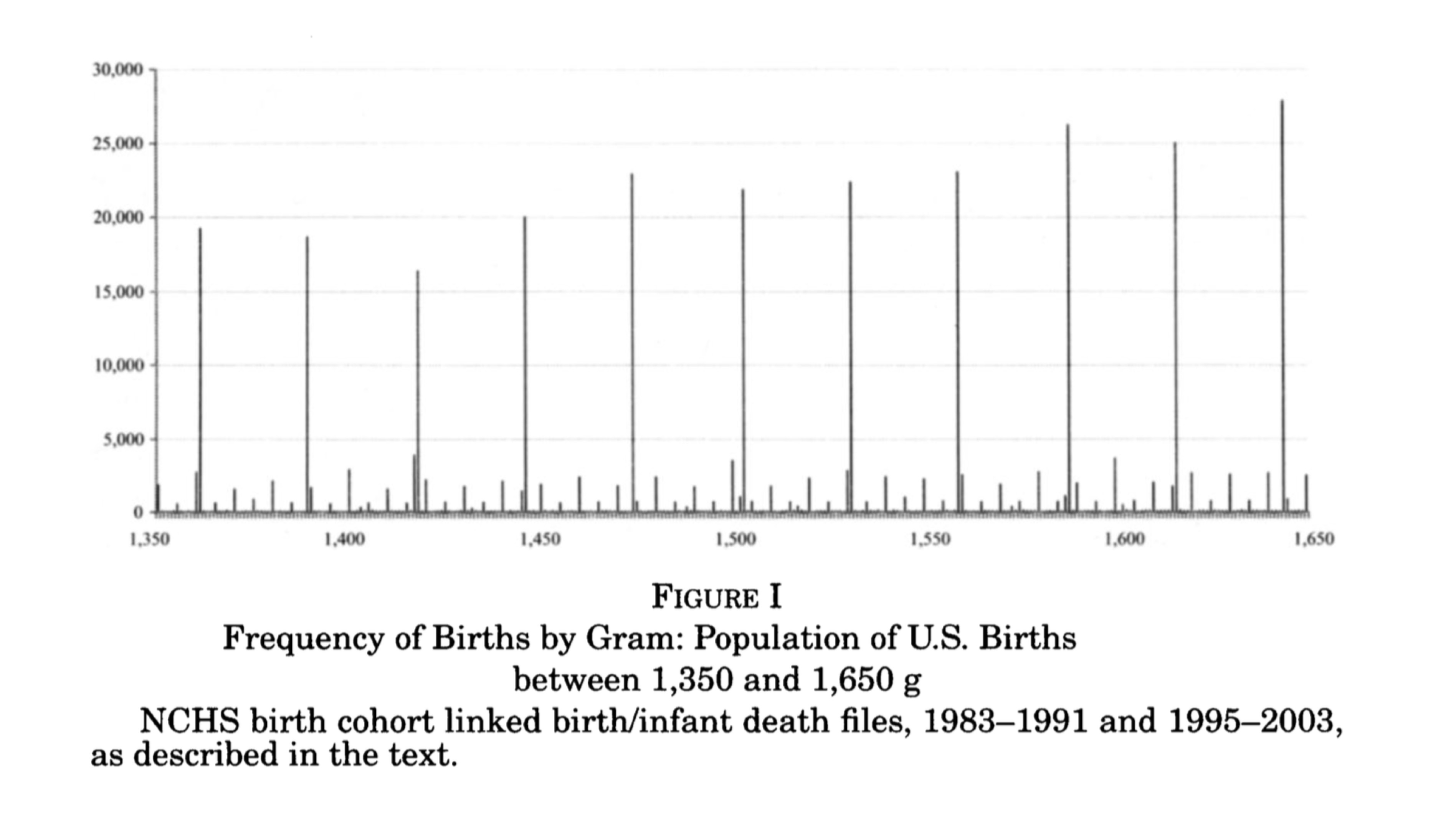

The validity of an RDD doesn’t require that the assignment rule be arbitrary. It only requires that it be known, precise and free of manipulation. The most effective RDD studies involve programs where \(X\) has a “hair trigger” that is not tightly related to the outcome being studied. Examples include the probability of being arrested for DWI jumping at greater than 0.08 blood-alcohol content (Hansen 2015); the probability of receiving health-care insurance jumping at age 65, (Card, Dobkin, and Maestas 2008); the probability of receiving medical attention jumping when birthweight falls below 1,500 grams (Almond et al. 2010; Barreca et al. 2011); the probability of attending summer school when grades fall below some minimum level (Jacob and Lefgen 2004), and as we just saw, the probability of attending the state flagship university jumping when the applicant’s test scores exceed some minimum requirement (Hoekstra 2009).

In all these kinds of studies, we need data. But specifically, we need a lot of data around the discontinuities, which itself implies that the data sets useful for RDD are likely very large. In fact, large sample sizes are characteristic features of the RDD. This is also because in the face of strong trends in the running variable, sample-size requirements get even larger. Researchers are typically using administrative data or settings such as birth records where there are many observations.

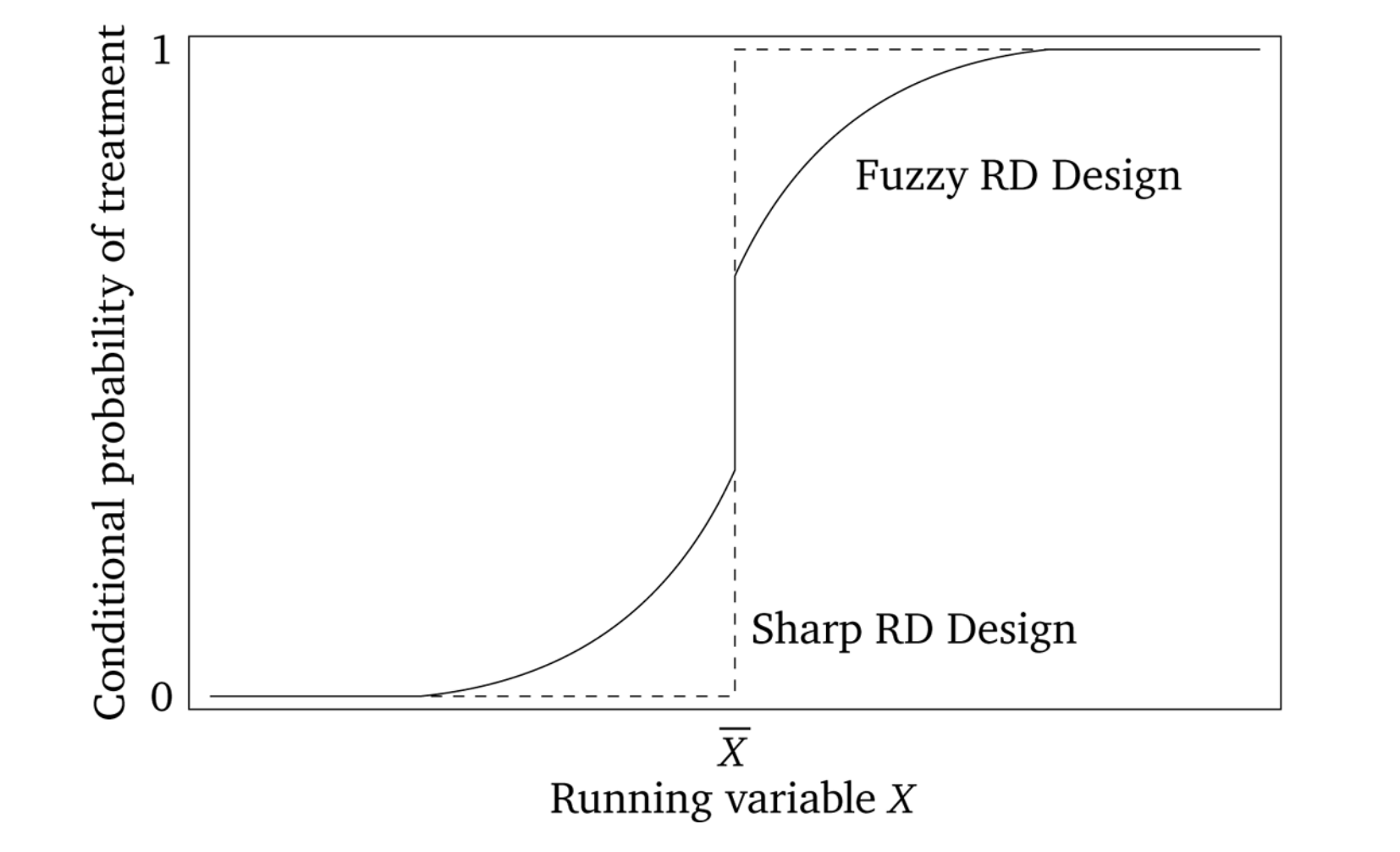

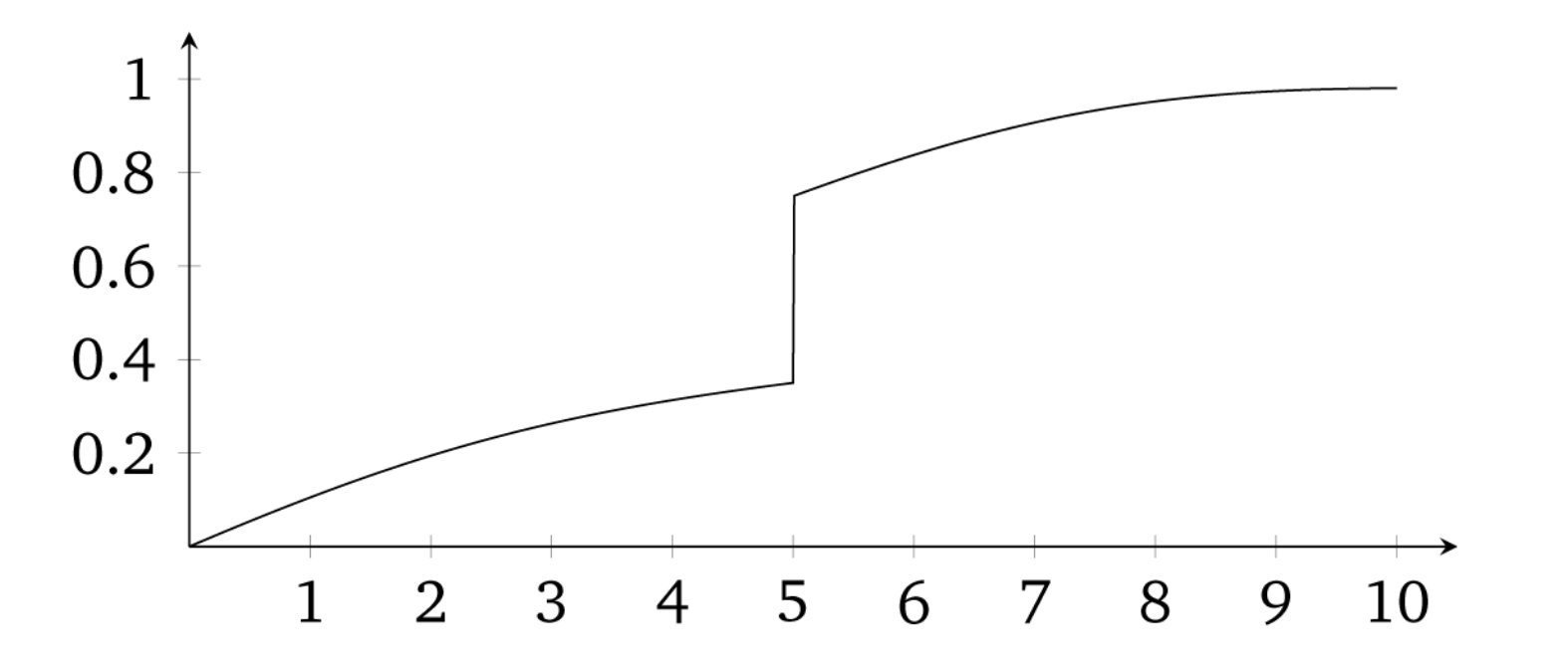

There are generally accepted two kinds of RDD studies. There are designs where the probability of treatment goes from 0 to 1 at the cutoff, or what is called a “sharp” design. And there are designs where the probability of treatment discontinuously increases at the cutoff. These are often called “fuzzy” designs. In all of these, though, there is some running variable \(X\) that, upon reaching a cutoff \(c_0\), the likelihood of receiving some treatment flips. Let’s look at the diagram in Figure 6.4, which illustrates the similarities and differences between the two designs.

Sharp RDD is where treatment is a deterministic function of the running variable \(X\).6 An example might be Medicare enrollment, which happens sharply at age 65, excluding disability situations. A fuzzy RDD represents a discontinuous “jump” in the probability of treatment when \(X>c_0\). In these fuzzy designs, the cutoff is used as an instrumental variable for treatment, like Angrist and Lavy (1999), who instrument for class size with a class-size function they created from the rules used by Israeli schools to construct class sizes.

More formally, in a sharp RDD, treatment status is a deterministic and discontinuous function of a running variable \(X_i\), where \[ D_i = \begin{cases} 1 \text{ if } & X_i\geq{c_0} \\ 0 \text{ if } & X_i < c_0 \end{cases} \] where \(c_0\) is a known threshold or cutoff. If you know the value of \(X_i\) for unit \(i\), then you know treatment assignment for unit \(i\) with certainty. But, if for every value of \(X\) you can perfectly predict the treatment assignment, then it necessarily means that there are no overlap along the running variable.

If we assume constant treatment effects, then in potential outcomes terms, we get

\[ \begin{align} Y_i^0 & = \alpha + \beta X_i \\ Y^1_i & = Y_i^0 + \delta \end{align} \]

Using the switching equation, we get

\[ \begin{align} Y_i & =Y_i^0 + (Y_i^1 - Y_i^0) D_i \\ Y_i & =\alpha+\beta X_i + \delta D_i + \varepsilon_i \end{align} \]

where the treatment effect parameter, \(\delta\), is the discontinuity in the conditional expectation function:

\[ \begin{align} \delta & =\lim_{X_i\rightarrow{X_0}} E\big[Y^1_i\mid X_i=X_0\big] - \lim_{X_0\leftarrow{X_i}} E\big[Y^0_i\mid X_i=X_0\big] \\ & =\lim_{X_i\rightarrow{X_0}} E\big[Y_i\mid X_i=X_0\big]- \lim_{X_0\leftarrow{X_i}} E\big[Y_i\mid X_i=X_0\big] \end{align} \]

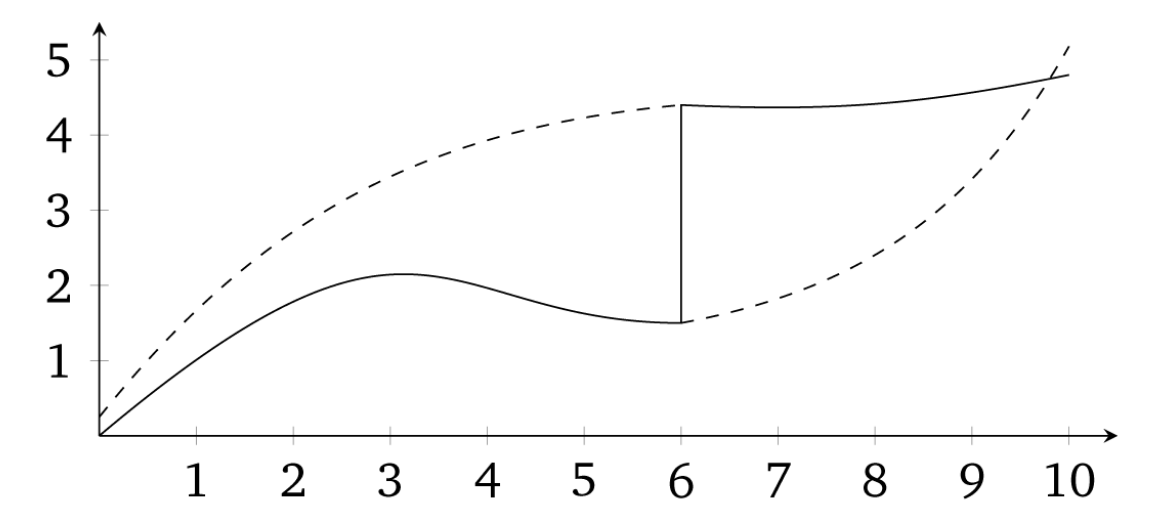

The sharp RDD estimation is interpreted as an average causal effect of the treatment as the running variable approaches the cutoff in the limit, for it is only in the limit that we have overlap. This average causal effect is the local average treatment effect (LATE). We discuss LATE in greater detail in the instrumental variables, but I will say one thing about it here. Since identification in an RDD is a limiting case, we are technically only identifying an average causal effect for those units at the cutoff. Insofar as those units have treatment effects that differ from units along the rest of the running variable, then we have only estimated an average treatment effect that is local to the range around the cutoff. We define this local average treatment effect as follows: \[ \delta_{SRD}=E\big[Y^1_i - Y_i^0\mid X_i=c_0] \] Notice the role that extrapolation plays in estimating treatment effects with sharp RDD. If unit \(i\) is just below \(c_0\), then \(D_i=0\). But if unit \(i\) is just above \(c_0\), then the \(D_i=1\). But for any value of \(X_i\), there are either units in the treatment group or the control group, but not both. Therefore, the RDD does not have common support, which is one of the reasons we rely on extrapolation for our estimation. See Figure 6.5.

Notes: Dashed lines are extrapolations

The key identifying assumption in an RDD is called the continuity assumption. It states that \(E[Y^0_i\mid X=c_0]\) and \(E[Y_i^1\mid X=c_0]\) are continuous (smooth) functions of \(X\) even across the \(c_0\) threshold. Absent the treatment, in other words, the expected potential outcomes wouldn’t have jumped; they would’ve remained smooth functions of \(X\). But think about what that means for a moment. If the expected potential outcomes are not jumping at \(c_0\), then there necessarily are no competing interventions occurring at \(c_0\). Continuity, in other words, explicitly rules out omitted variable bias at the cutoff itself. All other unobserved determinants of \(Y\) are continuously related to the running variable \(X\). Does there exist some omitted variable wherein the outcome, would jump at \(c_0\) even if we disregarded the treatment altogether? If so, then the continuity assumption is violated and our methods do not require the LATE.

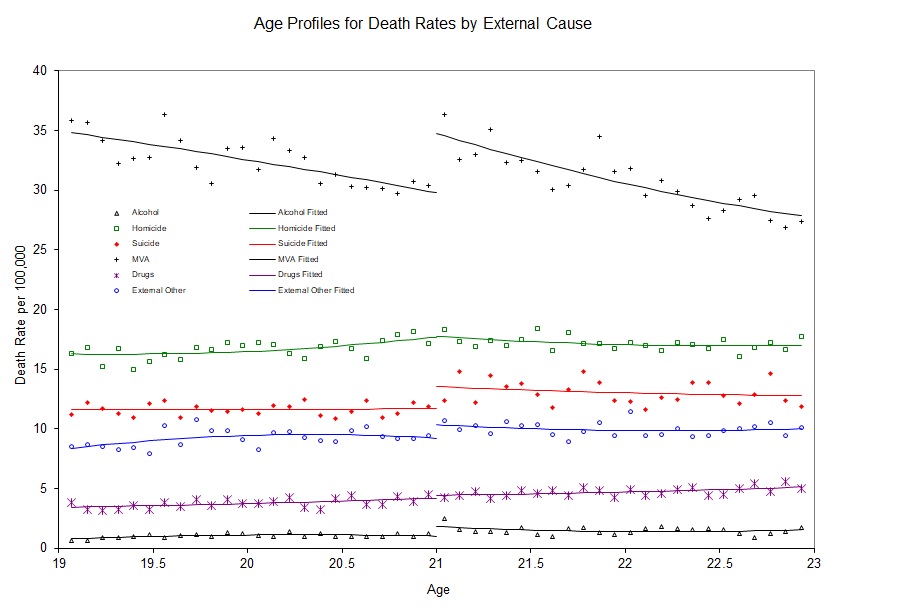

I apologize if I’m beating a dead horse, but continuity is a subtle assumption and merits a little more discussion. The continuity assumption means that \(E[Y^1\mid X]\) wouldn’t have jumped at \(c_0\). If it had jumped, then it means something other than the treatment caused it to jump because \(Y^1\) is already under treatment. So an example might be a study finding a large increase in motor vehicle accidents at age 21. I’ve reproduced a figure from and interesting study on mortality rates for different types of causes (Carpenter and Dobkin 2009). I have reproduced one of the key figures in Figure 6.6. Notice the large discontinuous jump in motor vehicle death rates at age 21. The most likely explanation is that age 21 causes people to drink more, and sometimes even while they are driving.

But this is only a causal effect if motor vehicle accidents don’t jump at age 21 for other reasons. Formally, this is exactly what is implied by continuity—the absence of simultaneous treatments at the cutoff. For instance, perhaps there is something biological that happens to 21-year-olds that causes them to suddenly become bad drivers. Or maybe 21-year-olds are all graduating from college at age 21, and during celebrations, they get into wrecks. To test this, we might replicate Carpenter and Dobkin (2009) using data from Uruguay, where the drinking age is 18. If we saw a jump in motor vehicle accidents at age 21 in Uruguay, then we might have reason to believe the continuity assumption does not hold in the United States. Reasonably defined placebos can help make the case that the continuity assumption holds, even if it is not a direct test per se.

Sometimes these abstract ideas become much easier to understand with data, so here is an example of what we mean using a simulation.

clear

capture log close

set obs 1000

set seed 1234567

* Generate running variable. Stata code attributed to Marcelo Perraillon.

gen x = rnormal(50, 25)

replace x=0 if x < 0

drop if x > 100

sum x, det

* Set the cutoff at X=50. Treated if X > 50

gen D = 0

replace D = 1 if x > 50

gen y1 = 25 + 0*D + 1.5*x + rnormal(0, 20)

* Potential outcome Y1 not jumping at cutoff (continuity)

twoway (scatter y1 x if D==0, msize(vsmall) msymbol(circle_hollow)) (scatter y1 x if D==1, sort mcolor(blue) msize(vsmall) msymbol(circle_hollow)) (lfit y1 x if D==0, lcolor(red) msize(small) lwidth(medthin) lpattern(solid)) (lfit y1 x, lcolor(dknavy) msize(small) lwidth(medthin) lpattern(solid)), xtitle(Test score (X)) xline(50) legend(off)library(tidyverse)

# simulate the data

dat <- tibble(

x = rnorm(1000, 50, 25)

) %>%

mutate(

x = if_else(x < 0, 0, x)

) %>%

filter(x < 100)

# cutoff at x = 50

dat <- dat %>%

mutate(

D = if_else(x > 50, 1, 0),

y1 = 25 + 0 * D + 1.5 * x + rnorm(n(), 0, 20)

)

ggplot(aes(x, y1, colour = factor(D)), data = dat) +

geom_point(alpha = 0.5) +

geom_vline(xintercept = 50, colour = "grey", linetype = 2)+

stat_smooth(method = "lm", se = F) +

labs(x = "Test score (X)", y = "Potential Outcome (Y1)")import numpy as np

import pandas as pd

import statsmodels.api as sm

import statsmodels.formula.api as smf

from itertools import combinations

import plotnine as p

# read data

import ssl

ssl._create_default_https_context = ssl._create_unverified_context

def read_data(file):

return pd.read_stata("https://github.com/scunning1975/mixtape/raw/master/" + file)

np.random.seed(12282020)

dat = pd.DataFrame({'x': np.random.normal(50, 25, 1000)})

dat.loc[dat.x<0, 'x'] = 0

dat = dat[dat.x<100]

dat['D'] = 0

dat.loc[dat.x>50, 'D'] = 1

dat['y1'] = 25 + 0*dat.D + 1.5 * dat.x + np.random.normal(0, 20, dat.shape[0])

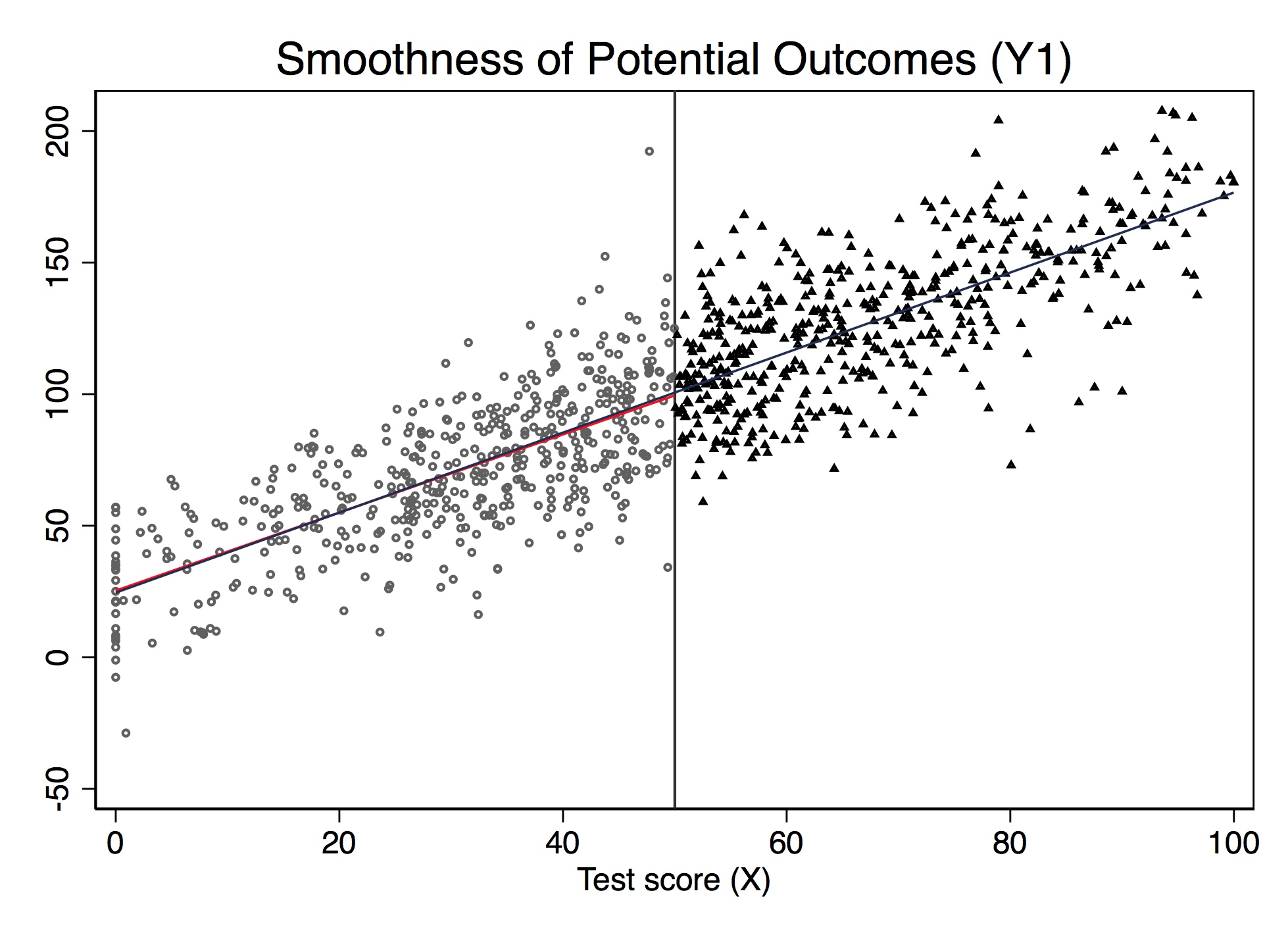

dat['y2'] = 25 + 40*dat.D + 1.5 * dat.x + np.random.normal(0, 20, dat.shape[0])

print('"Counterfactual Potential Outcomes')

p.ggplot(dat, p.aes(x='x', y='y1', color = 'factor(D)')) + p.geom_point(alpha = 0.5) + p.geom_vline(xintercept = 50, colour = "grey") + p.stat_smooth(method = "lm", se = 'F') + p.labs(x = "Test score (X)", y = "Potential Outcome (Y1)")Figure 6.7 shows the results from this simulation. Notice that the value of \(E[Y^1\mid X]\) is changing continuously over \(X\) and through \(c_0\). This is an example of the continuity assumption. It means absent the treatment itself, the expected potential outcomes would’ve remained a smooth function of \(X\) even as passing \(c_0\). Therefore, if continuity held, then only the treatment, triggered at \(c_0\), could be responsible for discrete jumps in \(E[Y\mid X]\).

The nice thing about simulations is that we actually observe the potential outcomes since we made them ourselves. But in the real world, we don’t have data on potential outcomes. If we did, we could test the continuity assumption directly. But remember—by the switching equation, we only observe actual outcomes, never potential outcomes. Thus, since units switch from \(Y^0\) to \(Y^1\) at \(c_0\), we actually can’t directly evaluate the continuity assumption. This is where institutional knowledge goes a long way, because it can help build the case that nothing else is changing at the cutoff that would otherwise shift potential outcomes.

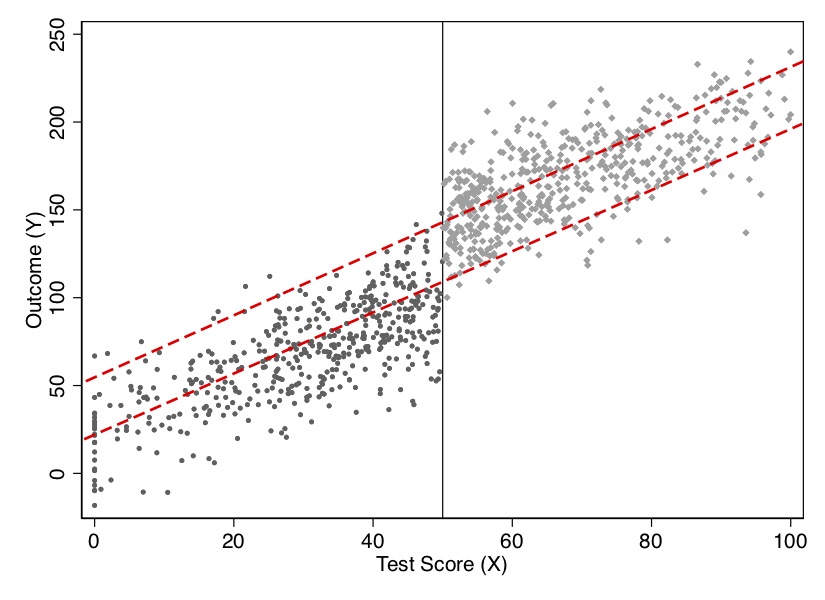

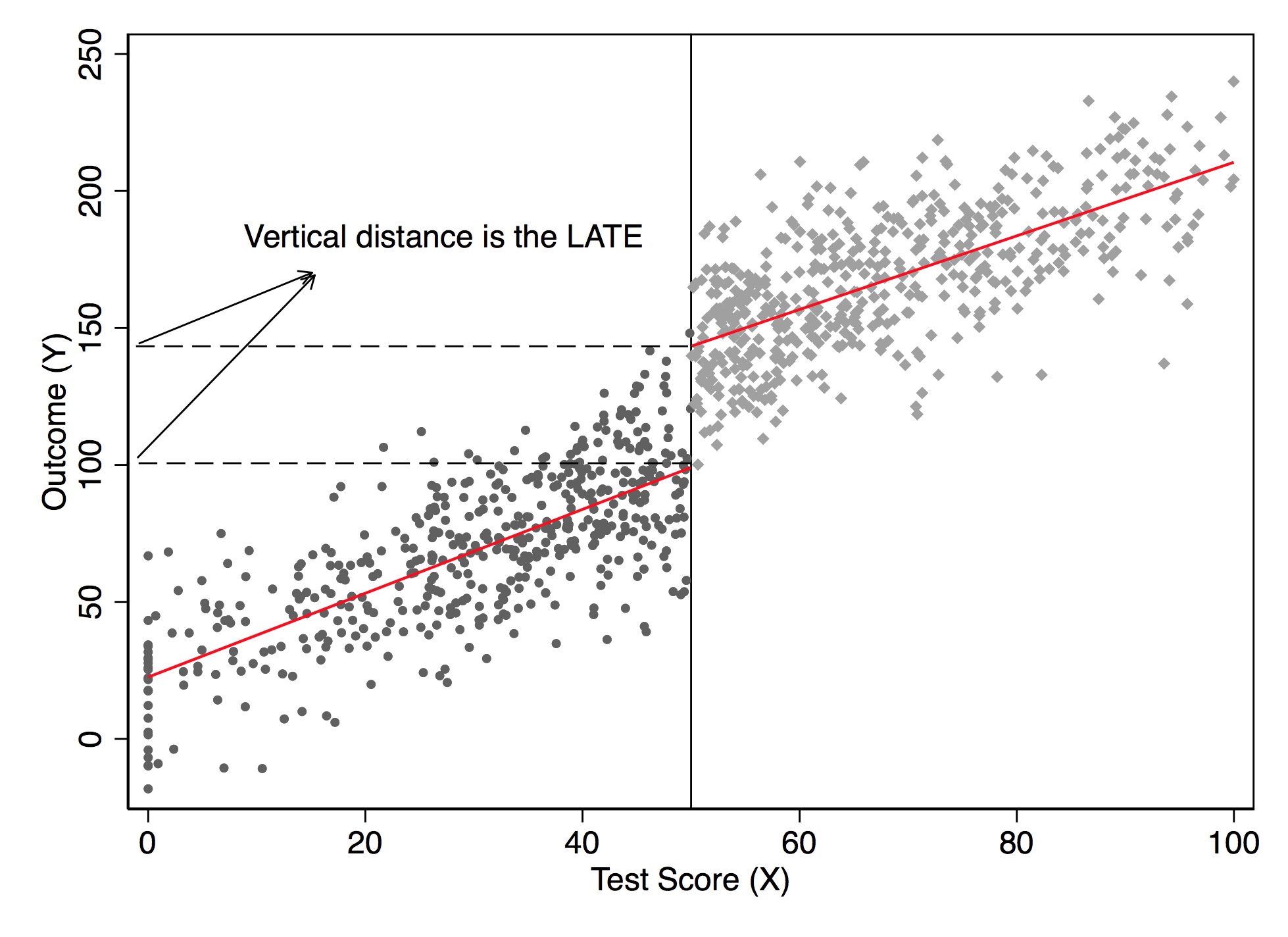

Let’s illustrate this using simulated data. Notice that while \(Y^1\) by construction had not jumped at 50 on the \(X\) running variable, \(Y\) will. Let’s look at the output in Figure 6.8. Notice the jump at the discontinuity in the outcome, which I’ve labeled the LATE, or local average treatment effect.

* Stata code attributed to Marcelo Perraillon.

gen y = 25 + 40*D + 1.5*x + rnormal(0, 20)

scatter y x if D==0, msize(vsmall) || scatter y x if D==1, msize(vsmall) legend(off) xline(50, lstyle(foreground)) || lfit y x if D ==0, color(red) || lfit y x if D ==1, color(red) ytitle("Outcome (Y)") xtitle("Test Score (X)") # simulate the discontinuity

dat <- dat %>%

mutate(

y2 = 25 + 40 * D + 1.5 * x + rnorm(n(), 0, 20)

)

# figure

ggplot(aes(x, y2, colour = factor(D)), data = dat) +

geom_point(alpha = 0.5) +

geom_vline(xintercept = 50, colour = "grey", linetype = 2) +

stat_smooth(method = "lm", se = F) +

labs(x = "Test score (X)", y = "Potential Outcome (Y)")import numpy as np

import pandas as pd

import statsmodels.api as sm

import statsmodels.formula.api as smf

from itertools import combinations

import plotnine as p

# read data

import ssl

ssl._create_default_https_context = ssl._create_unverified_context

def read_data(file):

return pd.read_stata("https://github.com/scunning1975/mixtape/raw/master/" + file)

np.random.seed(12282020)

dat = pd.DataFrame({'x': np.random.normal(50, 25, 1000)})

dat.loc[dat.x<0, 'x'] = 0

dat = dat[dat.x<100]

dat['D'] = 0

dat.loc[dat.x>50, 'D'] = 1

dat['y1'] = 25 + 0*dat.D + 1.5 * dat.x + np.random.normal(0, 20, dat.shape[0])

dat['y2'] = 25 + 40*dat.D + 1.5 * dat.x + np.random.normal(0, 20, dat.shape[0])

print('"Counterfactual Potential Outcomes')

print('"Counterfactual Potential Outcomes after Treatment')

p.ggplot(dat, p.aes(x='x', y='y2', color = 'factor(D)')) + p.geom_point(alpha = 0.5) + p.geom_vline(xintercept = 50, colour = "grey") + p.stat_smooth(method = "lm", se = 'F') + p.labs(x = "Test score (X)", y = "Potential Outcome (Y)")

I’d like to now dig into the actual regression model you would use to estimate the LATE parameter in an RDD. We will first discuss some basic modeling choices that researchers often make—some trivial, some important. This section will focus primarily on regression-based estimation.

While not necessary, it is nonetheless quite common for authors to transform the running variable \(X\) by recentering at \(c_0\): \[ Y_i=\alpha+\beta(X_i-c_0)+\delta D_i+\varepsilon_i \] This doesn’t change the interpretation of the treatment effect—only the interpretation of the intercept. Let’s use Card, Dobkin, and Maestas (2008) as an example. Medicare is triggered when a person turns 65. So recenter the running variable (age) by subtracting 65:

\[ \begin{align} Y & =\beta_0 + \beta_1 (Age-65) + \beta_2 Edu + \varepsilon \\ & =\beta_0 + \beta_1 Age - \beta_1 65 + \beta_2 Edu+ \varepsilon \\ & =(\beta_0 - \beta_1 65) + \beta_1 Age + \beta_2 Edu + \varepsilon \\ & = \alpha + \beta_1 Age + \beta_2 Edu+ \varepsilon \end{align} \]

where \(\alpha=\beta_0 + \beta_1 65\). All other coefficients, notice, have the same interpretation except for the intercept.

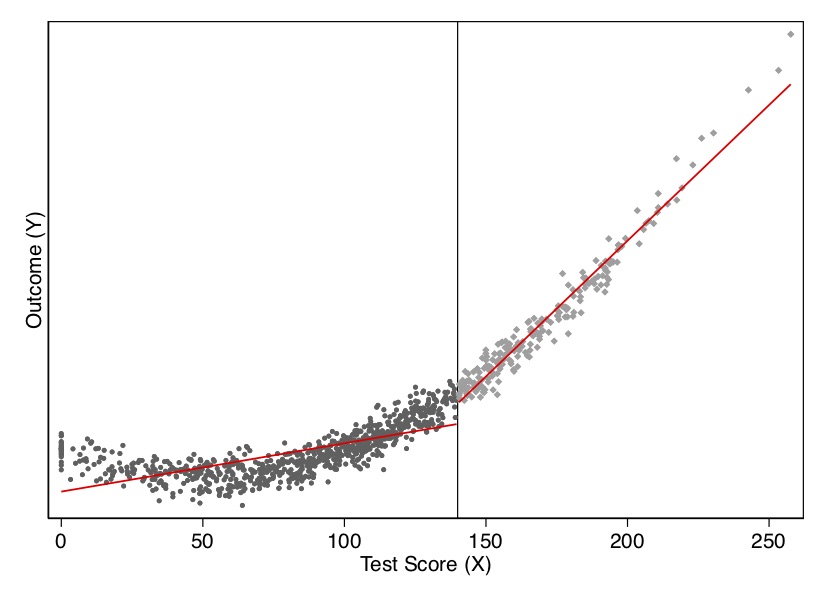

Another practical question relates to nonlinear data-generating processes. A nonlinear data-generating process could easily yield false positives if we do not handle the specification carefully. Because sometimes we are fitting local linear regressions around the cutoff, we could spuriously pick up an effect simply for no other reason than that we imposed linearity on the model. But if the underlying data-generating process is nonlinear, then it may be a spurious result due to misspecification of the model. Consider an example of this nonlinearity in Figure 6.9.

* Stata code attributed to Marcelo Perraillon.

drop y y1 x* D

set obs 1000

gen x = rnormal(100, 50)

replace x=0 if x < 0

drop if x > 280

sum x, det

* Set the cutoff at X=140. Treated if X > 140

gen D = 0

replace D = 1 if x > 140

gen x2 = x*x

gen x3 = x*x*x

gen y = 10000 + 0*D - 100*x +x2 + rnormal(0, 1000)

reg y D x

scatter y x if D==0, msize(vsmall) || scatter y x ///

if D==1, msize(vsmall) legend(off) xline(140, ///

lstyle(foreground)) ylabel(none) || lfit y x ///

if D ==0, color(red) || lfit y x if D ==1, ///

color(red) xtitle("Test Score (X)") ///

ytitle("Outcome (Y)")

* Polynomial estimation

capture drop y

gen y = 10000 + 0*D - 100*x +x2 + rnormal(0, 1000)

reg y D x x2 x3

predict yhat

scatter y x if D==0, msize(vsmall) || scatter y x ///

if D==1, msize(vsmall) legend(off) xline(140, ///

lstyle(foreground)) ylabel(none) || line yhat x ///

if D ==0, color(red) sort || line yhat x if D==1, ///

sort color(red) xtitle("Test Score (X)") ///

ytitle("Outcome (Y)") # simultate nonlinearity

dat <- tibble(

x = rnorm(1000, 100, 50)

) %>%

mutate(

x = case_when(x < 0 ~ 0, TRUE ~ x),

D = case_when(x > 140 ~ 1, TRUE ~ 0),

x2 = x*x,

x3 = x*x*x,

y3 = 10000 + 0 * D - 100 * x + x2 + rnorm(1000, 0, 1000)

) %>%

filter(x < 280)

ggplot(aes(x, y3, colour = factor(D)), data = dat) +

geom_point(alpha = 0.2) +

geom_vline(xintercept = 140, colour = "grey", linetype = 2) +

stat_smooth(method = "lm", se = F) +

labs(x = "Test score (X)", y = "Potential Outcome (Y)")

ggplot(aes(x, y3, colour = factor(D)), data = dat) +

geom_point(alpha = 0.2) +

geom_vline(xintercept = 140, colour = "grey", linetype = 2) +

stat_smooth(method = "loess", se = F) +

labs(x = "Test score (X)", y = "Potential Outcome (Y)")import numpy as np

import pandas as pd

import statsmodels.api as sm

import statsmodels.formula.api as smf

from itertools import combinations

import plotnine as p

# read data

import ssl

ssl._create_default_https_context = ssl._create_unverified_context

def read_data(file):

return pd.read_stata("https://github.com/scunning1975/mixtape/raw/master/" + file)

np.random.seed(12282020)

dat = pd.DataFrame({'x': np.random.normal(100, 50, 1000)})

dat.loc[dat.x<0, 'x'] = 0

dat['x2'] = dat['x']**2

dat['x3'] = dat['x']**3

dat['D'] = 0

dat.loc[dat.x>140, 'D'] = 1

dat['y3'] = 10000 + 0*dat.D - 100 * dat.x + dat.x2 + np.random.normal(0, 1000, 1000)

dat = dat[dat.x < 280]

# Linear Model for conditional expectation

p.ggplot(dat, p.aes(x='x', y='y3', color = 'factor(D)')) + p.geom_point(alpha = 0.2) + p.geom_vline(xintercept = 140, colour = "grey") + p.stat_smooth(method = "lm", se = 'F') + p.labs(x = "Test score (X)", y = "Potential Outcome (Y)")

# Linear Model for conditional expectation

p.ggplot(dat, p.aes(x='x', y='y3', color = 'factor(D)')) + p.geom_point(alpha = 0.2) + p.geom_vline(xintercept = 140, colour = "grey") + p.stat_smooth(method = "lowess", se = 'F') + p.labs(x = "Test score (X)", y = "Potential Outcome (Y)")

I show this both visually and with a regression. As you can see in Figure 6.9, the data-generating process was nonlinear, but when with straight lines to the left and right of the cutoff, the trends in the running variable generate a spurious discontinuity at the cutoff. This shows up in a regression as well. When we fit the model using a least squares regression controlling for the running variable, we estimate a causal effect though there isn’t one. In Table 6.1, the estimated effect of \(D\) on \(Y\) is large and highly significant, even though the true effect is zero. In this situation, we would need some way to model the nonlinearity below and above the cutoff to check whether, even given the nonlinearity, there had been a jump in the outcome at the discontinuity.

| Dependent variable | |

|---|---|

| Treatment (D) | 6580.16\(^{**}\)\(^{*}\) |

| (305.88) |

Suppose that the nonlinear relationships is \[ E\big[Y^0_i\mid X_i\big]=f(X_i) \] for some reasonably smooth function \(f(X_i)\). In that case, we’d fit the regression model: \[ Y_i=f(X_i) + \delta D_i + \eta_i \] Since \(f(X_i)\) is counterfactual for values of \(X_i>c_0\), how will we model the nonlinearity? There are two ways of approximating \(f(X_i)\). The traditional approaches let \(f(X_Be i)\) equal a \(p{th}\)-order polynomial: \[ Y_i = \alpha + \beta_1 x_i + \beta_2 x_i^2 + \dots + \beta_p x_i^p + \delta D_i + \eta_i \] Higher-order polynomials can lead to overfitting and have been found to introduce bias (Gelman and Imbens 2019). Those authors recommend using local linear regressions with linear and quadratic forms only. Another way of approximating \(f(X_i)\) is to use a nonparametric kernel, which I discuss later.

Though Gelman and Imbens (2019) warn us about higher-order polynomials, I’d like to use an example with \(p\)th-order polynomials, mainly because it’s not uncommon to see this done today. I’d also like you to know some of the history of this method and understand better what old papers were doing. We can generate this function, \(f(X_i)\), by allowing the \(X_i\) terms to differ on both sides of the cutoff by including them both individually and interacting them with \(D_i\). In that case, we have:

\[ \begin{align} E\big[Y^0_i\mid X_i\big] & =\alpha + \beta_{01} \widetilde{X}_i + \dots + \beta_{0p}\widetilde{X}_i^p \\ E\big[Y^1_i\mid X_i\big] & =\alpha + \delta + \beta_{11} \widetilde{X}_i + \dots + \beta_{1p} \widetilde{X}_i^p \end{align} \]

where \(\widetilde{X}_i\) is the recentered running variable (i.e., \(X_i - c_0\)). Centering at \(c_0\) ensures that the treatment effect at \(X_i=X_0\) is the coefficient on \(D_i\) in a regression model with interaction terms. As Lee and Lemieux (2010) note, allowing different functions on both sides of the discontinuity should be the main results in an RDD paper.

To derive a regression model, first note that the observed values must be used in place of the potential outcomes: \[ E[Y\mid X]=E[Y^0\mid X]+\Big(E[Y^1\mid X] - E[Y^0 \mid X]\Big)D \] Your regression model then is \[ Y_i= \alpha + \beta_{01}\tilde{X}_i + \dots + \beta_{0p}\tilde{X}_i^p + \delta D_i+ \beta_1^*D_i \tilde{X}_i + \dots + \beta_p^* D_i \tilde{X}_i^p + \varepsilon_i \] where \(\beta_1^* = \beta_{11} - \beta_{01}\), and \(\beta_p^* = \beta_{1p} - \beta_{0p}\). The equation we looked at earlier was just a special case of the above equation with \(\beta_1^*=\beta_p^*=0\). The treatment effect at \(c_0\) is \(\delta\). And the treatment effect at \(X_i-c_0>0\) is \(\delta + \beta_1^*c + \dots + \beta_p^* c^p\). Let’s see this in action with another simulation.

* Stata code attributed to Marcelo Perraillon.

capture drop y

gen y = 10000 + 0*D - 100*x +x2 + rnormal(0, 1000)

reg y D##c.(x x2 x3)

predict yhat

scatter y x if D==0, msize(vsmall) || scatter y x ///

if D==1, msize(vsmall) legend(off) xline(140, ///

lstyle(foreground)) ylabel(none) || line yhat x ///

if D ==0, color(red) sort || line yhat x if D==1, ///

sort color(red) xtitle("Test Score (X)") ///

ytitle("Outcome (Y)") library(stargazer)

dat <- tibble(

x = rnorm(1000, 100, 50)

) %>%

mutate(

x = case_when(x < 0 ~ 0, TRUE ~ x),

D = case_when(x > 140 ~ 1, TRUE ~ 0),

x2 = x*x,

x3 = x*x*x,

y3 = 10000 + 0 * D - 100 * x + x2 + rnorm(1000, 0, 1000)

) %>%

filter(x < 280)

regression <- lm(y3 ~ D*., data = dat)

stargazer(regression, type = "text")

ggplot(aes(x, y3, colour = factor(D)), data = dat) +

geom_point(alpha = 0.2) +

geom_vline(xintercept = 140, colour = "grey", linetype = 2) +

stat_smooth(method = "loess", se = F) +

labs(x = "Test score (X)", y = "Potential Outcome (Y)")import numpy as np

import pandas as pd

import statsmodels.api as sm

import statsmodels.formula.api as smf

from itertools import combinations

import plotnine as p

# read data

import ssl

ssl._create_default_https_context = ssl._create_unverified_context

def read_data(file):

return pd.read_stata("https://github.com/scunning1975/mixtape/raw/master/" + file)

np.random.seed(12282020)

dat = pd.DataFrame({'x': np.random.normal(100, 50, 1000)})

dat.loc[dat.x<0, 'x'] = 0

dat['x2'] = dat['x']**2

dat['x3'] = dat['x']**3

dat['D'] = 0

dat.loc[dat.x>140, 'D'] = 1

dat['y3'] = 10000 + 0*dat.D - 100 * dat.x + dat.x2 + np.random.normal(0, 1000, 1000)

dat = dat[dat.x < 280]

# Fully interacted regression

all_columns = "+".join(dat.columns.difference(["D", 'y3']))

formula = 'y3 ~ D * ({})'.format(all_columns)

regression = sm.OLS.from_formula(formula, data = dat).fit()

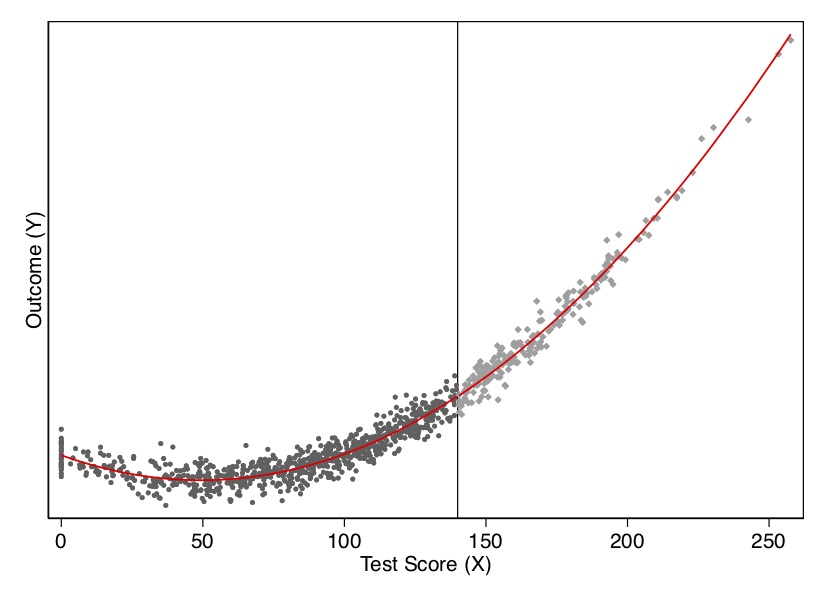

regression.summary()Let’s look at the output from this exercise in Figure 6.10 and Table Table 6.2. As you can see, once we model the data using a quadratic (the cubic ultimately was unnecessary), there is no estimated treatment effect at the cutoff. There is also no effect in our least squares regression.

| Dependent variable | |

|---|---|

| Treatment (D) | -43.24 |

| (147.29) |

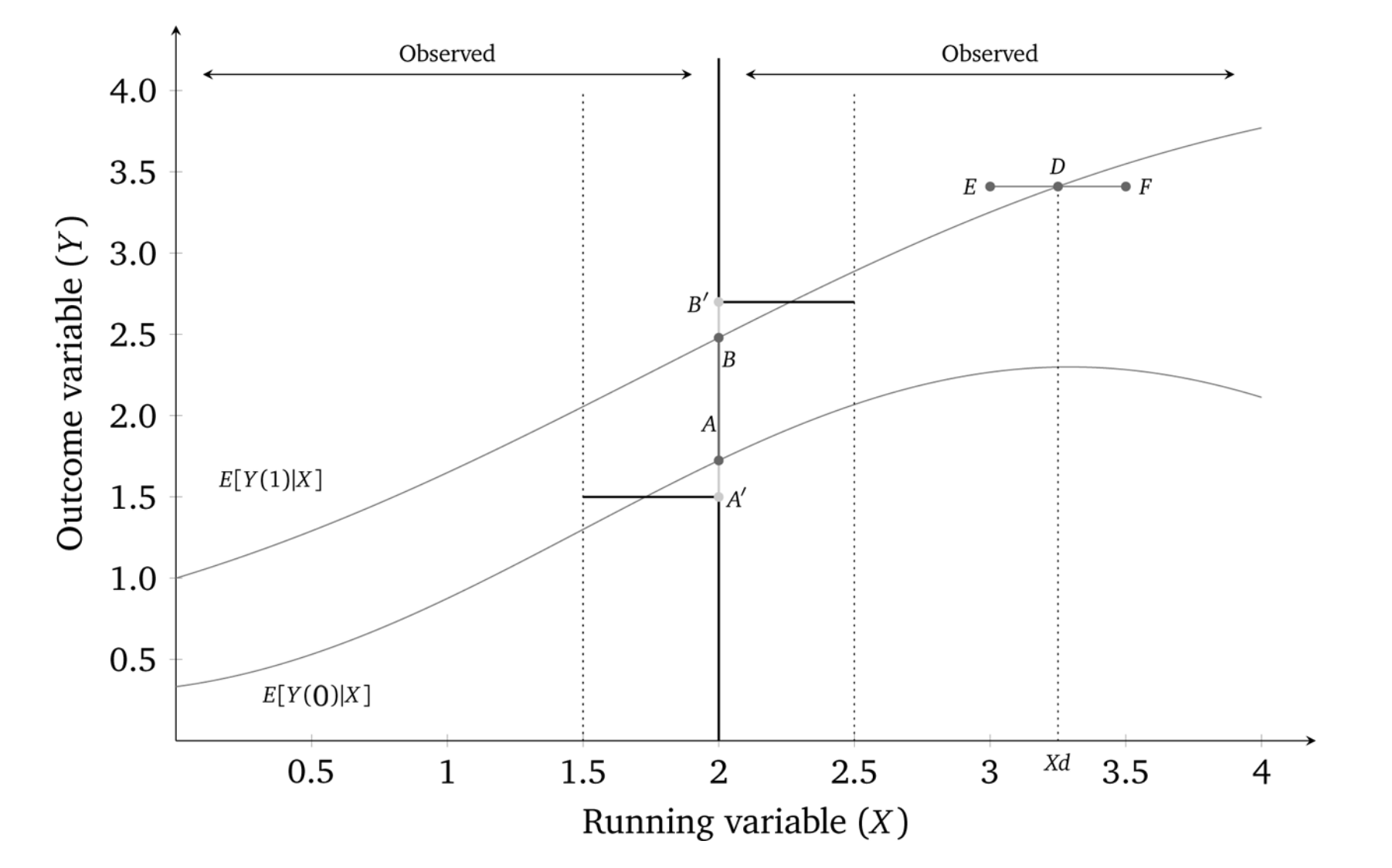

But, as we mentioned earlier, Gelman and Imbens (2019) have discouraged the use of higher-order polynomials when estimating local linear regressions. An alternative is to use kernel regression. The nonparametric kernel method has problems because you are trying to estimate regressions at the cutoff point, which can result in a boundary problem (see Figure 6.11). In this picture, the bias is caused by strong trends in expected potential outcomes throughout the running variable.

While the true effect in this diagram is \(AB\), with a certain bandwidth a rectangular kernel would estimate the effect as \(A'B'\), which is as you can see a biased estimator. There is systematic bias with the kernel method if the underlying nonlinear function, \(f(X)\), is upwards-or downwards-xsloping.

The standard solution to this problem is to run local linear nonparametric regression (Hahn, Todd, and Klaauw 2001). In the case described above, this would substantially reduce the bias. So what is that? Think of kernel regression as a weighted regression restricted to a window (hence “local”). The kernel provides the weights to that regression.7 A rectangular kernel would give the same result as taking \(E[Y]\) at a given bin on \(X\). The triangular kernel gives more importance to the observations closest to the center.

The model is some version of: \[ (\widehat{a},\widehat{b})= \text{argmin}_{a,b} \sum_{i=1}^n\Big(y_i - a -b(x_i-c_0)\Big)^2K \left(\dfrac{x_i-c_o}{h}\right)1(x_i>c_0) \] While estimating this in a given window of width \(h\) around the cutoff is straightforward, what’s not straightforward is knowing how large or small to make the bandwidth. This method is sensitive to the choice of bandwidth, but more recent work allows the researcher to estimate optimal bandwidths (G. Imbens and Kalyanaraman 2011; Calonico, Cattaneo, and Titiunik 2014). These may even allow for bandwidths to vary left and right of the cutoff.

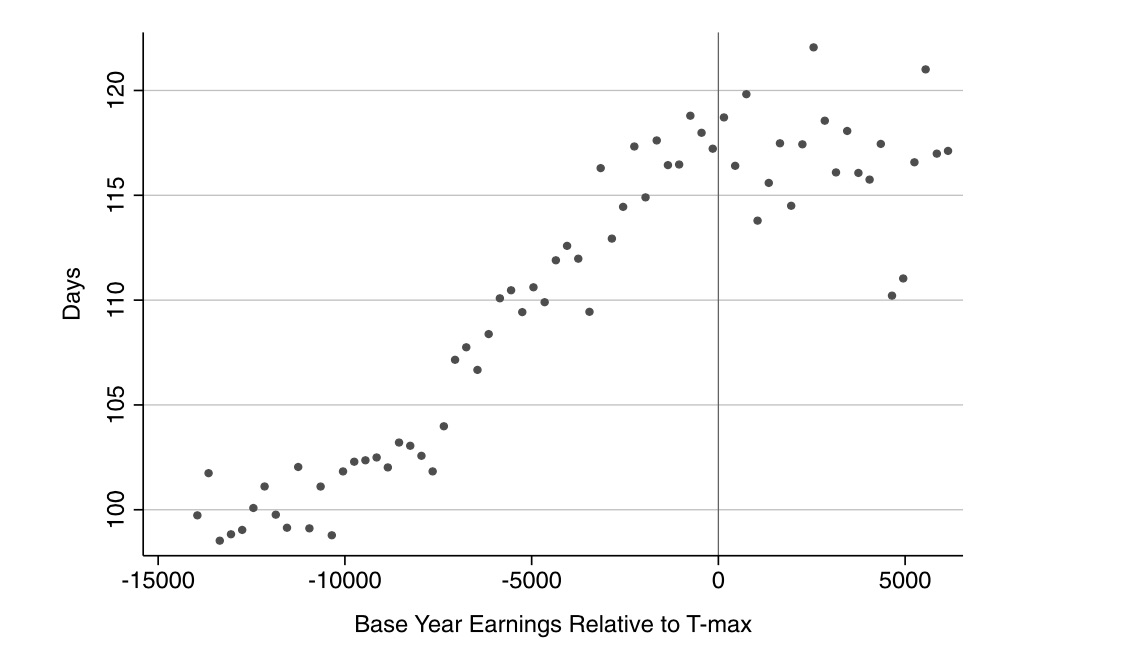

Card, Dobkin, and Maestas (2008) is an example of a sharp RDD, because it focuses on the provision of universal health-care insurance for the elderly—Medicare at age 65. What makes this a policy-relevant question? Universal insurance has become highly relevant because of the debates surrounding the Affordable Care Act, as well as several Democratic senators supporting Medicare for All. But it is also important for its sheer size. In 2014, Medicare was 14% of the federal budget at $505 billion.

Approximately 20% of non-elderly adults in the United States lacked insurance in 2005. Most were from lower-income families, and nearly half were African American or Hispanic. Many analysts have argued that unequal insurance coverage contributes to disparities in health-care utilization and health outcomes across socioeconomic status. But, even among the policies, there is heterogeneity in the form of different copays, deductibles, and other features that affect use. Evidence that better insurance causes better health outcomes is limited because health insurance suffers from deep selection bias. Both supply and demand for insurance depend on health status, confounding observational comparisons between people with different insurance characteristics.

The situation for elderly looks very different, though. Less than 1% of the elderly population is uninsured. Most have fee-for-service Medicare coverage. And that transition to Medicare occurs sharply at age 65—the threshold for Medicare eligibility.

The authors estimate a reduced form model measuring the causal effect of health insurance status on health-care usage: \[ y_{ija} = X_{ija} \alpha + f_k(\alpha ; \beta ) + \sum_k C_{ija}^k \delta^k + u_{ija} \] where \(i\) indexes individuals, \(j\) indexes a socioeconomic group, \(a\) indexes age, \(u_{ija}\) indexes the unobserved error, \(y_{ija}\) health care usage, \(X_{ija}\) a set of covariates (e.g., gender and region), \(f_j(\alpha ; \beta )\) a smooth function representing the age profile of outcome \(y\) for group \(j\), and \(C_{ija}^k\) \((k=1,2,\dots ,K)\) are characteristics of the insurance coverage held by the individual such as copayment rates. The problem with estimating this model, though, is that insurance coverage is endogenous: \(cov(u,C) \neq 0\). So the authors use as identification of the age threshold for Medicare eligibility at 65, which they argue is credibly exogenous variation in insurance status.

Suppose health insurance coverage can be summarized by two dummy variables: \(C_{ija}^1\) (any coverage) and \(C_{ija}^2\) (generous insurance). Card, Dobkin, and Maestas (2008) estimate the following linear probability models:

\[ \begin{align} C_{ija}^1 & =X_{ija}\beta_j^1 + g_j^1(a) + D_a \pi_j^1 + v_{ija}^1 \\ C_{ija}^2 & =X_{ija} \beta_j^2 + g_j^2(a) + D_a \pi_j^2 + v_{ija}^2 \end{align} \]

where \(\beta_j^1\) and \(\beta_j^2\) are group-specific coefficients, \(g_j^1(a)\) and \(g_j^2(a)\) are smooth age profiles for group \(j\), and \(D_a\) is a dummy if the respondent is equal to or over age 65. Recall the reduced form model:

\[ \begin{align} y_{ija} = X_{ija} \alpha + f_k(\alpha ; \beta ) + \sum_k C_{ija}^k \delta^k + u_{ija} \end{align} \]

Combining the \(C_{ija}\) equations, and rewriting the reduced form model, we get:

\[ \begin{align} y_{ija}=X_{ija}\Big(\alpha_j+\beta_j^1\delta_j^1+ \beta_j^2 \delta_j^2\Big)h_j(a)+D_a\pi_j^y+ v_{ija}^y \end{align} \]

where \(h(a)=f_j(a) + \delta^1 g_j^1(a) + \delta^2 g_j^2(a)\) is the reduced form age profile for group \(j\), \(\pi_j^y=\pi_j^1\delta^1 + \pi_j^2 \delta^2\) and \(v_{ija}^y=u_{ija} + v_{ija}^1 \delta^1 + v_{ija}^2 \delta^2\) is the error term. Assuming that the profiles \(f_j(a)\), \(g_j(a)\), and \(g_j^2(a)\) are continuous at age 65 (i.e., the continuity assumption necessary for identification), then any discontinuity in \(y\) is due to insurance. The magnitudes will depend on the size of the insurance changes at age 65 (\(\pi_j^1\) and \(\pi_j^2\)) and on the associated causal effects (\(\delta^1\) and \(\delta^2\)).

For some basic health-care services, such as routine doctor visits, it may be that the only thing that matters is insurance. But, in those situations, the implied discontinuity in \(Y\) at age 65 for group \(j\) will be proportional to the change in insurance status experienced by that group. For more expensive or elective services, the generosity of the coverage may matter—for instance, if patients are unwilling to cover the required copay or if the managed care program won’t cover the service. This creates a potential identification problem in interpreting the discontinuity in \(y\) for any one group. Since \(\pi_j^y\) is a linear combination of the discontinuities in coverage and generosity, \(\delta^1\) and \(\delta^2\) can be estimated by a regression across groups: \[ \pi_j^y=\delta^0+\delta^1\pi_j^1+\delta_j^2\pi_j^2+e_j \] where \(e_j\) is an error term reflecting a combination of the sampling errors in \(\pi_j^y\), \(\pi_j^1\) and, \(\pi_j^2\).

Card, Dobkin, and Maestas (2008) use a couple of different data sets—one a standard survey and the other administrative records from hospitals in three states. First, they use the 1992–2003 National Health Interview Survey (NHIS). The NHIS reports respondents’ birth year, birth month, and calendar quarter of the interview. Authors used this to construct an estimate of age in quarters at date of interview. A person who reaches 65 in the interview quarter is coded as age 65 and 0 quarters. Assuming a uniform distribution of interview dates, one-half of these people will be 0–6 weeks younger than 65 and one-half will be 0–6 weeks older. Analysis is limited to people between 55 and 75. The final sample has 160,821 observations.

The second data set is hospital discharge records for California, Florida, and New York. These records represent a complete census of discharges from all hospitals in the three states except for federally regulated institutions. The data files include information on age in months at the time of admission. Their sample selection criteria is to drop records for people admitted as transfers from other institutions and limit people between 60 and 70 years of age at admission. Sample sizes are 4,017,325 (California), 2,793,547 (Florida), and 3,121,721 (New York).

Some institutional details about the Medicare program may be helpful. Medicare is available to people who are at least 65 and have worked forty quarters or more in covered employment or have a spouse who did. Coverage is available to younger people with severe kidney disease and recipients of Social Security Disability Insurance. Eligible individuals can obtain Medicare hospital insurance (Part A) free of charge and medical insurance (Part B) for a modest monthly premium. Individuals receive notice of their impending eligibility for Medicare shortly before they turn 65 and are informed they have to enroll in it and choose whether to accept Part B coverage. Coverage begins on the first day of the month in which they turn 65.

There are five insurance-related variables: probability of Medicare coverage, any health insurance coverage, private coverage, two or more forms of coverage, and individual’s primary health insurance is managed care. Data are drawn from the 1999–2003 NHIS, and for each characteristic, authors show the incidence rate at age 63–64 and the change at age 65 based on a version of the \(C_K\) equations that include a quadratic in age, fully interacted with a post-65 dummy as well as controls for gender, education, race/ethnicity, region, and sample year. Alternative specifications were also used, such as a parametric model fit to a narrower age window (age 63–67) and a local linear regression specification using a chosen bandwidth. Both show similar estimates of the change at age 65.

| On Medicare | Any insurance | Private coverage | 2\(+\) forms coverage | Managed care | |

|---|---|---|---|---|---|

| Overall sample | 59.7 | 9.5 | \(-2.9\) | 44.1 | \(-28.4\) |

| (4.1) | (0.6) | (1.1) | (2.8) | (2.1) | |

| White non-Hispanic | |||||

| High school dropout | 58.5 | 13.0 | \(-6.2\) | 44.5 | \(-25.0\) |

| (4.6) | (2.7) | (3.3) | (4.0) | (4.5) | |

| High school graduate | 64.7 | 7.6 | \(-1.9\) | 51.8 | \(-30.3\) |

| (5.0) | (0.7) | (1.6) | (3.8) | (2.6) | |

| Some college | 68.4 | 4.4 | \(-2.3\) | 55.1 | \(-40.1\) |

| (4.7) | (0.5) | (1.8) | (4.0) | (2.6) | |

| Minority | |||||

| High school dropout | 44.5 | 21.5 | \(-1.2\) | 19.4 | \(-8.3\) |

| (3.1) | (2.1) | (2.5) | (1.9) | (3.1) | |

| High school graduate | 44.6 | 8.9 | \(-5.8\) | 23.4 | \(-15.4\) |

| (4.7) | (2.8) | (5.1) | (4.8) | (3.5) | |

| Some college | 52.1 | 5.8 | \(-5.4\) | 38.4 | \(-22.3\) |

| (4.9) | (2.0) | (4.3) | (3.8) | (7.2) | |

| Classified by ethnicity only | |||||

| White non-Hispanic | 65.2 | 7.3 | \(-2.8\) | 51.9 | \(-33.6\) |

| (4.6) | (0.5) | (1.4) | (3.5) | (2.3) | |

| Black non-Hispanic | 48.5 | 11.9 | \(-4.2\) | 27.8 | \(-13.5\) |

| (3.6) | (2.0) | (2.8) | (3.7) | (3.7) | |

| Hispanic | 44.4 | 17.3 | \(-2.0\) | 21.7 | \(-12.1\) |

| (3.7) | (3.0) | (1.7) | (2.1) | (3.7) |

The authors present their findings in Table 6.3. The way that you read this table is each cell shows the average treatment effect for the 65-year-old population that complies with the treatment. We can see, not surprisingly, that the effect of receiving Medicare is to cause a very large increase of being on Medicare, as well as reducing coverage on private and managed care.

Formal identification in an RDD relating to some outcome (insurance coverage) to a treatment (Medicare age-eligibility) that itself depends on some running variable, age, relies on the continuity assumptions that we discussed earlier. That is, we must assume that the conditional expectation functions for both potential outcomes is continuous at age\(=65\). This means that both \(E[Y^0\mid a]\) and \(E[Y^1\mid a]\) are continuous through age of 65. If that assumption is plausible, then the average treatment effect at age 65 is identified as: \[ \lim_{65 \leftarrow a}E\big[y^1\mid a\big] - \lim_{a \rightarrow 65}E\big[y^0\mid a\big] \] The continuity assumption requires that all other factors, observed and unobserved, that affect insurance coverage are trending smoothly at the cutoff, in other words. But what else changes at age 65 other than Medicare eligibility? Employment changes. Typically, 65 is the traditional age when people retire from the labor force. Any abrupt change in employment could lead to differences in health-care utilization if nonworkers have more time to visit doctors.

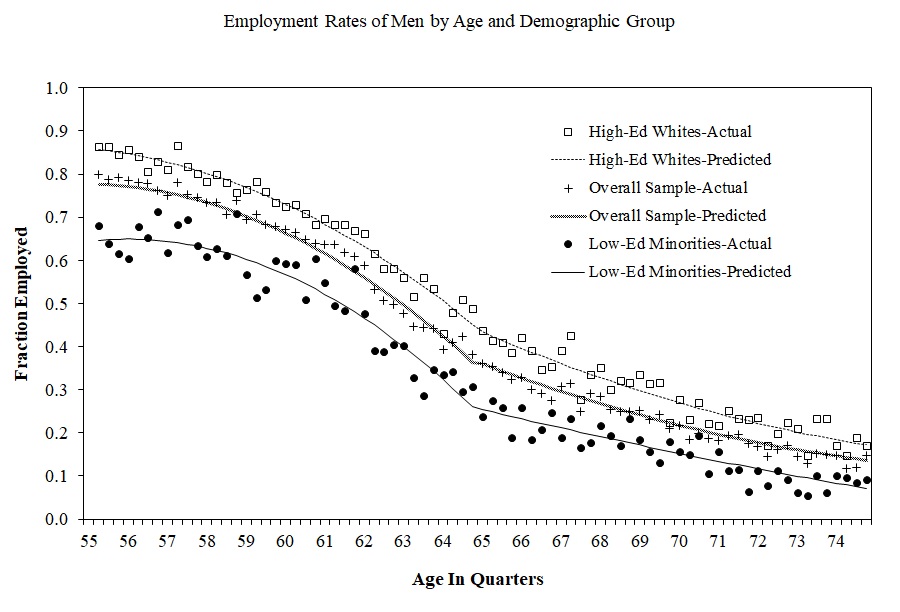

The authors need to, therefore, investigate this possible confounder. They do this by testing for any potential discontinuities at age 65 for confounding variables using a third data set—the March CPS 1996–2004. And they ultimately find no evidence for discontinuities in employment at age 65 (Figure 6.12).

Next the authors investigate the impact that Medicare had on access to care and utilization using the NHIS data. Since 1997, NHIS has asked four questions. They are:

“During the past 12 months has medical care been delayed for this person because of worry about the cost?”

“During the past 12 months was there any time when this person needed medical care but did not get it because this person could not afford it?”

“Did the individual have at least one doctor visit in the past year?”

“Did the individual have one or more overnight hospital stays in the past year?”

Estimates from this analysis are presented in Table 6.4. Each cell measures the average treatment effect for the complier population

at the discontinuity. Standard errors are in parentheses. There are a few encouraging findings from this table. First, the share of the relevant population who delayed care the previous year fell 1.8 points, and similar for the share who did not get care at all in the previous year. The share who saw a doctor went up slightly, as did the share who stayed at a hospital. These are not very large effects in magnitude, it is important to note, but they are relatively precisely estimated. Note that these effects differed considerably by race and ethnicity as well as education.

| Delayed last year | Did not get care last year | Saw doctor last year | Hospital stay last year | |

|---|---|---|---|---|

| Overall sample | \(-1.8\) | \(-1.3\) | 1.3 | 1.2 |

| (0.4) | (0.3) | (0.7) | (0.4) | |

| White non-Hispanic | ||||

| High school dropout | \(-1.5\) | \(-0.2\) | 3.1 | 1.6 |

| (1.1) | (1.0) | (1.3) | (1.3) | |

| High school graduate | 0.3 | \(-1.3\) | \(-0.4\) | 0.3 |

| (2.8) | (2.8) | (1.5) | (0.7) | |

| Some college | \(-1.5\) | \(-1.4\) | 0.0 | 2.1 |

| (0.4) | (0.3) | (1.3) | (0.7) | |

| Minority | ||||

| High school dropout | \(-5.3\) | \(-4.2\) | 5.0 | 0.0 |

| (1.0) | (0.9) | (2.2) | (1.4) | |

| High school graduate | \(-3.8\) | 1.5 | 1.9 | 1.8 |

| (3.2) | (3.7) | (2.7) | (1.4) | |

| Some college | \(-0.6\) | \(-0.2\) | 3.7 | 0.7 |

| (1.1) | (0.8) | (3.9) | (2.0) | |

| Classified by ethnicity only | ||||

| White non-Hispanic | \(-1.6\) | \(-1.2\) | 0.6 | 1.3 |

| (0.4) | (0.3) | (0.8) | (0.5) | |

| Black non-Hispanic | \(-1.9\) | \(-0.3\) | 3.6 | 0.5 |

| (1.1) | (1.1) | (1.9) | (1.1) | |

| Hispanic | \(-4.9\) | \(-3.8\) | 8.2 | 11.8 |

| (0.8) | (0.7) | (0.8) | (1.6) |

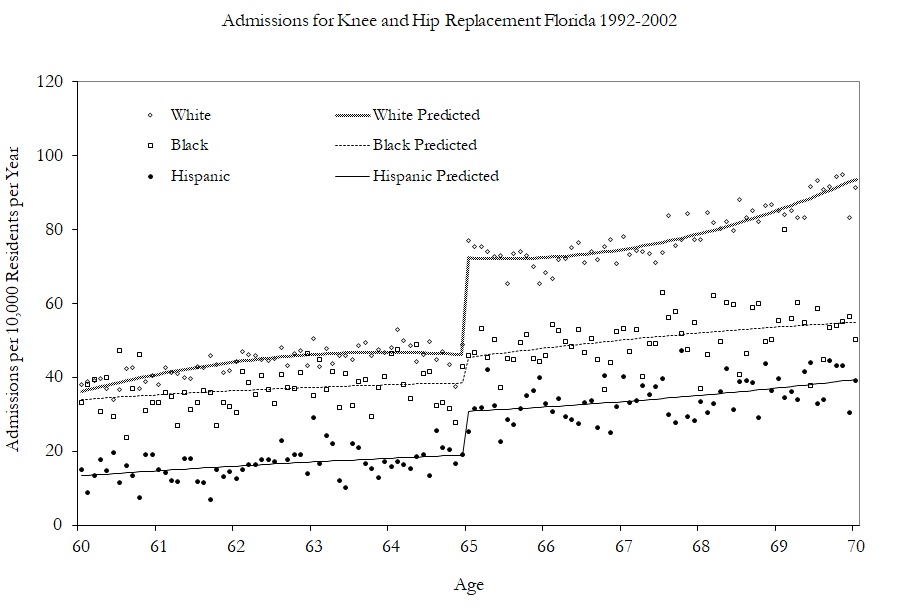

Having shown modest effects on care and utilization, the authors turn to examining the kinds of care they received by examining specific changes in hospitalizations. Figure 6.13 shows the effect of Medicare on hip and knee replacements by race. The effects are largest for whites.

In conclusion, the authors find that universal health-care coverage for the elderly increases care and utilization as well as coverage. In a subsequent study (Card, Dobkin, and Maestas 2009), the authors examined the impact of Medicare on mortality and found slight decreases in mortality rates (see Table 6.5).

| Quadratic no controls | \(-1.1\) | \(-1.0\) | \(-1.1\) | \(-1.2\) | \(-1.0\) | |

| (0.2) | (0.2) | (0.3) | (0.3) | (0.4) | (0.4) | |

| Quadratic plus controls | \(-1.0\) | \(-0.8\) | \(-0.9\) | \(-0.9\) | \(-0.8\) | \(-0.7\) |

| 0.2) | (0.2) | (0.3) | (0.3) | (0.3) | (0.4) | |

| Cubic plus controls | \(-0.7\) | \(-0.7\) | \(-0.6\) | \(-0.9\) | \(-0.9\) | \(-0.4\) |

| (0.3) | (0.2) | (0.4) | (0.4) | (0.5) | (0.5) | |

| Local OLS with ad hoc bandwidths | \(-0.8\) | \(-0.8\) | \(-0.8\) | \(-0.9\) | \(-1.1\) | \(-0.8\) |

| (0.2) | (0.2) | (0.2) | (0.2) | (0.3) | (0.3) |

Dependent variable for death within interval shown in the column heading. Regression estimates at the discontinuity of age 65 for flexible regression models. Standard errors in parenthesis.

As we’ve mentioned, it’s standard practice in the RDD to estimate causal effects using local polynomial regressions. In its simplest form, this amounts to nothing more complicated than fitting a linear specification separately on each side of the cutoff using a least squares regression. But when this is done, you are using only the observations within some pre-specified window (hence “local”). As the true conditional expectation function is probably not linear at this window, the resulting estimator likely suffers from specification bias. But if you can get the window narrow enough, then the bias of the estimator is probably small relative to its standard deviation.

But what if the window cannot be narrowed enough? This can happen if the running variable only takes on a few values, or if the gap between values closest to the cutoff are large. The result could be you simply do not have enough observations close to the cutoff for the local polynomial regression. This also can lead to the heteroskedasticity-robust confidence intervals to undercover the average causal effect because it is not centered. And here’s the really bad news—this probably is happening a lot in practice.

In a widely cited and very influential study, Lee and Card (2008) suggested that researchers should cluster their standard errors by the running variable. This advice has since become common practice in the empirical literature. Lee and Lemieux (2010), in a survey article on proper RDD methodology, recommend this practice, just to name one example. But in a recent study, Kolesár and Rothe (2018) provide extensive theoretical and simulation-based evidence that clustering on the running variable is perhaps one of the worst approaches you could take. In fact, clustering on the running variable can actually be substantially worse than heteroskedastic-robust standard errors.

As an alternative to clustering and robust standard errors, the authors propose two alternative confidence intervals that have guaranteed coverage properties under various restrictions on the conditional expectation function. Both confidence intervals are “honest,” which means they achieve correct coverage uniformly over all conditional expectation functions in large samples. These confidence intervals are currently unavailable in Stata as of the time of this writing, but they can be implemented in R with the RDHonest package.8 R users are encouraged to use these confidence intervals. Stata users are encouraged to switch (grudgingly) to R so as to use these confidence intervals. Barring that, Stata users should use the heteroskedastic robust standard errors. But whatever you do, don’t cluster on the running variable, as that is nearly an unambiguously bad idea.

A separate approach may be to use randomization inference. As we noted, Hahn, Todd, and Klaauw (2001) emphasized that the conditional expected potential outcomes must be continuous across the cutoff for a regression discontinuity design to identify the local average treatment effect. But Cattaneo, Frandsen, and Titiunik (2015) suggest an alternative assumption which has implications for inference. They ask us to consider that perhaps around the cutoff, in a short enough window, the treatment was assigned to units randomly. It was effectively a coin flip which side of the cutoff someone would be for a small enough window around the cutoff. Assuming there exists a neighborhood around the cutoff where this randomization-type condition holds, then this assumption may be viewed as an approximation of a randomized experiment around the cutoff. Assuming this is plausible, we can proceed as if only those observations closest to the discontinuity were randomly assigned, which leads naturally to randomization inference as a methodology for conducting exact or approximate p-values.

In the sharp RDD, treatment was determined when \(X_i \geq c_0\). But that kind of deterministic assignment does not always happen. Sometimes there is a discontinuity, but it’s not entirely deterministic, though it nonetheless is associated with a discontinuity in treatment assignment. When there is an increase in the probability of treatment assignment, we have a fuzzy RDD. The earlier paper by Hoekstra (2009) had this feature, as did Angrist and Lavy (1999). The formal definition of a probabilistic treatment assignment is \[ \lim_{X_i\rightarrow{c_0}} \Pr\big(D_i=1\mid X_i=c_0\big) \ne \lim_{c_0 \leftarrow X_i} \Pr\big(D_i=1\mid X_i=c_0\big) \]

In other words, the conditional probability is discontinuous as \(X\) approaches \(c_0\) in the limit. A visualization of this is presented from Guido W. Imbens and Lemieux (2008) in Figure 6.14.

The identifying assumptions are the same under fuzzy designs as they are under sharp designs: they are the continuity assumptions. For identification, we must assume that the conditional expectation of the potential outcomes (e.g., \(E[Y^0|X<c_0]\)) is changing smoothly through \(c_0\). What changes at \(c_0\) is the treatment assignment probability. An illustration of this identifying assumption is in Figure 6.15.

Estimating some average treatment effect under a fuzzy RDD is very similar to how we estimate a local average treatment effect with instrumental variables. I will cover instrumental variables in more detail later in the book, but for now let me tell you about estimation under fuzzy designs using IV. One can estimate several ways. One simple way is a type of Wald estimator, where you estimate some causal effect as the ratio of a reduced form difference in mean outcomes around the cutoff and a reduced form difference in mean treatment assignment around the cutoff. \[ \delta_{\text{Fuzzy RDD}} = \dfrac{\lim_{X \rightarrow c_0} E\big[Y\mid X = c_0\big]-\lim_{X_0 \leftarrow X} E\big[Y\mid X=c_0\big]}{\lim_{X \rightarrow c_0} E\big[D\mid X=c_0\big]-\lim_{X_0 \leftarrow X} E\big[D\mid X=c_0\big]} \] The assumptions for identification here are the same as with any instrumental variables design: all the caveats about exclusion restrictions, monotonicity, SUTVA, and the strength of the first stage.9

But one can also estimate the effect using a two-stage least squares model or similar appropriate model such as limited-information maximum likelihood. Recall that there are now two events: the first event is when the running variable exceeds the cutoff, and the second event is when a unit is placed in the treatment. Let \(Z_i\) be an indicator for when \(X\) exceeds \(c_0\). One can use both \(Z_i\) and the interaction terms as instruments for the treatment \(D_i\). If one uses only \(Z_i\) as an instrumental variable, then it is a “just identified” model, which usually has good finite sample properties.

Let’s look at a few of the regressions that are involved in this instrumental variables approach. There are three possible regressions: the first stage, the reduced form, and the second stage. Let’s look at them in order. In the just identified case (meaning only one instrument for one endogenous variable), the first stage would be: \[ D_i = \gamma_0 + \gamma_1X_i+\gamma_2X_i^2 + \dots + \gamma_pX_i^p + \pi{Z}_i + \zeta_{1i} \] where \(\pi\) is the causal effect of \(Z_i\) on the conditional probability of treatment. The fitted values from this regression would then be used in a second stage. We can also use both \(Z_i\) and the interaction terms as instruments for \(D_i\). If we used \(Z_i\) and all its interactions, the estimated first stage would be: \[ D_i= \gamma_{00} + \gamma_{01}\tilde{X}_i + \gamma_{02}\tilde{X}_i^2 + \dots + \gamma_{0p}\tilde{X}_i^p + \pi Z_i + \gamma_1^*\tilde{X}_i Z_i + \gamma_2^* \tilde{X}_i Z_i + \dots + \gamma_p^*Z_i + \zeta_{1i} \] We would also construct analogous first stages for \(\tilde{X}_iD_i, \dots, \tilde{X}_i^pD_i\).

If we wanted to forgo estimating the full IV model, we might estimate the reduced form only. You’d be surprised how many applied people prefer to simply report the reduced form and not the fully specified instrumental variables model. If you read Hoekstra (2009), for instance, he favored presenting the reduced form—that second figure, in fact, was a picture of the reduced form. The reduced form would regress the outcome \(Y\) onto the instrument and the running variable. The form of this fuzzy RDD reduced form is: \[ Y_i = \mu + \kappa_1X_i + \kappa_2X_i^2 + \dots + \kappa_pX_i^p + \delta \pi Z_i + \zeta_{2i} \] As in the sharp RDD case, one can allow the smooth function to be different on both sides of the discontinuity by interacting \(Z_i\) with the running variable. The reduced form for this regression is:

\[ \begin{align} Y_i & =\mu + \kappa_{01}X_i\tilde{X}_i + \kappa_{02}X_i{}\tilde{X}_i^2 + \dots + \kappa_{0p}X_i{}\tilde{X}_i^p \\ & + \delta \pi Z_i + \kappa_{11}\tilde{X}_iZ_i + \kappa_{12} \tilde{X}_i Z_i + \dots + \kappa_{1p}\tilde{X}_iZ_i + \zeta_{1i} \end{align} \]

But let’s say you wanted to present the estimated effect of the treatment on some outcome. That requires estimating a first stage, using fitted values from that regression, and then estimating a second stage on those fitted values. This, and only this, will identify the causal effect of the treatment on the outcome of interest. The reduced form only estimates the causal effect of the instrument on the outcome. The second-stage model with interaction terms would be the same as before:

\[ \begin{align} Y_i & =\alpha + \beta_{01}\tilde{x}_i + \beta_{02}\tilde{x}_i^2 + \dots + \beta_{0p}\tilde{x}_i^p \\ & + \delta \widehat{D_i} + \beta_1^*\widehat{D_i}\tilde{x}_i + \beta_2^*\widehat{D_i}\tilde{x}_i^2 + \dots + \beta_p^*\widehat{D_i}\tilde{x}_i^p + \eta_i \end{align} \]

Where \(\tilde{x}\) are now not only normalized with respect to \(c_0\) but are also fitted values obtained from the first-stage regressions.

As Hahn, Todd, and Klaauw (2001) point out, one needs the same assumptions for identification as one needs with IV. As with other binary instrumental variables, the fuzzy RDD is estimating the local average treatment effect (LATE) (Guideo W. Imbens and Angrist 1994), which is the average treatment effect for the compliers. In RDD, the compliers are those whose treatment status changed as we moved the value of \(x_i\) from just to the left of \(c_0\) to just to the right of \(c_0\).

The requirement for RDD to estimate a causal effect are the continuity assumptions. That is, the expected potential outcomes change smoothly as a function of the running variable through the cutoff. In words, this means that the only thing that causes the outcome to change abruptly at \(c_0\) is the treatment. But, this can be violated in practice if any of the following is true:

The assignment rule is known in advance.

Agents are interested in adjusting.

Agents have time to adjust.

The cutoff is endogenous to factors that independently cause potential outcomes to shift.

There is nonrandom heaping along the running variable.

Examples include retaking an exam, self-reporting income, and so on. But some other unobservable characteristic change could happen at the threshold, and this has a direct effect on the outcome. In other words, the cutoff is endogenous. An example would be age thresholds used for policy, such as when a person turns 18 years old and faces more severe penalties for crime. This age threshold triggers the treatment (i.e., higher penalties for crime), but is also correlated with variables that affect the outcomes, such as graduating from high school and voting rights. Let’s tackle these problems separately.

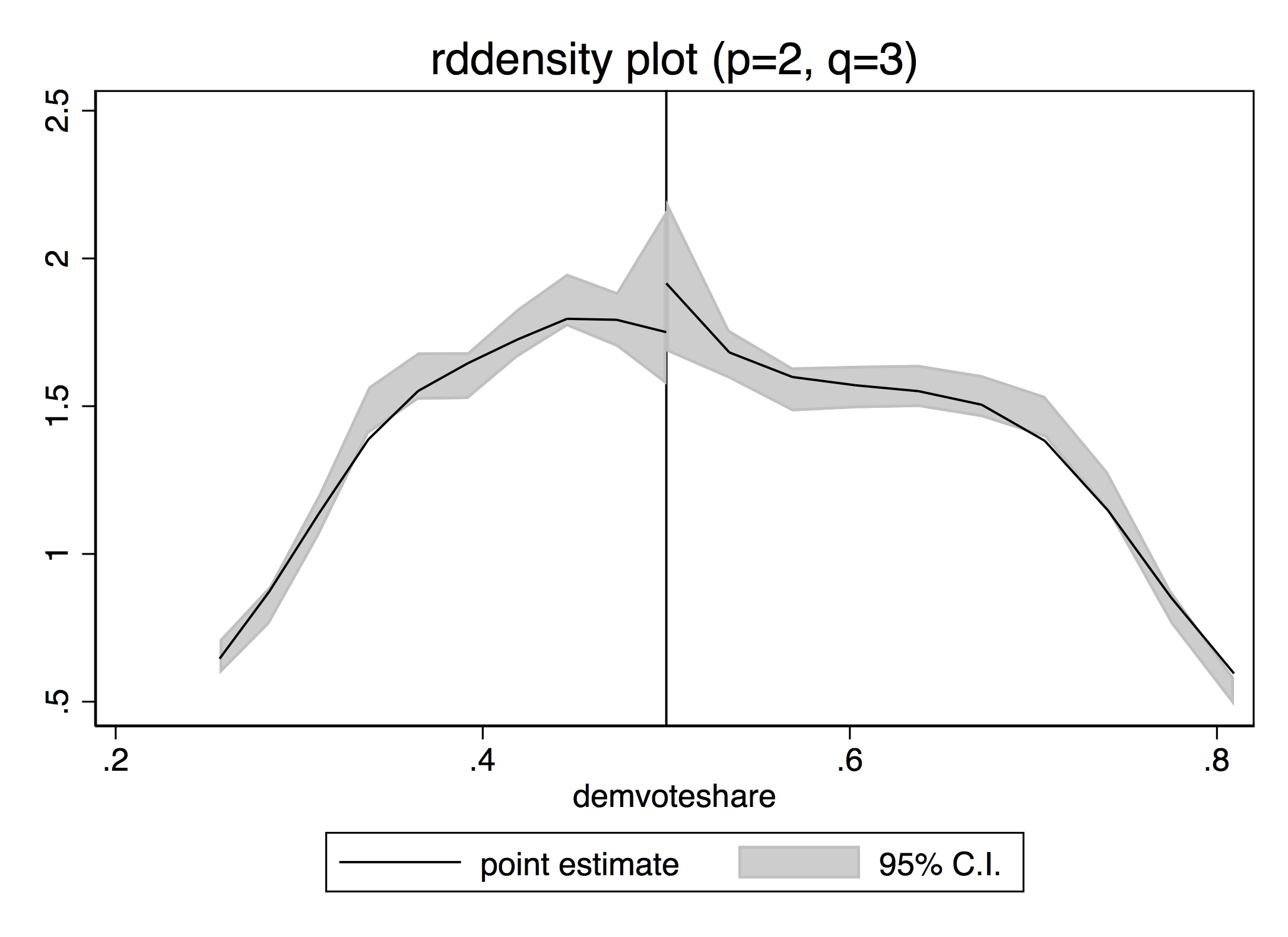

Because of these challenges to identification, a lot of work by econometricians and applied microeconomists has gone toward trying to figure out solutions to these problems. The most influential is a density test by Justin McCrary, now called the McCrary density test (McCrary 2008). The McCrary density test is used to check whether units are sorting on the running variable. Imagine that there are two rooms with patients in line for some life-saving treatment. Patients in room A will receive the life-saving treatment, and patients in room B will knowingly receive nothing. What would you do if you were in room B? Like me, you’d probably stand up, open the door, and walk across the hall to room A. There are natural incentives for the people in room B to get into room A, and the only thing that would keep people in room B from sorting into room A is if doing so were impossible.

But, let’s imagine that the people in room B had successfully sorted themselves into room A. What would that look like to an outsider? If they were successful, then room A would have more patients than room B. In fact, in the extreme, room A is crowded and room B is empty. This is the heart of the McCrary density test, and when we see such things at the cutoff, we have some suggestive evidence that people are sorting on the running variable. This is sometimes called manipulation.

Remember earlier when I said we should think of continuity as the null because nature doesn’t make jumps? If you see a turtle on a fencepost, it probably didn’t get there itself. Well, the same goes for the density. If the null is a continuous density through the cutoff, then bunching in the density at the cutoff is a sign that someone is moving over to the cutoff—probably to take advantage of the rewards that await there. Sorting on the sorting variable is a testable prediction under the null of a continuous density. Assuming a continuous distribution of units, sorting on the running variable means that units are moving just on the other side of the cutoff. Formally, if we assume a desirable treatment \(D\) and an assignment rule \(X\geq c_0\), then we expect individuals will sort into \(D\) by choosing \(X\) such that \(X\geq c_0\)—so long as they’re able. If they do, then it could imply selection bias insofar as their sorting is a function of potential outcomes.

The kind of test needed to investigate whether manipulation is occurring is a test that checks whether there is bunching of units at the cutoff. In other words, we need a density test. McCrary (2008) suggests a formal test where under the null, the density should be continuous at the cutoff point. Under the alternative hypothesis, the density should increase at the kink.10 I’ve always liked this test because it’s a really simple statistical test based on a theory that human beings are optimizing under constraints. And if they are optimizing, that makes for testable predictions—like a discontinuous jump in the density at the cutoff. Statistics built on behavioral theory can take us further.

To implement the McCrary density test, partition the assignment variable into bins and calculate frequencies (i.e., the number of observations) in each bin. Treat the frequency counts as the dependent variable in a local linear regression. If you can estimate the conditional expectations, then you have the data on the running variable, so in principle you can always do a density test. I recommend the package rddensity,11 which you can install for R as well.12 These packages are based on Cattaneo, Jansson, and Ma (2019), which is based on local polynomial regressions that have less bias in the border regions.

This is a high-powered test. You need a lot of observations at \(c_0\) to distinguish a discontinuity in the density from noise. Let me illustrate in Figure 6.16 with a picture from McCrary (2008) that shows a situation with and without manipulation.

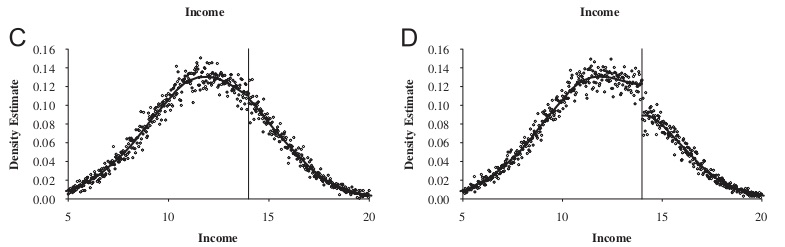

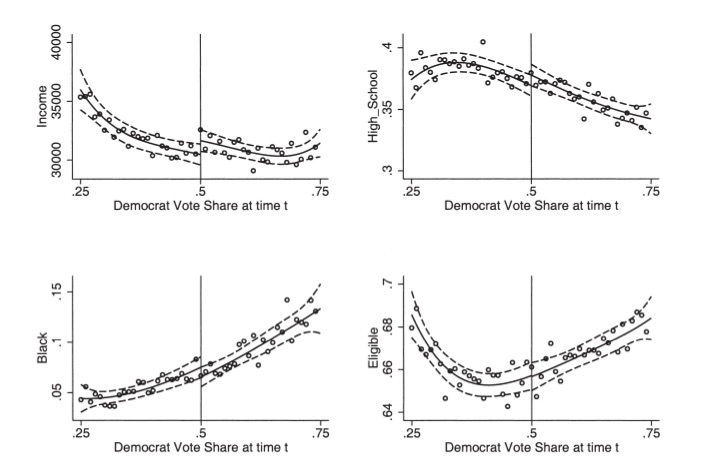

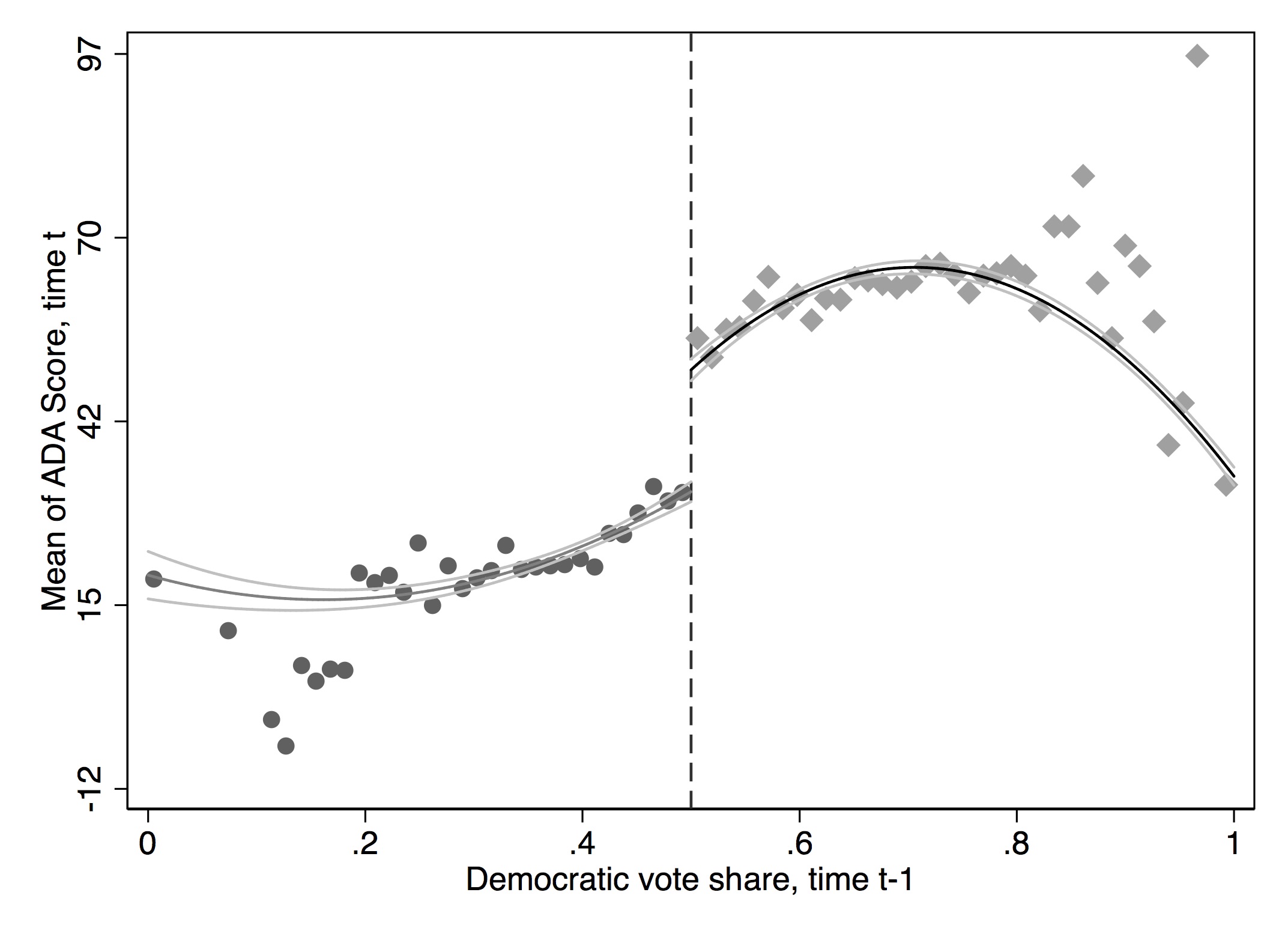

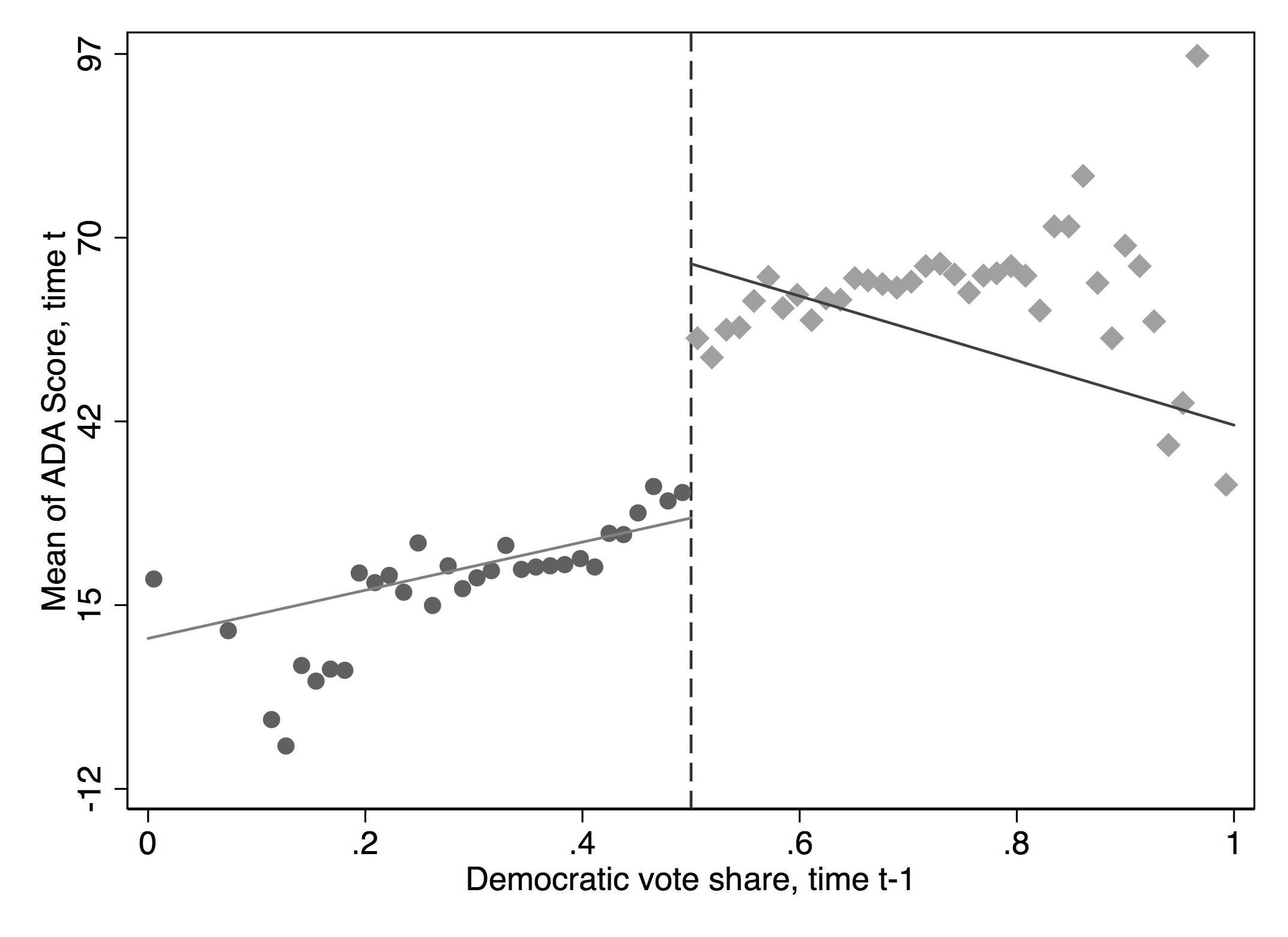

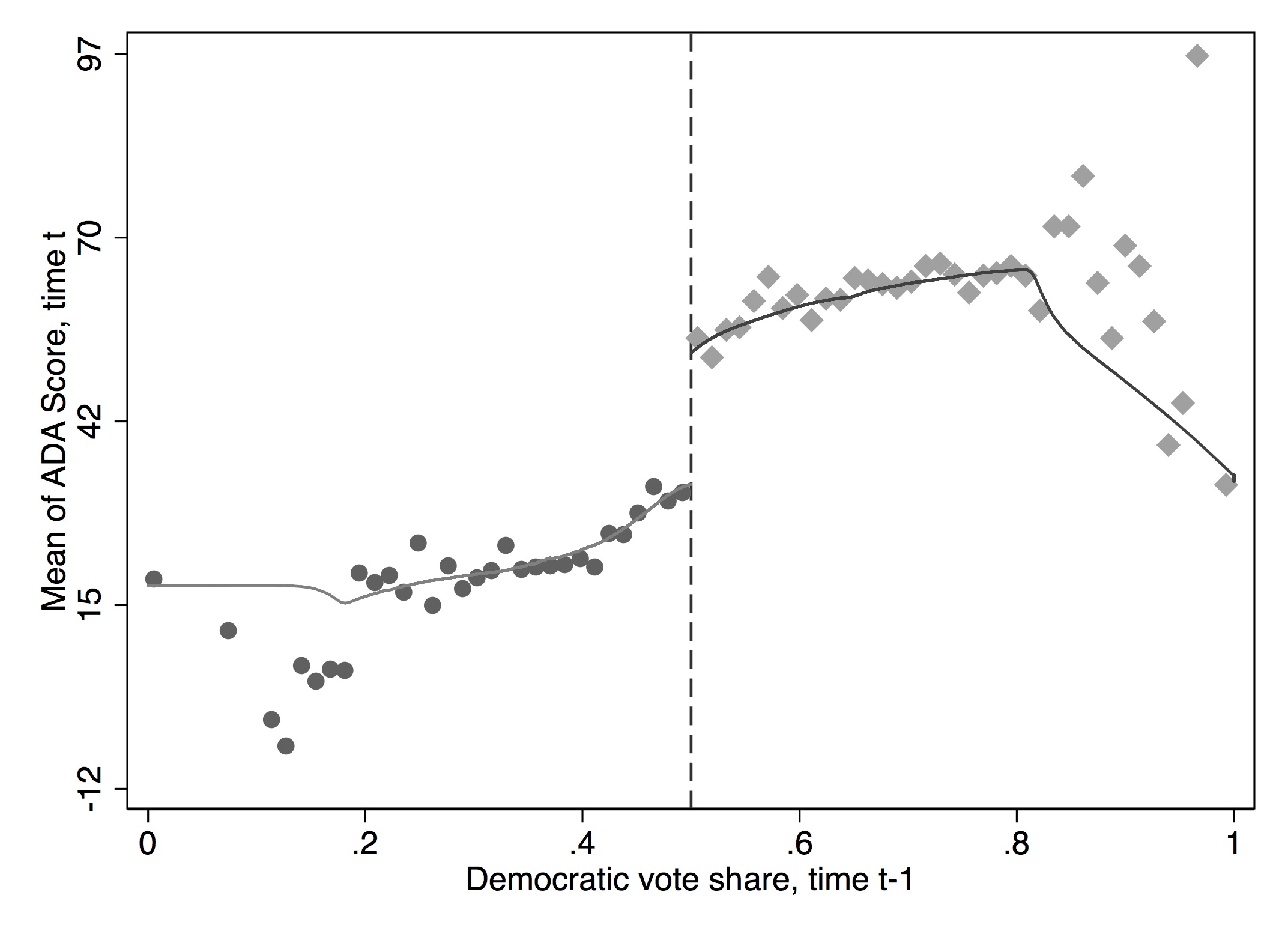

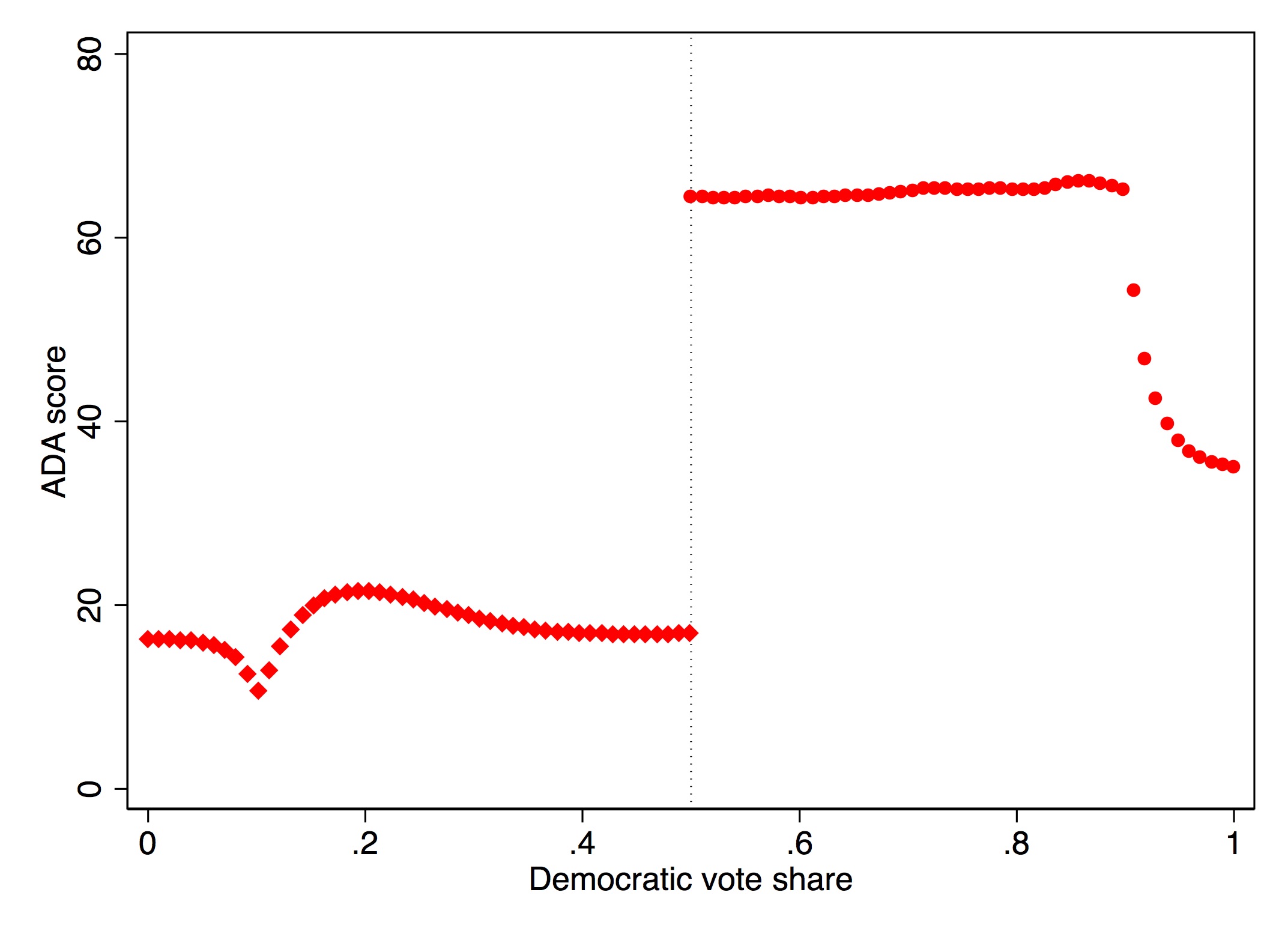

It has become common in this literature to provide evidence for the credibility of the underlying identifying assumptions, at least to some degree. While the assumptions cannot be directly tested, indirect evidence may be persuasive. I’ve already mentioned one such test—the McCrary density test. A second test is a covariate balance test. For RDD to be valid in your study, there must not be an observable discontinuous change in the average values of reasonably chosen covariates around the cutoff. As these are pretreatment characteristics, they should be invariant to change in treatment assignment. An example of this is from Lee, Moretti, and Butler (2004), who evaluated the impact of Democratic vote share just at 50%, on various demographic factors (Figure 6.17).